About

The current section consists of extracts from a to-be-published book written by Wizardry and Steamworks that works to fill the gap between the technological domain of computers and politics. Due to capital concentration that gap becomes ever smaller, such that it is imperative that people involved with politics, especially those involved in governance, are made aware of the emerging dangers and pitfalls, and are made able to disambiguate misinformation pertaining to the domain of technology. The initiative itself takes place in a context similar to the proverbial "tulip fever" of "green energy" back in the 70s, when "peak oil" was deemed to be a danger on the horizon, but with amplified significance when applied to computing, given that the low cost, wide spread and high impact of computers on society make such bubbles segmented yet repeatable as technology progresses.

For now the section will explain basic concepts, in the hope of helping politicians understand technology. For the purpose of this book, the technology is framed into a "political context" by insisting on its social and/or economic impact rather than on "the technology itself". Here and there, the terminology might go in depth, but only for the sake of completeness; the focus should still be on the political impact of the technology being explained.

The Politics of Artificial Intelligence

Origin

The current Artificial Intelligence (AI) boom is based on a long-existing and forgotten trope, namely the Eliza bot developed by Joseph Weizenbaum in 1967 at MIT, which leveraged some psychological primitives in order to fool its users into believing that the chat bot was, in fact, operated by a human. Historically speaking, Eliza kicked up quite a kerfuffle, with lots of drama taking place world-wide as a response and with people swearing they were actually talking to a human being instead of a robot. Later iterations of Eliza, one example from retro-computing being "Racter" (Mindscape, 1986) for the Commodore Amiga, were, in addition to using psychological primitives, also loaded with knowledge, very similar to what ChatGPT is now, in order to add the element of offering "insightful responses" with knowledge that the chat interlocutor might not have had. Pure "Eliza", by contrast, is mostly just an elegant way of rotating sentences around in order to make the chat interlocutor think they are talking to a real person. None of this is new.

As a follow-up, if you like, one of the funniest events in post-modern human history is Deep Blue defeating Kasparov at chess, another drama that led Kasparov to threaten to sue the venue, going as far as claiming that he was, in fact, playing a human being that was somehow hidden within the computer.

In principle, both of these events, and such reactions in general, hinge on a misunderstanding of what A.I. is, one that pertains to engineering more than to computer science.

Computer Engineering & Science

As regards computer engineering, A.I. is conflated with a bunch of terms that it should not be conflated with. Similarly, computer science has precise definitions of some terms that also tend to get conflated with A.I.

Computer Science

In order to make the situation clear, the following holds true and, to the limit of current human knowledge (including A.I.), the issue has not been solved:

"given a set of inputs and a program, it is undecidable whether the program will ever terminate"

This is the definition of the "halting problem", which leads back to Leibniz in 1671, the mathematician who thought to devise a machine that would automatically compute proofs for him. It is closely related to Gödel's incompleteness theorems and to "das Entscheidungsproblem" posed by Hilbert and Ackermann in 1928, which Church and Turing showed in 1936 to have no general solution.
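The reasoning behind the halting problem can be sketched in a few lines of Python. This is only an illustration of the classic diagonal argument, not a proof: the names `make_paradox` and `check_decider` are made up for this example, and the "deciders" being tested are deliberately naive stand-ins for any claimed halting oracle.

```python
def make_paradox(halts):
    """Build a program that does the opposite of what `halts` predicts about it."""
    def paradox():
        if halts(paradox):
            while True:      # predicted to halt, so loop forever instead
                pass
        return "halted"      # predicted to loop forever, so halt immediately
    return paradox


def check_decider(halts):
    """Return (predicted, actual) halting behaviour of the paradox program."""
    paradox = make_paradox(halts)
    predicted = halts(paradox)
    if predicted:
        # By construction the program now enters an infinite loop,
        # so it is not executed here; it does not halt.
        actual = False
    else:
        paradox()            # returns immediately
        actual = True
    return predicted, actual


# Two naive candidate "oracles" (hypothetical): both are wrong about the paradox.
print(check_decider(lambda program: True))    # (True, False)
print(check_decider(lambda program: False))   # (False, True)
```

Whatever the candidate decider answers, the program built from it behaves the other way around, which is why no general decider can exist.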

What is essential to retain is that a cognisant A.I. that would perform a task with a desired output cannot be built until this fundamentally mathematical problem is solved (not even necessarily pertaining to computers).

You can, however, build something that, depending on the set of inputs, might produce some output, yet it is not determinate that whatever that something outputs will also be desired or useful.

This is very critical because you do not want to entrust "A.I." to drive a spaceship that "might or might not" land on the moon, under the guise that the "A.I." might somehow know anything more than matching up and correlating patterns, which is more of what one would call, a probabilistic crap-shoot not too far away from shooting someone out of a huge honking space cannon and hoping they get to the moon!

You could stop here, all of the rest is irrelevant and only a filler judging on smaller cases and disambiguation of the term "A.I." and what it is used for.

"Das Entscheidungsproblem"

Translated plainly, the term means "the problem of deciding", which is the fundamental reason why A.I. would never be able to "write software". In order to explain this, one needs a language simpler than English and something more abstract, so here are two examples that are very easy to relate to.

The Mathematica Program

"Mathematica" is some software developed by Wolfram that compared to standard "scientific calculators" can be given as input a formula and the software is designed to apply mathematical operations in order to reduce the input formula and solve the problem. For example, you could write an integral equation and "Mathematica" will apply all methodologies in order to expand and reduce the expression symbolically, without substituting numbers like "scientific calculators" would do in order to produce a numeric result.

However, just like in real life, it is completely possible for some polynomial to be crafted that cannot be reduced or that, while being reduced, expands instead, which turns out to be very frustrating for the person solving the problem. That person will have to "empirically decide" when it is time to stop, backtrack to some point where they think a different path could have been chosen, and then perhaps try again. Similarly, it is possible for polynomials to exist that cannot be reduced (of course, within the constraints of a context, for instance natural numbers instead of imaginary).

What happens in those situations with "Mathematica" when solving or reducing an equation is that the software includes a timer that at some point will prompt the user with a soft-error along the lines of: "spent ... amount of time attempting to solve, but could not find a solution [convergence], should I stop or continue?", at which point the user has to decide whether the problem they have input to "Mathematica" is "just too tough to solve" or whether perhaps the machine has insufficient power to solve the problem.
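The "stop or continue?" situation can be imitated with a step budget. The following is a minimal sketch, not how "Mathematica" works internally: it uses the Collatz iteration as a stand-in for a computation whose running time is hard to predict, and the function name `collatz_steps` and the budget values are assumptions chosen for illustration.

```python
def collatz_steps(n, budget):
    """Count Collatz steps until n reaches 1, giving up after `budget` steps.

    Returns the step count, or None when the budget runs out, in which
    case nothing is known: the computation might finish one step later
    or never.
    """
    steps = 0
    while n != 1:
        if steps >= budget:
            return None      # "should I stop or continue?"
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


print(collatz_steps(6, budget=100))    # 8
print(collatz_steps(27, budget=50))    # None: budget exhausted, no verdict
print(collatz_steps(27, budget=1000))  # finishes once the budget is raised
```

The machine can only report "not finished yet"; deciding whether to raise the budget or give up is left to the person in front of the screen.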

For that reason, A.I. cannot solve problems and it cannot write software.

However, "modern programming", in particular for large programs and especially when using Object-Oriented languages, frequently needs "templating" or creating stubs for an API, all of which are mostly very thin files without much content, just identifiers that "just have to exist" in order to match the overall aspect of the program (and that are also there for further development). Creating these files is very laborious and it is something that can be handled by code generation, which, depending on the sophistication of the algorithm, can just as well be an A.I. However, the idea that an A.I. will write code from scratch that would make sense, for non-trivial and not-known problems, is just ridiculous.
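To show how little "intelligence" such stub generation needs, here is a minimal sketch of a template-based generator. The function `generate_stub` and the class and method names passed to it are hypothetical and only serve the example.

```python
def generate_stub(class_name, methods):
    """Return Python source for a class whose methods exist but do nothing yet."""
    lines = [f"class {class_name}:"]
    if not methods:
        lines.append("    pass")
    for name in methods:
        lines.append(f"    def {name}(self):")
        lines.append(f"        raise NotImplementedError('{name} is not implemented yet')")
        lines.append("")
    return "\n".join(lines)


# Hypothetical API surface, chosen only for illustration.
source = generate_stub("InvoiceService", ["create", "cancel", "refund"])
print(source)
```

The generator produces identifiers that "just have to exist"; it contributes nothing to what the methods should eventually do.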

Other examples include just plain code generation like Intellisense in IDEs, and all the way up to Clippy included in Microsoft Office back in the 90s, that are useful but are not sentient in any way, nor do they solve "the problem of deciding".

The Fixed-Point Combinator

The fixed-point combinator in lambda calculus is a special function that can be used to implement recursion. Consider a lambda term $g$ and the fixed-point combinator $Y = \lambda f.(\lambda x.f\,(x\,x))\,(\lambda x.f\,(x\,x))$, with the following application of $Y$ to $g$ with two $\beta$-reductions and a substitution that take the expression to its $\beta$-normal form:

$$
\begin{aligned}
Y\,g &= (\lambda f.(\lambda x.f\,(x\,x))\,(\lambda x.f\,(x\,x)))\,g \\
     &= (\lambda x.g\,(x\,x))\,(\lambda x.g\,(x\,x)) \\
     &= g\,((\lambda x.g\,(x\,x))\,(\lambda x.g\,(x\,x))) \\
     &= g\,(Y\,g)
\end{aligned}
$$

The problem is that without the substitution that folds the expression back into $Y\,g$, the application of the Y-combinator to the lambda term $g$ would have never halted:

$$
Y\,g = g\,(Y\,g) = g\,(g\,(Y\,g)) = g\,(g\,(g\,(Y\,g))) = \dots
$$

and instead would have expanded forever.
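The endless expansion can be observed directly in a language that evaluates arguments eagerly. The sketch below is an illustration in Python rather than pure lambda calculus: `Y` is the textbook combinator, `Z` is its well-known strict-evaluation variant that delays the self-application, and `factorial_step` and `y_diverges` are helper names made up for this example.

```python
# Textbook Y combinator: under eager evaluation x(x) is expanded before
# f is ever applied, so the expansion never stops on its own.
Y = lambda f: (lambda x: f(x(x)))(lambda x: f(x(x)))

# Z combinator: x(x) is wrapped in a lambda and expanded only on demand.
Z = lambda f: (lambda x: f(lambda v: x(x)(v)))(lambda x: f(lambda v: x(x)(v)))


def factorial_step(recurse):
    """One unrolled step of factorial; `recurse` stands for the function itself."""
    return lambda n: 1 if n == 0 else n * recurse(n - 1)


def y_diverges():
    """Return True if applying Y exhausts the interpreter's recursion limit."""
    try:
        Y(factorial_step)
    except RecursionError:
        return True
    return False


factorial = Z(factorial_step)
print(factorial(5))    # 120
print(y_diverges())    # True
```

Python does not decide that `Y` diverges; it merely runs out of stack, which is another form of an externally imposed budget.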

Using lambda calculus, which is a Turing-complete language, is perhaps the best way to demonstrate the effects of the halting problem, or the decidability problem, or "Das Entscheidungsproblem" (answered in the negative by Church and Turing in 1936). It is also an NP-hard problem that has not been solved and that, per Turing's proof, cannot be solved by any general algorithm.

Racking a bazillion computers on top of each other and connecting a gorillion networks together will just not make the halting problem go away.

Engineering

In computer engineering, whatever people refer to as "A.I." gets split into other notions such as:

- algorithm,

- expert system,

- A.I.

where "algorithm" covers most of what politicians refer to as "A.I.", examples include:

- "lights turning on during night time", which is just a "heuristic" (to be understood as, "arbitrary" and not fixed) decision that is taken on a series of inputs, for instance, depending on, say, light sensors, computed time of day, etc,

- "chess", given two perfect chess players, the outcome of chess is expected (though not provenly) to always be a draw, like tic-tac-toe on a smaller scale or StarCraft on a much larger scale. Chess is a deterministic / algorithmically determined game where, given sufficient computational power to push beyond the event horizon, the outcome is known in advance and any opponent can be beaten or a draw can be forced. Nowadays, chess engines on commodity hardware already outplay the best human players.
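The tic-tac-toe comparison can be checked exhaustively, because the game is small enough to search completely. The following is a minimal minimax sketch; the board encoding (a nine-character string) and the function names are choices made for this example only.

```python
from functools import lru_cache

# All eight winning lines on a 3x3 board indexed 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if a line is complete, otherwise None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board, player):
    """Game value with perfect play: +1 X wins, 0 draw, -1 O wins."""
    won = winner(board)
    if won == "X":
        return 1
    if won == "O":
        return -1
    if " " not in board:
        return 0
    other = "O" if player == "X" else "X"
    results = [value(board[:i] + player + board[i + 1:], other)
               for i, cell in enumerate(board) if cell == " "]
    return max(results) if player == "X" else min(results)


print(value(" " * 9, "X"))   # 0: perfect play from both sides ends in a draw
```

The same exhaustive approach applies to chess in principle; only the size of the search space differs.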

Planes that have an automatic pilot, and have had a fully fledged automatic pilot for decades, implement what is called an "expert system", which means that the algorithm, depending on a series of inputs, takes decisions and recommends them to the operator, who can reject or approve them. Examples include:

- "tesla cars", along the lines of "one more change to the algorithm and the car will drive perfectly, we swear!",

- "planes",

- "nuclear power plants"

Expert systems are mostly in place to solve very difficult tasks, but they explicitly rely on a human operator to make the final call because it is arguably unethical for a robot to take the final decision given the possible large-scale impact of the decision.
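The division of labour in an expert system can be sketched as follows. This is a toy illustration only: the rule, the thresholds and all names (`Recommendation`, `recommend`, `final_call`) are hypothetical and are not taken from any real avionics or plant control system.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    action: str
    reason: str


def recommend(altitude_m: float, descent_rate_ms: float) -> Recommendation:
    """The algorithm: turn sensor inputs into a recommendation (made-up thresholds)."""
    if altitude_m < 300 and descent_rate_ms > 10:
        return Recommendation("pull up", "descending too fast near the ground")
    return Recommendation("hold course", "readings within limits")


def final_call(rec: Recommendation, operator_approves: bool,
               fallback: str = "manual control") -> str:
    """The human: nothing is carried out unless the operator approves it."""
    return rec.action if operator_approves else fallback


rec = recommend(altitude_m=250, descent_rate_ms=12)
print(rec.action, "-", rec.reason)
print(final_call(rec, operator_approves=True))    # pull up
print(final_call(rec, operator_approves=False))   # manual control
```

The design choice worth noticing is that the recommendation and the decision are separate steps, so responsibility for the outcome stays with the operator.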

In a Political Context

The statement "A.I. will put people out of jobs" has been true for about a century, iff "A.I." refers to algorithms that take over menial jobs, for instance, carrying stuff around, issuing tickets, selling Pepsi, etc. However, remember that "A.I.", when it is mentioned in the media, frequently only refers to algorithms, never to expert systems and/or actual "A.I.".

The Big Boon

One thing that was irrevocably provided by "A.I." and, in fact, "machine learning" is the ability to generate work, whether scientific or otherwise. For example, there is a whole class of "applying machine learning to subject X" scientific publications that do nothing more than use an algorithm in order to check whether it is able to solve a problem and, if not, how much of the problem it can solve. There is an indefinite number of publications that you can just bulk-generate by using "machine learning" and a hyped or quirky cross-domain application thereof, to add to the coolness factor of the publication.

Another "boon" or applicability is within art where precise and pre-determined outcomes are not necessary. It feels more like a throw of dice.

The Need for A.I. Regulations

... is a solution to a problem that does not yet exist.

Conspiratorially speaking, it might just be a way for techno-oligarchs to pretend that "A.I. must be slowed down" in order to justify that they have not yet attained the "God"-levels of coolness they were promising, having hit the halting problem very early on and having had it explained to them that none of the "transhumanist" mumbo-jumbo they have been using to receive funds will ever come to be! In other words, it is a way to delay the popping of the "A.I." bubble, when investors will figure out that "A.I." is not the second coming of Christ, as the techno-oligarchs portray it to be, and will be desperate to recuperate their money. As for any market, buy early but also be sure to sell early enough, before it becomes too mundane!

On the other hand, perhaps Tesla "autopilot" cars should be regulated. Applying whatever program was pre-trained in Silicon Valley to a ... less democratic place with unmarked roads, wild bears roaming through the streets, outright misleading road signs (points left to exit, leads into a mud wall), the occasional window-washing rapist and roadblocks that should not be there would be a very funny experience, but just for those that stand outside the car and at a safe distance.

More than likely, yet completely unrelated to "A.I.", due to A.I. arriving much later than expert systems or algorithms, there were some U.N. international treaties in place to prevent the usage of autonomous robots in warfare due to ethical concerns, akin to those that made people design "expert systems" instead of fully autonomous systems.

A clear modern-day exemplification thereof is the usage of CAPTCHA prompts by Cloudflare and Google that have driven a whole generation and more crazy with solving silly quizzes that, for an informed observer, are questionably only solvable by humans (in fact, there are whole sets of tools out there to bypass CAPTCHAs automatically with various techniques, e.g. listening to the voice recording instead and interpreting the voice to read out the letters or numbers, using A.I.!). The losers in this case are, of course, the people that the owner of the website might not even know they are blocking; the owner chooses Cloudflare purely because it is economically cheap, and either does not care enough or is too trusting, such that the owner starts to lose business without knowing why. Just like people that delegate responsibility to algorithms, users of Cloudflare that would have an alternative available can clearly afford the losses in order to not care. A very slow deflation of the bubble that is A.I., if you will.

Another concern that is uttered very frequently by politicians is the usage of chat bots ("A.I.") in order to provide customer support. However, the disgruntlement is mostly due to very vocal people detecting the usage of a chat bot and then resenting the service. In reality, "chat bots" for customer support are very closely related to automated answering machines where, go figure, probably more than half of the requests on behalf of customers can be solved automatically without even needing human assistance. While most people consider themselves "technologically apt", the reality is that most humans tend to overlook the obvious, such as plugging the machine into the wall socket, pressing the button to turn the machine on, and so forth, all of which can be solved by a simple FAQ or by using triage, as per the answering machine, to speed up the more complex problems that customers might have. Unfortunately, while the chat bot is visible, the reality that you might have a very complex issue and are in a queue waiting behind a bunch of people that did not read the manual, when, on the contrary, you are a long-term user, is not that apparent. A chat bot could touch on "related" topics that might make the user think, and it might prevent a human operator from having to intervene.

Machine learning, more precisely, is good at training an algorithm against a fixed set of inputs such that any input that falls within some entropy range of similarity and variation will be labeled similarly by the algorithm. This leads to an observation-frame confusion where the operator is tempted to constantly adjust or train the algorithm to match even more inputs that were not matched, up to the point of biasing the algorithm such that it starts to match completely unrelated things. Train the algorithm on too many lemons and it will match an orange. And what happens when it is not fruits but real people: what will be the plan when those people ask for justice for being mislabeled?
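The lemons-and-oranges effect can be reproduced with a deliberately simplistic model. The sketch below is not a real machine learning pipeline: it "learns" a centre and a tolerance from a single made-up feature (hue in degrees), and all numbers as well as the names `train` and `is_lemon` are assumptions for illustration.

```python
def train(hues):
    """Learn a centre and a tolerance radius that cover every training sample."""
    centre = sum(hues) / len(hues)
    radius = max(abs(hue - centre) for hue in hues)
    return centre, radius


def is_lemon(model, hue):
    """Label anything within the learned tolerance as a lemon."""
    centre, radius = model
    return abs(hue - centre) <= radius


typical_lemons = [58, 60, 62]    # made-up hue values
odd_lemons = [48, 45, 40]        # unusual lemons the operator wants matched too
orange = 41                      # made-up hue of an orange

narrow = train(typical_lemons)
wide = train(typical_lemons + odd_lemons)

print(is_lemon(narrow, orange))  # False
print(is_lemon(wide, orange))    # True: the widened model now "sees" a lemon
```

Every extra sample that the operator forces the model to accept widens the tolerance, and at some point things that were never lemons fall inside it.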

Another problem that "A.I." presents is traceability, namely, the ability to determine both the inputs and the algorithm that generated the outputs, as a consequence of the halting problem, which makes "A.I." even less useful. In many cases "here, I found the solution!" does not fly when it must be known how that solution has been found. In such cases, anything before the output could just as well be replaced with a random shuffle, conditioned or not.

Supercomputing (Computational Capabilities)

One of the largest misunderstandings of computing, particularly derived from the crowd that believe that the universe is deterministic, is that every problem can be solved if you throw enough computational power at it.

This has led to the creation of giant computer farms that are fed difficult problems, and a lot of these farms churn on problems numerically and try to solve them. As an aside, in terms of computer security, some algorithm creators in the past hinged their ciphers on the idea that computers with the computational power that is now available would never exist, such that there is some merit to computational power. However, as the sentence implies, the assumption was naive, and modern cryptography is based on problems that cannot feasibly be reversed by an adversary (discrete logarithms, elliptic curves, quantum cryptography, etc.) such that the mistake would not be made again.

Even though the former sections have already demolished the idea that the problems of humanity hinge on the lack of computational power, there is an additional, mathematically provable and very trivial observation that tends to escape even the most famous of computing or A.I. pioneers making claims about computing and/or A.I., namely the matter of the complexity of algorithms.

That is, it is mathematically provable that, for a sufficiently large input of size $n$, an algorithm that is known to terminate and has $O(n \log n)$ time complexity will terminate much faster using computational power $p$ (or $m$ clustered machines with partitioned tasks) than another algorithm that is known to terminate but has $O(n^2)$ time complexity with $P \gg p$ computational power (or $M \gg m$ clustered machines).

A reduction of that formulation in simpler terms is the observation that bubblesort on a super-computer, with $O(n^2)$ time complexity, will, given a sufficiently large dataset of numbers, finish much slower than a sort running on a PC 386 (from the 90s) with an $O(n \log n)$ time complexity.

The former observation is absolutely trivial, or should be, to any programmer such that any discussion on how any problem can be solved with more power is just null and void even to non computer-scientists that are just programmers.
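A back-of-the-envelope calculation makes the point concrete. The sketch below assumes the slower machine runs an $O(n \log n)$ sort such as merge sort, and it uses invented throughput figures (operations per second) for both machines; constant factors are ignored, so the numbers only show the trend.

```python
import math

SUPERCOMPUTER_OPS = 1e15   # hypothetical operations per second
OLD_PC_OPS = 1e6           # hypothetical operations per second


def bubblesort_seconds(n):
    """Rough running time of an O(n^2) sort on the fast machine."""
    return n ** 2 / SUPERCOMPUTER_OPS


def mergesort_seconds(n):
    """Rough running time of an O(n log n) sort on the slow machine."""
    return n * math.log2(n) / OLD_PC_OPS


for n in (10 ** 6, 10 ** 9, 10 ** 12):
    fast_machine = bubblesort_seconds(n)
    slow_machine = mergesort_seconds(n)
    winner = "supercomputer" if fast_machine < slow_machine else "old PC"
    print(f"n={n:.0e}: bubblesort {fast_machine:.3g}s, "
          f"mergesort {slow_machine:.3g}s -> {winner} finishes first")
```

With these assumed figures the machine that is a billion times faster still loses once the dataset reaches about a trillion items, because the gap between the two growth rates eventually outweighs any fixed hardware advantage.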

Software

There are various types of software that are ... associated with A.I., but most of these just use "machine learning", which leads to a wide range of false positives and hence defeats the purpose in most cases:

- TensorFlow is a machine learning library that can be used to train an "A.I." / algorithm to identify key aspects within an image and attribute labels to the image depending on what was detected. If one uses the default pre-trained package, the results are obviously very bad. If one trains the algorithm using specific inputs, then the "A.I." will be trained to identify those and only those inputs, including their variations, which is a bit of a tautology when you think about it, a tautology that will just fail when a key characteristic is removed from the inputs. As a short example, you can train TensorFlow to identify police cars based on the premise that police cars are white and blue, but if the police turn up in an unmarked car, then the whole algorithm fails, which is why it should never be used for anything critical.

- OpenCV is a very old library that is capable of doing a great many things with and to images.

Frequently Asked Questions

- What is Artificial General Intelligence (AGI) / Artificial superintelligence (A.I. that matches or surpasses human cognitive capabilities across a wide range of cognitive tasks.)?

A marketing term like "information super highway" to describe something as plain as the Internet.

- Will A.I. ever be sentient?

We do not know what "sentience" means nor what it implies, but we like to think of ourselves as "sentient". A Tamagotchi from the 90s had people raise it like a pet when there was no such talk about A.I. Was the Tamagotchi sentient?

- What is A.I.?

Initially, research into neural networks and machine learning. Now, it is an umbrella term for anything ranging from a (linear abstract machine) algorithm up to aliens, while going through a lot of promises that will never materialize because there are fundamental problems that have not yet been solved. In terms of marketing, and given that this is the politics section, A.I. is a bubble created by overhyped science, similar to the environmentalist boom in the 70s. It is a blessing for side-researchers that can churn out stacks of papers just by applying established algorithms in different contexts and reporting on their performance, and hence expand their publication list (similar to physics and papers with hundreds of authors where it is unclear who did what).

- Is A.I. dangerous?

Very. Just like a car.

- Are any state and/or legalistic regulations needed to prevent the use or misuse of A.I.?

A.I. simply does not have that large of an impact (even if something as trivial as an "algorithm" or "robot" is now also filed under A.I.). Just like for crypto-coins, there might be problems with fraud or scams using A.I., second-hand damages created by "malfunctioning" robots, "misinformation" if you believe that sort of thing yet the serious cases would be processed under scams and/or fraud, some security issues regarding existent software and hardware that base their security on weak assumptions and might be defeated by A.I. (CAPTCHAs, etc) and so on.

In any case, the doom-mongering is just for the effect - it's not nuclear radiation that would render the earth unusable.

- Why is everyone talking about A.I.?

Because the crypto-coin talk fell out of fashion. More than likely there are large interested parties that would like to keep the bubble growing. Even for scientists it is a way to justify their work and to produce large numbers of publications with very little effort.

- What sort of problems does supercomputing solve?

Large scale problems where millions of datapoints have to be tracked have large computing needs. However, it is rarely the case that supercomputing solves any fundamental problem. The discovery of the Higgs boson at C.E.R.N. was a practical experiment to practically demonstrate / reveal in reality the existence of a theoretical particle, yet the existence of the particle had already been proven theoretically... by Higgs in 1964.

- Will generative A.I. replace programmers?

The closest metaphor for A.I. that writes code is a painter that is looking at the computer screen of a programmer. The painter does not truly understand what they see on the computer screen, in the sense that they can barely make any sense of the semantics of the code that the programmer writes. After staring at the programmer and watching them work for a while, the painter pulls out a canvas and "paints" some code on the canvas, which may look very convincing because it might mimic what the programmer writes, especially given the good "photographic memory" of the painter, but ultimately the code in the painting might not even compile or work.

Aside from the metaphor, "code generation" has been a big domain that got a lot of traction due to object-oriented programming, which imposes some sort of structure that has to be generated even if parts of the structure are never used. This includes large projects where people might be tasked to just create a bunch of files with just some "skeleton code", for the sake of templating objects or creating API stubs till they can be properly implemented by programmers. Similarly, "code generation" is also used for refactoring or refinement of code, which is typically a tiresome process where the very same procedure has to be applied to different code-blocks, such that it does not require any "creative contribution" (or a programmer, except perhaps one to check at the end).

To wrap this in a circle with the painter metaphor, up to now "code generation" relied on "static analysis" which was mainly a mathematical or analytical way to approach code and rendering follow-up code by understanding or making sense of the existing code. A.I. code generation on the other hand happens similar to a painter that does not understand the code at all, but given a painter that has seen so much code and what it looks like, the artist might even create a painting with some code that might actually compile.

The previous image was found on Google by performing an image search for "hacker". The image is an artist's rendition of what a hacker looks like and, more than likely, the artist that created this image, very much like an A.I. does not know too much about "code" in general such that the right-hand-side of the image contains a "beginner program" (in a "HelloWorld" class) in Java that prints out a message passed to a method, specifically, something very trivial and too basic to be part of an image that would represent a "hacker".

Short answer, no, not at all, it's about as valid as saying that Visual Studio will replace programmers because it already had some "A.I." that could render code automatically even before "A.I." became some mainstream hype.

The Folly of Virtual Private Networks (VPNs) and The Suppression of Real Anonymity

Virtual Private Networks (VPNs) were initially devised in order to establish a virtual network on top of a physical network between machines distributed over long distances or over different networks (for example, on top of multiple Internet Service Providers, or ISPs).

Typically, the topology for a VPN is a star-shaped network, with multiple clients connecting to one central computer that acts as a mediator, hub or connector between all the machines that connect to it. Given networking principles, it is possible for client machines to route their traffic through the central computer that all machines connect to, such that the Internet traffic will flow through the central computer.

Similarly, the connection between the individual machines / clients and the central computer / gateway is typically encrypted.

Here is a diagram of how a connection to the Internet, through a VPN gateway takes place:

and the diagram can be refactored in order to take into account encryption, which will be represented by bypassing the ISP (in terms of data visibility due to the traffic being encrypted):

The sketch scales to any number of clients that all connect to the VPN gateway, typically masking their data from the local ISP, and then route out through the gateway to the Internet.

A VPN does not provide anything additional concerning anonymity, but a VPN just moves the problem of identity from the local ISP to the central hub that all computers connect to. In that sense, a VPN will only, at best, hide the traffic from the local ISP but it will not anonymize the traffic in any shape or form. Here are some quick conclusions based on the former:

- a hub or VPN provider is able to observe the following about the clients connecting to the VPN provider:

  - the websites they connect to (but not the activities they perform) (via DNS and/or IP),

  - the times that the connecting clients connect to the VPN provider,

  - identifying information of the connecting clients that can be correlated with the websites being visited (most VPN providers are commercial, so the connecting clients would more than likely have bought the service using a credit card and provided identifying information),

- the local ISP will only see encrypted traffic between a connecting client and the VPN provider; the ISP will know that the connecting client connects to the VPN provider, but the ISP will not be able to see the traffic or what websites are browsed through the VPN provider.

In many ways, connecting to a VPN provider, is like having another ISP, on top of the ISP that is already providing connectivity.
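The shift of visibility from the ISP to the VPN provider can be written down as a small model. This is only a sketch of who learns which endpoint of a connection: the function `observers` and the example addresses are hypothetical, and encryption, DNS and timing are deliberately left out.

```python
def observers(client, site, use_vpn, vpn="vpn-gateway"):
    """Return what each party along the path learns about one connection."""
    if not use_vpn:
        return {
            "isp": {"source": client, "destination": site},
            "site": {"source": client},
        }
    return {
        "isp": {"source": client, "destination": vpn},   # sees only the tunnel
        "vpn": {"source": client, "destination": site},  # sees both ends
        "site": {"source": vpn},
    }


direct = observers("203.0.113.7", "example.org", use_vpn=False)
tunnelled = observers("203.0.113.7", "example.org", use_vpn=True)

print(direct["isp"])       # the ISP links the client to the website
print(tunnelled["isp"])    # the ISP only sees a connection to the gateway
print(tunnelled["vpn"])    # the VPN provider now links the client to the website
```

In both cases exactly one party sees the client address and the destination together; the VPN only changes which party that is.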

The Law

Companies that provide VPN access all tend to have clauses in their EULA stating that they will collaborate with law enforcement to the fullest extent, even in the event of a merely suspected legal issue. Typically this is based on a subpoena that legally compels the VPN provider to release information to the investigators.

With that said, it should be obvious that a VPN will not protect an individual from law enforcement. There are very many VPN companies, many of them very large, and just about every computer user suggests a VPN "for privacy", such that the data these companies collect (in some cases, as a legal obligation) must be off the charts. In some ways, this can be seen as a failure of local governance in a country that regulates ISPs so much that its constituents are now forced to export all their data (which, in a circular twist, is illegal due to the G.D.P.R.) to other countries in order to avoid local governing policies. The users from a country will thereby avoid the local authorities, the very same ones that are paid (via taxes) to provide protection, and even go as far as to trust a company in a remote jurisdiction that may or may not have the best interests of the users in mind.

The Real McCoy

A real anonymizing network is a network like tor or i2p, which is essentially just a collection of proxies (or, in context, imagine multiple chained VPNs) through which the user's connection is passed in order to become less observable to gateways between a client and the destination website. So far, there are no systematic attacks on the tor and/or i2p networks that would allow an attacker to observe a client, such that a network like tor or i2p is theoretically secure.

Attacks on anonymizing networks do exist, yet all of them are only possible in well-chosen scenarios that do not show up statistically (e.g. attacks when the established tunnel through all the gateways is conveniently short), and so far there is no known deanonymizing attack on tor that would hinge solely on the tor network. Tracking users with website cookies is trivial, and WebRTC will divulge the real IP, and both will still work on anonymizing networks like tor or i2p; however, those attacks do not attack the tor network, architecture or protocols, but rather leverage flaws in browsers or other case-by-case situations.

Even though attributed to Voltaire and written, in fact, by Kevin Alfred Strom, the quote "To learn who rules over you, simply find out who you are not allowed to criticize" is uncanny in context, given that tor and/or i2p networks that are designed to be anonymous are completely blocked by many websites, as well as leading to "death by CAPTCHA" due to Cloudflare security flagging the IP addresses of tor / i2p exit nodes as dangerous. All of this does not seem too incidental, and even institutions that deal with "human rights" sometimes block anonymizing networks from their website, sometimes due to "cargo-cult security" ("hey Bob, why are we blocking this website?", "dunno man, the router is from China, we didn't have any troubles with it yet, why?").

Both tor and i2p have a traffic pattern similar to the following:

where the traffic is deliberately routed through a number of nodes within the tor / i2p network, in order to ensure that the client is well-behind all the nodes or gateways leading to the Internet.

The idea is that if one of the nodes, such as the exit node, is compromised, then the preceding node, going backwards, would have to be compromised as well, and then the next one up, all the way back to the client. From a legal perspective, all the nodes / gateways between the client and the Internet would have to be approached and made to divulge the traffic, making it next to impossible to track down the client.
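The layering idea can be illustrated with a toy sketch. This is not how tor or i2p are actually implemented: the XOR "cipher", the node names, the keys and the destination `example.org` are all assumptions made up for illustration, and real networks negotiate session keys and use proper cryptography. The only point being shown is that each relay can peel off exactly one layer and thereby learns only the next hop, never the whole path.

```python
import json
from itertools import cycle


def toy_cipher(data: bytes, key: bytes) -> bytes:
    """Stand-in for real encryption: XOR with a repeating key.
    Applying it twice with the same key restores the input."""
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


def wrap(message: str, destination: str, circuit: list[tuple[str, bytes]]) -> bytes:
    """Client side: wrap the message in one layer per relay,
    starting with the exit node and finishing with the entry node."""
    next_hop = destination
    payload = message.encode()
    for name, key in reversed(circuit):
        layer = json.dumps({"next": next_hop, "data": payload.hex()}).encode()
        payload = toy_cipher(layer, key)
        next_hop = name
    return payload


def relay(payload: bytes, key: bytes) -> tuple[str, bytes]:
    """Relay side: remove one layer; only the next hop becomes visible."""
    layer = json.loads(toy_cipher(payload, key))
    return layer["next"], bytes.fromhex(layer["data"])


def route(payload: bytes, circuit: list[tuple[str, bytes]]):
    """Pass the payload through every relay, recording what each relay learns."""
    seen = []
    for name, key in circuit:
        next_hop, payload = relay(payload, key)
        seen.append((name, next_hop))
    return seen, payload.decode()


# Hypothetical three-hop circuit; the keys are placeholders, not real key material.
CIRCUIT = [("entry", b"key-entry"), ("middle", b"key-middle"), ("exit", b"key-exit")]

if __name__ == "__main__":
    onion = wrap("hello", "example.org", CIRCUIT)
    knowledge, delivered = route(onion, CIRCUIT)
    for node, next_hop in knowledge:
        print(f"{node} only learns that the next hop is {next_hop}")
    print("delivered:", delivered)
```

In this sketch the entry node knows who the client is but not the destination, while the exit node knows the destination but not the client, which is why every node in the chain would have to be approached in order to trace a connection back.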

Even in terms of conspiracy, it should be a red flag to anyone that so many companies and even "hacker groups" try so hard to dismantle tor and/or i2p when, in fact, they are true anonymizing technologies that do have privacy in mind. Conversely, the obnoxious pitch for VPNs, even from people that should know better, is excessively loud, even though a VPN does not anonymize a user at all but rather exposes their identity, along with all their traffic, to the company offering the VPN service.

Cloudflare and Outsourcing Security

This website is using Cloudflare too, so we're well on our way to become hypocritical politicians ourselves!

Cloudflare started as "project honeypot", which was an endeavor to blacklist a bunch of IP addresses by gathering various automatic attacks in the wild and then to provide a blacklist of IP addresses to people that would subscribe to updates in order to block these IP addresses for other machines not connected to "project honeypot".

Cloudflare swallowed "project honeypot" and additionally became a DNS server (name resolution, IP to website address and back) providing free services to anyone that did not want to run their own DNS server. Arguably, the one and only commercial success could be attributed to Cloudflare hiding the IP address of the real machine across the Internet by making all DNS requests resolve to their own servers and then passing the connection behind Cloudflare to the destination machine.

It was a great achievement over regular TCP/IP, which was never designed with privacy in mind and where an IP address can be looked up in ARIN in order to trace a website to its ISP and sometimes even to its geographical location. Unfortunately, ARIN and the Internet Consortium are regrettably populated by, well, politicians, just like yourself, that are not technical and are also economists needing to generate revenue, such that the concept of "privacy" eludes them. To the point, tracing an IP address to an owner could lead in many cases to instances of burglary, S.W.A.T.-ing (ie: sending the police to a person on the false information that they are dealing drugs) and other harassment opportunities that have been observed in the past and that affect businesses and private individuals such as celebrities. By proxying DNS, Cloudflare made it possible to hide the IP address of a website, and hence its geographic location, and more than likely prevented loads of drama from taking place over time.

Unfortunately, Cloudflare is still a commercial entity, such that they are bound to cooperate with law enforcement given an investigation, which means that they might be legally compelled to hand over user data. Another troublesome issue is that Cloudflare heavily penalizes traffic from anonymizing networks such as tor and/or i2p, leading to funny scenes such as "death by CAPTCHA" where a user is repeatedly prompted to solve puzzles with no end in sight. This makes users doubt whether Cloudflare's intentions are legitimate or whether they are a large data-mining operation themselves - after all, if they truly cared about privacy, surely a solution could have been found for users of anonymizing networks, but all "solutions" so far have failed to materialize. In principle, Cloudflare's defense is that anonymizing networks are frequently used for "attacks", but in reality the most wide-spread attacks in the wild are along the lines of denial of service, and neither tor nor i2p is able to sustain or "move" the traffic required to perform a successful DDoS attack (such attacks are more typically carried out over the "clearnet" using compromised machines).

We would argue that Cloudflare damaged the Internet incidentally because the price of using Cloudflare has been just a signup away, such that every person that did not know better, nor cared to do their own security or hire an expert, just used this amazing free service that promised to take away all their problems. More often than not, Cloudflare took away not only their problems but also their customers, given that Cloudflare has practiced blanket-bans in the past on entire networks that they deemed to be "compromised". Interestingly, at Wizardry and Steamworks, we can recall three incidents when we were asked by users why we were blocking them, only to realize that it was Cloudflare banning their entire networks (more often than not, these bans occur on IP blocks belonging to Chinese registrars).

With that being said, Cloudflare can also be seen as a result of bad political governance, or maybe even a lack thereof, or maybe just a lack of knowledge: running a DNS server on your own machine requires certain ports to be opened by ISPs, which ISPs typically do not open, claiming they are a security risk (well, they are not), and it would also require businesses or individuals that do not wish to disclose their identity to have the option to opt out of the ARIN / Internet Consortium WHOIS databases.

There is now even a gray-area where data protection laws are in conflict with ARIN / Internet Consortium regulations, with businesses feeding off this problem that seems well-known, unresolved and now profitable due to being in a shady area of the law. More precisely, ARIN / Internet Consortium require the owner of a domain to fill in the WHOIS information using actual real information; in other words, the owner of a domain (ie: grimore.org) is expected to fill in their full name, address and even their phone number in the WHOIS database, a database that can then be queried by just about everyone on the Internet. At the same time, data privacy laws, such as the G.D.P.R. or the California Consumer Privacy Act (C.C.P.A.), outlaw the requirement to provide such data in case the client refuses to offer it, such that the two sets of rules are now in conflict. The resulting black-market business is that registrars that sell domain names also add a "paid option" to have "additional security", which essentially involves acting as a proxy on behalf of the customer by filling in their own company data or even not offering any data at all to WHOIS (which makes them break the rules themselves). Cloudflare would technically protect the user from all that, and even if the user adds their own personal data to the domain, Cloudflare would proxy the IP such that the real IP would not be revealed, and it does so as a free service.

Over the years there has been growing skepticism about the truthfulness of Cloudflare's claims, in particular related to privacy, but also stemming from the observations of people that had to deal with real DDoS attacks. It was discovered that Cloudflare's "I'm under attack" button will provide some DDoS protection, but that the protection will be turned off after a while in case the DDoS attack is too severe for Cloudflare as well, thereby nullifying the pretense of security as well as offering attackers a vector for determining the real IP address of a website.

The privacy concerns, on the other hand, relate more to Cloudflare "normalizing" a "man in the middle" (MITM) arrangement where traffic arriving from both sides, client and server, is, under certain configurations, decrypted by Cloudflare such that all the activity of a person browsing the customer's website is visible in plain-text to Cloudflare. This includes credentials used when logging in to websites behind Cloudflare, which Cloudflare has the ability to observe in plain-text if they so desire (even though, clearly, that is something they would deny doing). Just like with VPNs, but even worse, given that a MITM could observe not only the websites that a client connects to but also the exact activity, credentials, chat, etc, the accumulation of private data on Cloudflare servers must be phenomenal and, well, you can only trust them on good faith that they do not look at that data and/or that they delete it periodically ("hey Bob, you know we caught all these terrorists and monitored their traffic using this cool software...", "right", "well, we'd have to shut it down now", "right, because we won and the terrorists are defeated", "well yeah, but I'm thinking Bob, what if we just... leave it running to collect data?").

The Politics of the Cloud

Intentionally evasive, "the cloud" consists of a network of computers that are meant to provide a service. In political terms, the term "the cloud" intentionally erodes the concept of "private property": the notion of some elusive network of computers that are able to jointly provide a service has the effect of making it ambiguous to whom the data belongs as well as where that data is placed.

The political implications in terms of economics and logistics almost grant a barrier of occlusion to the content that is served by the cloud, because with no clear ownership and without knowing in which jurisdiction the data is to be found, it becomes very difficult to hold someone responsible.

In reality, "the cloud" just specifies the kind of technology that is used to deliver a service, but by hinging on the lack of technical proficiency of other people, the barrier of occlusion works and sometimes even works as a double-blind between the owner of the data or service and the service provider itself.

After the spreading of the term "cloud", world events led to the concept of "deplatforming" or "cancel culture", where the outrage has been that important political figures have been, well, more or less fired from their jobs or have had their own services barred from "cloud" or "cloud-like" platforms such as YouTube, Google in general, Twitter, etc. Interestingly enough, given that the member countries of the European Union always try to plagiarize or import scandals from the United States, when the concept was brought to the EU it did not hold any water nor did it ruffle any feathers, and that is mainly because the EU still runs on the older economic principles where a very clear distinction of who owns what is always made. For Europeans, it was clearer than for people in the United States that, say, a company like YouTube is a privately owned company, even if it belongs to shareholders instead of just one person, such that any actions they take with their own provision of service cannot truly be contested unless some sort of litigation is intentionally pursued. Even legally speaking, ever since the inception of these "megalodon" companies, the terms of service (ToS) have always been very clear on the matter and have stated that the company reserves the right to cancel the service to any customer as they see fit and for whatever purpose, such that it was never opaque what sort of rights a customer has and what the limitations of those rights are.

As with too much trust given to VPN services or too much trust granted to outsourced security, the terms and conditions and the business model of the service provider always have to be consulted. Even though "cloud services" do benefit society in an altruistic sense, it is clear that, given that they are registered businesses that must be able to pay for their own consumption (employees, maintenance, hardware, utilities, etc), running on empathy alone will not allow them to continue running.

Governmental Outreach and The First Amendment

Even before the Twitter files incident, the Cambridge Analytica incident involving Facebook led to a situation where the United States Senate had to summon Mark Zuckerberg, the owner of the Facebook platform, on suspicion of espionage via the Facebook platform. Although it was clear from the start that Facebook was used as a facility for the espionage to take place, without too much blame attributable to Facebook itself, the hearing involving Mr. Zuckerberg shows a worrying intent of the US Senate to gain a foothold into the Facebook company by applying pressure on Mr. Zuckerberg to cooperate with them. However, in theory, any intervention of the government into a privately owned business, especially if not a temporary arrangement, is more or less a violation of the First Amendment and a way to fence in free-speech laws. Many times during the hearing, Mr. Zuckerberg agrees to jointly work with the US Senate, which is a de-facto intervention into private sector affairs - in fact, going the whole way, it is surprising that Mr. Zuckerberg did not ask the US government for subsidies because, as far as the Facebook company goes, it belongs entirely to Mr. Zuckerberg and the shareholders and is not classified as a "public utility". In case the Facebook company were to be reclassified as a "public utility" such that the government would have the legal leverage to intervene and dictate policy, then the Facebook company should also receive state subventions and payments from the US government. The whole incident can be set next to Jack Ma and the experience with the Alibaba Group company that he created only to give it up just when it was made profitable, which seems to share some similitude with the Facebook event but in a jurisdiction where free speech is not as emphasized.

Of course, after the acquisition of the Twitter company by Mr. Elon Musk, it was determined that Twitter had collaborated with the Federal Bureau of Investigation (FBI) in order to push or pull various narratives, including in connection to the 45th president, Mr. Donald Trump, such that it was made clear that governmental intervention in private affairs does exist and is even pipelined.

With that said, and pertaining to the topic of "the cloud", the important takeaway lesson here is that online services, even the minor ones that are not megalodon businesses, forums and other spaces are not all held under the same sort of scrutiny, and that "the cloud" or "the Internet" only grants the same level of protection as the customer or Internet surfer takes for themselves. Historically, there have been many services that require private details to sign up, and the fact that such services run "in the cloud" should convey a degree of suspicion rather than more trust.

Frequently Asked Questions

- Is privacy dead?

Obviously not; quite on the contrary, legally speaking citizens have more freedoms and in particular more claimable rights pertaining to privacy, all of these being a direct consequence of the European G.D.P.R., the privacy rights in California and others that quickly followed. The phrase "privacy is dead" is a slogan, or rather a myth, that only reflects the stance of people working in the over-bloated "security industry" that have overextended their reach and are now trying to somehow normalize mass-surveillance and espionage. In other words, the US government would not utter "privacy is dead" but rather someone working for "Cambridge Analytica" would.

- What is "the cloud"?

Technically it means a network of computers that are interconnected for the purpose of providing a service (in historically accurate terms, "cloud" used to actually refer only to storage space, as in, hard-drive space provided by online services but in the era where "applications" take precedence over hardware, the notion "cloud" extends to services rather than hard storage).

- Is putting data in "the cloud" safe?

All major data broker companies, even Apple iCloud, Dropbox and other companies, have been hacked in the past decade within a short time-span, indicating that even if one were to assume that Apple would not collaborate with espionage agencies, the data would still not be safe because it would have been leaked. Otherwise, many of these companies have been under a lot of pressure to implement backdoors for the espionage industry in order for prosecutors to be able to take shortcuts by legalizing means of gathering evidence that are still illicit (ie: those that breach the 5th amendment, see the case study on United States vs. Apple MacPro Computer).

In short, no, and not at all; it is even naive, human nature being what it is, to assume that nobody would be curious to see what some other person has stored away. Typically, technically savvy people that use cloud services always transparently encrypt the data that ends up on the server (either by using loopback mounted encrypted drives, or similar). The same sort of judgement should apply as for espionage: the scrutiny of the storage medium being used should match (or exceed) the sensitivity of the documents being stored.

- Are there any state-level concerns about cloud services?

Not unless you want to run a tyranny.

Perhaps there is some tertiary lesson where people should be aware of the extents and bounds of private and common goods in order to be less trusting of stuff that is offered "freely".

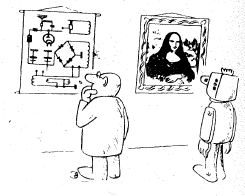

The Misunderstandings with Art Generated by Artificial Intelligence (AI) Algorithms

(author unknown, old illustration cca. 1980 from an electronics flyer)

A lot of criticism is extended to contemporary art in general due to the fact that computers have the capability to generate images on-demand based on previously trained models by supplying the algorithm with a phrase or keywords of what the resulting generated image should depict. Extreme criticism even considers that art, as a whole, is now futile and that AI-generated art will replace artists due to the rendered images that AI can create.

A machine learning model might be trained on a style of music to generate, or a style of painting, and requested to generate a new piece of art, yet in doing so, all the components that are a characteristic of the original piece of art are present. The fallacy of AI "generating" or "creating" can be reduced to telling a computer to use a random number generation algorithm to generate sequences of numbers, and then considering that the number sequences created are "unique".

Here are some related examples where the technical difficulty required to accomplish a task has been reduced considerably yet it made the domain flourish:

- The Unity framework is a framework that many independent game developers have been using to develop games. Many of these games are highly acclaimed, with the game developers themselves being self-taught. The games are often not developed from scratch, with many of the components being bought or existing as demonstrations; however, that does not prevent the games from having a great reception due to the uniqueness of the ideas expressed by the author.

- Manga or Anime, are very abstract compared to Renaissance paintings, where every detail is typically exposed, however Anime has become extremely popular and arguably the attribution is to the ideas expressed by the author and not necessarily to the drawings that are similar between various artists.

- The image above seems interesting and unique, but note that the person using the website to generate the image has provided a very, very, very precise description (encased by the rectangle on the right) of what they would like to see, such that it would be wrong to attribute the work of art to the engine generating the image. Similarly, some of our pages use a certain template format that uses the title "Realization" in order to describe the process of applying some theory and creating the product in practice.

- Newspapers hire artists to express ideas and irrespective of what the artist might agree with politically, the artwork is still attributed to the artist.

Imagine waking up one day and thinking up a cool movie plot or a cool or funny image but lacking the "technical skills" to draw or render the images, let alone being an influential person in Hollywood able to organize the making of a full-length movie. With AI and generative AI, it is now possible to create anything from images to a full-length movie because it decouples the rendition from the "idea", with the "idea" being mostly the purest form of creation. Sometimes, for example in computing and programming, very experienced programmers would claim that there is a certain "art" to writing code, or that there are programmers that write code a little differently due to their experience, at no penalty or even with better performance. In the programming case, the actual "realization" is considered to be the art, just like some particular painter might have a new technique, and just like the historical transitions between painting styles (pointillism, impressionism, abstract art, etc.). However, many times, and as a particularity of modern and post-modern art, the idea becomes the centerpiece rather than "the skill required to represent the image or the idea in an artist's head". Ultimately, even in computer engineering, let's say in the domain of computer games and gaming, the actual models on the screen are usually either from an existing library or generated using the computer (frequently with assistance from already-existing generators, like "texture generators"), such that it would be unfair to say that AI-generated art is not original compared to 3D modelling. For this reason, for example, Wizardry and Steamworks have their own AI art subsection, named "AI assisted" because part of the artwork was created with AI whilst the rest was created manually.

Lots of people have creative impulses and artistic affinities, and providing AI and generative AI to them is equivalent to offering children pens to write with. It would be a shame to lose or pass up on some possible artworks just because the people coming up with the ideas do not have the physical capacity or technical knowledge to render the artworks in their imagination. Generally speaking, people that do not hold too much regard for AI or artwork assisted by computers are people of an older fabric, from back when both the realization and the idea were considered together, but ultimately that is a matter of exigence. In particular, a good idea does not necessarily imply that hardship must be expended in order to accomplish it, such that even if the artist did not sweat in laying out their imagination on a sheet of canvas, the idea that they would render with AI is still a valid creation.

Lastly, it must be said that for generations after and including Millennials, complaints about generative AI seem ironic considering that Millennials and "older Gen X" were the first to experience the phenomenon that was "electronic music" with most of that music being effectively computer-generated music. The Commodore Amiga, for example, was extremely famous to the point of still being used today to "generate music" - in particular the program "Protracker" and its many many variations and hacks was used because it can generate different tunes and then combine them together in a melody which became the ultimate DJ tool. There are entire concerts or mixtapes, ranging from crack intros to full symphonies written in Protracker on the Amiga even on YouTube to this date and all of it is generated by the computer with a human directing it.

Arguments against The Slop

One phrase that has been coined is "AI slop", in the sense that since generative AI became available and easily accessible to a wide audience with little skill required, people have been generating a lot of "poor quality" or "tasteless" creations that have been pushed everywhere, to social media and forums, or used as real paintings.

The argument is moot because the same criticism applies equally to, say, the spread of "computers" to the masses, or to having the Bible written in the native tongue so everyone can understand it. It's like having a pottery class with children where you know full well that there will be at least two of them that will sculpt a penis with balls just to be annoying, but ultimately that is the process of learning and it is a long term investment because one of those children might create something unique or particular.

The Copyright Problem

One other problem that is brought up more often than not is that generative AI generates images or videos by literally scanning already-existing art. In other words, it will not know how to draw a cat until it scans through a bunch of already-existing images containing cats, and even then the AI will only be able to draw cats "like" the cats that it scanned through.

With that being said, a matter of "obfuscated plagiarism" comes up where people would claim that an AI generated work is just a ripoff of some already-existing things that the AI has scanned through previously. The argument is moot as well because painters or artists do not necessarily create (draw, sculpt, etc) things that never existed before - clearly, not all artists are Sci-Fi artists that will end up additionally creating, say, spaceships that bear no similarity to anything that has ever been created before.

An old Aesop-ian tale goes along the lines of a homeless person eating bread outside a steak house and the restaurant owner comes out and claims that the beggar would have to pay for the smell that the homeless person is inhaling while eating the bread. Aesop comes along, listens to the claims of both parties and asks someone for a coin. He tosses the coin in the air and the coin falls to the floor, making the usual sound that a falling coin makes. Aesop turns to the restaurant owner and claims that if the homeless person used the smell to enhance his taste of the bread, then the homeless just paid for the smell with the sound of the coin.

Coming back, art is a very complex domain, with very, very many branches, up to the point of claiming that everything that you create is, in fact, art. There are no definite rules - the closest that it gets to "rules" is the Berne convention on copyright claiming that an artwork must be complex enough to carry a copyright; however, that rule is a "political" or "economic" rule, not a rule of artistry. One art-style, for example, that is valid in itself is the "collage", which means a collection of ideas more or less seen elsewhere. Trivially, it's tough not to see the innuendos in existing movies that allude to, say, the Red Army in Star Wars, references to Jesus and the last supper or other "tributes" or outright shared art between movies, such that setting the bar higher for AI and claiming that it should create something the world has never seen before is an unfair requirement relative to "human art". Historically-speaking, even painters had eras when they shared the same art-style, like Monet and Van Gogh!

The Politics of Game Difficulty Settings

Even though age-old trends have turned game difficulty settings into a vertical and competitive affair, the reality of the matter is that for most games the actual difficulty scales in terms of numerics and not really in terms of any other "difficulty". For people new to games in general, or for early adopters of the arcade, this might be a little strange, because games have always been presented as competitive. However, modern games have scaled in several dimensions by spanning multiple game archetypes (FPS, platformer, etc.) where the actual challenge does not necessarily consist of a numerical challenge where the best player just racks up arcade points. For example, in an action-RPG, a game archetype that involves both critical thinking, for the puzzles, and some reaction speed, for the fights, cranking the difficulty setting up will only scale the game in terms of fights and very rarely, if ever, in terms of the difficulty of the puzzles (this is due to the puzzles being hard-coded such that the game developers can ensure there is a solution and the players do not end up stuck).
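As a minimal sketch of what "scaling in terms of numerics" can look like, the following assumes a hypothetical game where the difficulty setting is nothing more than a multiplier applied to enemy statistics, while the puzzle solution is hard-coded. The multipliers, the `Enemy` type and the puzzle itself are made up for illustration and are not taken from any real game engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Enemy:
    name: str
    health: int
    damage: int


# Hypothetical multipliers; real games pick their own values.
DIFFICULTY_MULTIPLIER = {"Easy": 0.5, "Normal": 1.0, "Hard": 2.0}

# The puzzle solution is hard-coded so that the designers can
# guarantee that a solution always exists.
PUZZLE_SOLUTION = ("red", "green", "blue")


def scale_enemy(enemy: Enemy, difficulty: str) -> Enemy:
    """Fights get harder or easier purely by multiplying numbers."""
    factor = DIFFICULTY_MULTIPLIER[difficulty]
    return Enemy(enemy.name, round(enemy.health * factor), round(enemy.damage * factor))


def puzzle_solved(attempt, difficulty: str) -> bool:
    """The difficulty argument is accepted but deliberately ignored:
    the puzzle is exactly the same on every setting."""
    return tuple(attempt) == PUZZLE_SOLUTION


if __name__ == "__main__":
    boss = Enemy("boss", health=100, damage=12)
    for setting in DIFFICULTY_MULTIPLIER:
        print(setting, scale_enemy(boss, setting), puzzle_solved(["red", "green", "blue"], setting))
```

Under these assumptions, "Hard" only means that the same boss has to be hit more times, which is a matter of numbers rather than of a different kind of challenge.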

Another newer trend is the appearance of players that seek out save games of games that have already been completed, and this pattern has come under some political scrutiny. Leisure aside, there are many reasons for looking for saved states within a game that has been entirely or partially completed by another player, and here are some examples:

- remembering a scene within a game that has already been played, such that playing the game again just to get to that scene is overkill,

- multiplayer and LAN games where players do not care too much about playing the actual story-line, but would rather have fun in a LAN party,

- etc

Perhaps related to the first paragraph, and in terms of game design, most of the time a good measure of the "original feel"™ of a game is to play it on its default difficulty, which most of the time is labeled as "Normal". This assumes that the game designer considered that the actual feel of a game, as it should be perceived by the player, lies within the "Normal" difficulty setting, and under that assumption, going either lower or higher might have the effect of disrupting some of the design ideas of the game (which boss? but that one was easy!). Sometimes, game designers read too much into game politics, or end up swayed by people that are oftentimes even less versed than them, and very often get the bad idea to remove the "Normal" difficulty setting.

Naturally, listening to the audience is a good thing, or used to be a good thing, but that was right up until very active interest groups or online mobs, similar to lobbies, appeared and invaded the computer game world, either due to its potential in generating returns or for the sake of espionage and gathering data on players, and finally ended up pulling strings with the community, not really for the betterment of the game or the community at large but for their own particular focused interest.

Regardless of that, it is important to realize that computer games are somewhat more complex and nuanced than other arenas and that reducing a game to just accumulating points just does not apply across the board for all computer games.

High Energy, Low Efficiency vs. Low Energy, High Efficiency

In terms of computing, "more power" does not necessarily lead to better performance because better performance does not necessarily scale with computing power. As an example, given two well-chosen computers, one much more powerful than the other, and with both computers given a problem to solve, depending on how the computers solve the problem, the much less powerful computer might outperform the much more powerful one. This is very trivial for a computer scientist but, for example, a bubble sort with $O(n^2)$ time complexity could be running on the very powerful computer and a merge sort or quick-sort with $O(n \log n)$ time complexity (on average, in the case of quick-sort) could be running on the less powerful computer, such that beyond a certain amount of numbers the weak computer running the more efficient algorithm will always outperform the very powerful computer. This relies on the fact that bubble sort has a quadratic asymptotic complexity, where each additional element adds an amount of work proportional to the size of the input, whereas the more efficient, linearithmic algorithm will, in fact, require much, much less power to complete the next step.
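The effect can be sketched by counting comparisons instead of measuring time. The sketch below assumes a hypothetical "powerful" computer that performs ten times more comparisons per unit of time than the "weak" one (the constant `FAST_SPEEDUP` is made up for illustration), runs bubble sort on the powerful computer and merge sort on the weak one, and compares the resulting simulated running times.

```python
# Assumption for illustration: the powerful computer performs
# ten times more comparisons per unit of time than the weak one.
FAST_SPEEDUP = 10


def bubble_sort_steps(values):
    """Bubble sort a copy of the input; return (sorted_list, comparisons)."""
    items = list(values)
    comparisons = 0
    for end in range(len(items) - 1, 0, -1):
        for i in range(end):
            comparisons += 1
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
    return items, comparisons


def merge_sort_steps(values):
    """Merge sort a copy of the input; return (sorted_list, comparisons)."""
    items = list(values)
    if len(items) <= 1:
        return items, 0
    mid = len(items) // 2
    left, left_count = merge_sort_steps(items[:mid])
    right, right_count = merge_sort_steps(items[mid:])
    merged, comparisons = [], left_count + right_count
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


def simulated_times(values):
    """Time units needed by (powerful computer with bubble sort,
    weak computer with merge sort) for the same input."""
    _, bubble = bubble_sort_steps(values)
    _, merge = merge_sort_steps(values)
    return bubble / FAST_SPEEDUP, merge


if __name__ == "__main__":
    for n in (16, 100, 1000):
        powerful, weak = simulated_times(range(n, 0, -1))
        winner = "weak computer" if weak < powerful else "powerful computer"
        print(f"n={n}: powerful={powerful:.0f} units, weak={weak} units, faster: {winner}")
```

For very small inputs the powerful computer still wins on raw speed, but since its work grows quadratically while the weak computer's work grows only as $n \log n$, there is always an input size beyond which the weak computer finishes first, regardless of how large the speed-up factor is chosen.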

With that being said, current technology, after the Internet boom, has mostly evolved along the "high efficiency and low power" principle where new algorithms have been derived in order to solve complex problems efficiently. On the other hand, paradigms like machine learning or AI are solutions that require a lot of power and have a very low efficiency, where any work accomplished can be seen more or less as a brute-force search guided by previous feedback (in the simplest case, a linear regression) that manages to solve problems that are not easy to solve otherwise. The problem is that "high efficiency and low power requirement" solutions are very difficult to discover because they require fundamental research relying on formal and natural sciences (like mathematics), whereas "low efficiency and high power requirement" solutions are accessible to anyone and "only" require vast amounts of power.

Obtaining one or the other solution relies on different economic patterns: "high efficiency and low power requirement" solutions require an investment in people or in the sciences in general, whereas "low efficiency and high power requirement" solutions require an investment in natural resources to provide the necessary power.

The distinction can even be observed in the game industry when people complain that some game is "poorly optimized", or, in other words, that the game does not provide any quality that would justify its high power requirements. The usual conclusion is that the game was mass-produced by programmers who are not necessarily experienced in their domain, or that production costs were reduced by skipping optimizations and quality checks, with the tradeoff of bumping up the game's requirements. More often than not, companies that develop hardware, and in particular graphics cards, use software obsolescence to force people to upgrade their hardware. With every new game there is less and less innovation in terms of the game engine itself (game engines such as Unity being already made and only requiring "artwork" to be added), such that many games end up having overly high requirements even though they could be played on older graphics cards, had proper performance scaling been implemented.

Website Consent Forms, Answering Machines and Disregarding the Ethos of Data Privacy Laws

With the inception of generalized data-privacy laws, both in the European Union, as the "General Data Protection Regulation" (G.D.P.R.), and in the United States, with counterparts such as the "California Consumer Privacy Act" (C.C.P.A.), it is interesting to notice how these laws, and even the whole intent behind them, seem to be summarily bypassed.

Whilst many websites adapted by adding data-privacy banners and popups, and even went as far as to allegedly allow the configuration of data-collection on their site, it is the case that hardly any of these websites have a "direct" option to opt out of the data collection straight from the main data-privacy prompt. Counter-intuitively, it seems more like a user is expected to at least partially consent to data collection and only thereafter raise the issue of data-privacy and request the deletion of their data. The vanity exhibited by these websites, in expecting their users to actually read through a whole policy and to "configure their data preference" for one website amongst billions, when most visitors leave a website within moments of arriving (hence the high bounce rates), gave rise to browser add-ons like "Cookie Cutter GDPR Auto-Deny" or consent-o-matic that contain a set of rules that automate the completion of privacy forms, typically following the pattern of least-consent.

Even though the privacy laws in effect seem sound, the whole gist of the data-privacy laws, namely empowering the end-user to control the spread of their own data, seems completely disregarded when for all of these websites the user absolutely must consent at least partially and there is no easy way to not consent at all to any data collection. In that sense, consenting to data privacy banners has been turned into an all-or-nothing deal where the user is expected to at least agree to some subset of data collection, or else the user is completely barred from the website, turning the ordeal into a classic legalistic suicide pact. From a technical standpoint, it is of course possible to create a website that does not collect any data. For the most part, data consent forms have been classically justified through the use of "browser cookies"; however, there is no implicit equivalence between cookies as a means of storing website parameters and intrinsically personal and identifiable data. In other words, data stored in cookies does not necessarily have to contain identifiable data, with cookies even in the classic sense being used as "website settings", like the state of a menu that folds and unfolds.
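As a minimal sketch of a cookie used purely as a "website setting", the following uses Python's standard `http.cookies` module. The cookie name `menu_state`, its values and the helper function names are hypothetical, chosen only to mirror the folding menu mentioned above; the point is that nothing stored here identifies the visitor.

```python
from http.cookies import SimpleCookie


def build_settings_cookie(menu_state: str) -> str:
    """Return a Set-Cookie header value that stores only a UI preference.

    The cookie holds no user identifier, session token or tracking ID,
    just whether the (hypothetical) menu is folded or unfolded.
    """
    cookie = SimpleCookie()
    cookie["menu_state"] = menu_state
    cookie["menu_state"]["path"] = "/"
    cookie["menu_state"]["max-age"] = 60 * 60 * 24 * 30  # keep for 30 days
    return cookie["menu_state"].OutputString()


def read_menu_state(cookie_header: str, default: str = "expanded") -> str:
    """Read the preference back from a request's Cookie header."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    morsel = cookie.get("menu_state")
    return morsel.value if morsel is not None else default


if __name__ == "__main__":
    print(build_settings_cookie("collapsed"))
    print(read_menu_state("menu_state=collapsed"))
    print(read_menu_state(""))  # no cookie sent: fall back to the default
```

Every visitor who folds the menu ends up with the same cookie value, so the cookie by itself cannot be used to tell one visitor from another.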

Leaving the Internet aside, much of the same tone and pattern is to be found in the implementation of "answering" machines for companies: when a customer rings up the company, they are fed into an answering machine loop where, most of the time, the customer can request to speak to a representative of the company. When the customer chooses the option to speak to a representative, most answering machines inform the customer that the phone call can be recorded, justify that by claiming the recording might be used for the training of their employees, and make the case that by continuing the conversation and being put through to a representative, instead of hanging up the phone, the customer is deemed to have implicitly consented to being recorded.

The same also happens when the direction of the phone call is reversed and an employee of the company calls up a customer. Just like a court jester announcing the accomplishments and terms of visiting royalty, for some companies, when an employee rings a customer, the customer picks up and is greeted not by a human but by a pre-recorded voice that plays back a disclaimer, claiming amongst other terms that if the customer does not hang up, then the company considers that the customer has consented to being recorded. Only if the customer holds the line are they finally put through to speak with the employee calling them. One of the functions of a court jester, aside from entertaining royalty, was to act as some sort of crier by announcing the presence of other royalty when they visited the court, which often consisted of announcing the reputation of the visiting royalty, their spoils and also their terms.

In any case, these answering or customer triage machines do not allow disagreeing with being recorded, and the whole ordeal becomes "do-or-die" where, in case the customer disagrees with being recorded, they are expected to hang up the phone and pass up on the entire service. Clearly, an option could have been added along the lines of this sequence:

- please press 0 to be put through to customer support

- 0

- we would like to record phone calls for the purpose of [...], if you agree for this call to be recorded, please press 1, otherwise press 2

- 1 get in touch with support, while possibly being recorded,

- 2 get in touch with support without being recorded

and then both options would put the customer through to an employee, with the distinction that if they pressed "2" then the company would not record the conversation. This is so simple that it would make any rational person scratch their head as to why not all company answering machines are implemented this way, providing the possibility of opting out.
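The sequence above can be sketched in a few lines. This is only an illustration of the proposed menu, not the interface of any real telephony product: the `CallRoute` type and `route_call` function are hypothetical names, and treating silence or an unrecognized key as "do not record" is an added assumption in line with the idea that abstaining is not consenting.

```python
from dataclasses import dataclass


@dataclass
class CallRoute:
    connect_to_agent: bool  # is the caller put through to a human?
    record_call: bool       # may the conversation be recorded?


def route_call(keypresses: list[str]) -> CallRoute:
    """Walk the two-step menu: "0" for support, then "1" or "2" for recording."""
    keys = iter(keypresses)

    # Step 1: the caller must press 0 to reach customer support.
    if next(keys, None) != "0":
        return CallRoute(connect_to_agent=False, record_call=False)

    # Step 2: only an explicit "1" enables recording. Pressing "2", staying
    # silent or pressing anything else still connects the caller, unrecorded.
    choice = next(keys, None)
    return CallRoute(connect_to_agent=True, record_call=(choice == "1"))


if __name__ == "__main__":
    print(route_call(["0", "1"]))  # support, recorded
    print(route_call(["0", "2"]))  # support, not recorded
    print(route_call(["0"]))       # no answer to the prompt: not recorded
```

The service itself is never made contingent on the recording: both branches end with the customer talking to an employee, and the only thing the second keypress changes is a single flag.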

Business-wise, it is dubious that so many companies that purchased answering machines to sort customers according to the support they need would be all that hell-bent on recording the phone call rather than selling their product. It raises the question of whether companies at large really feel that way and data-collection is simply more important to them than selling products, or whether this "answering machine" pattern has just been normalized, with later versions passing down the same behavior without questioning it. It makes the world seem dystopian, although it might just be the case that these "answering machine services" are some bulk product that any generic company buys, and that, due to the generic handling of phone call recordings in a "do-or-die" fashion, the company comes across to its customers as some spy agency. And, with the same default answering machine template repeated across a large number of companies, it becomes some sort of universal truth that fuels the collective paranoia of people at large, who are led to believe that all these companies spy on them. It might just be that the combination of crassness, bad technology with low feedback, carelessness or apathy leads to this situation where all companies seemingly do not want to proceed unless they can record the phone call with their customers, which might seem plausible for smaller companies, but seems hardly likely for large companies and corporations that make a lot of money off selling their products or services just fine.

On the other hand, clearly, the legality of summary consent to having the phone call recorded is in doubt and carries the same issues as shrink-wrap licenses, where uninformed blanket-consent is conveyed by the customer to the company without the customer being made well aware of the consequences, rights and limitations of being recorded. Internet websites narcissistically display "consent forms", advertising just how compliant they are with data collection, yet fail to provide the simple fall-through option to not consent to any sort of data-collection. The very same applies to customer support answering machines that show off their compliance while the whole company service seems to be contingent on whether the customer can be recorded or not. Both of these technologies arguably violate privacy laws, as well as "hitting closer to home" in terms of contractual law by taking shrink-wrap licensing agreements for granted even though their legality is still disputed. Given the displayed behaviors, one can either assume that all companies have turned into spy agencies overnight, such that data collection is more precious to them than whatever product or service they have to offer, or that this behavior is a mishap inherited from a misunderstanding of the law by the people who implemented the technology (programming website consent forms, or programming the answering machines).

Ironically, the data-privacy laws made in Europe actually foresee a possible crass interpretation: within the text of the GDPR, the clauses specifically state that consenting, not consenting, as well as any other request and response, should be made as easy and simple as possible and should not be a difficult task either in terms of work or technical expertise. This does not seem to materialize when navigating complex partial-consent forms on many websites, with a labyrinthine maze of options whose effects a website visitor would not even know. Even in terms of technicalities, namely, basic principles of computer engineering (specifically UI design), not having a fall-through "leave me alone"-button violates the principle of least surprise: a computer user would expect to click "Cancel" or the "X" button or whatever other button to move the consent form out of the way. However, the only way to quickly get rid of the consent form is to wholesale agree to all data processing, which seems less-than-incidental when the default should be no consent at all (i.e., abstaining is not consenting, or even logically, the absence of a statement can imply anything but not something specifically)!
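The "abstaining is not consenting" default is simple to express in code. The sketch below is hypothetical throughout: the purpose names and the action strings are made up for illustration and are not taken from any real consent-management product.

```python
# Hypothetical data-processing purposes a banner might ask about.
PURPOSES = ("analytics", "advertising", "personalization")


def resolve_consent(action: str, selected: frozenset = frozenset()) -> dict:
    """Translate what the visitor did with the banner into per-purpose consent."""
    if action == "accept_all":
        return {purpose: True for purpose in PURPOSES}
    if action == "save_selection":
        return {purpose: purpose in selected for purpose in PURPOSES}
    # Fall-through: "reject_all", "cancel", clicking the "X", or simply
    # ignoring the banner all mean the same thing, which is no consent.
    return {purpose: False for purpose in PURPOSES}


if __name__ == "__main__":
    print(resolve_consent("close"))
    print(resolve_consent("save_selection", frozenset({"analytics"})))
```

With the denial as the fall-through branch, dismissing the form behaves the way a user would expect from any other dialog, and consent has to be an affirmative act rather than the path of least resistance.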

If one were to take the official statements for granted and follow the displayed predicament logically, one would come to the conclusion that, for most companies, their imperative to train employees greatly outweighs their imperative to sell their products or services, which sounds very stupid from a business perspective and makes the companies look more like schools than businesses. It is imaginable, however, that most companies do not bother with a technical department that would derive their own in-house solutions for either websites or phone call answering machines, and that even large companies outsource the problem to other companies that provide website and answering machine solutions. The effect is akin to the problems of outsourcing security to black-box companies like Cloudflare and then having to deal with the inherited issues of the company that is being outsourced to, like incompetence, resource limitations, misunderstanding of the laws in effect, etc., which, more often than not, for practical purposes, lead to the loss of business rather than to a few extra ounces of security.

Messing with Domain Name Resolution Servers

One of the patterns that repeats consistently is that of system administrators with various political leanings preferring one domain name server over another, such that they change the default domain name server pushed by the ISP as part of the IP address assignment to some other domain name server. Messing with the domain name resolution server belongs to the set of arcane "black magic" adopted by hackers that requires "belief", or a belief system, for example, "the CIA is out to get us all!", rather than being a change that would result in more security or better performance.

Before explaining the politics behind the choices and also why the choice is often arbitrary, here is a refresher on how domain resolution works.