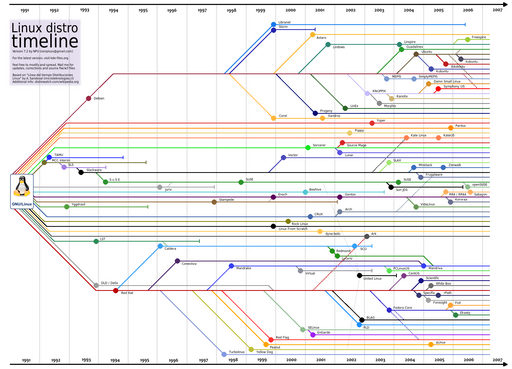

Distribution Timeline

Initd Skeleton Script

#!/bin/bash
case "$1" in
    start)
        # start DAEMON here
        ;;
    stop)
        PID=`ps ax | grep '[D]AEMON' | awk '{print $1}'`
        [ -n "$PID" ] && kill $PID
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
        ;;
esac
exit 0

where DAEMON is the name of the daemon that the script manages. Wrapping its first letter in brackets, as in '[D]AEMON', keeps grep from matching its own process.

Basic Firewall

#!/bin/sh
LOCAL_IF="eth1"
NET_IF="eth0"

iptables -F
iptables -t nat -F
iptables -X
iptables -P INPUT DROP
# Accept local network
iptables -A INPUT -i $LOCAL_IF -j ACCEPT
# and loopback.
iptables -A INPUT -i lo -j ACCEPT
# accept established, related
iptables -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT
# masquerade
iptables -t nat -A POSTROUTING -o $NET_IF -j MASQUERADE
# ip-forwarding
echo "1" >/proc/sys/net/ipv4/ip_forward

Stop Udev from Renaming Interfaces

On Debian-like Linux systems, including Ubuntu, Udev by default keeps track of the MAC addresses of network interfaces. If you replace a network card, the operating system gives the new card the next interface number (eth1 instead of eth0, for instance) instead of naming just the cards that it finds in the computer at that time. To stop this behavior, the generated Udev rules file can be emptied:

echo "" > /etc/udev/rules.d/70-persistent-net.rules

After a reboot, Udev will stop renaming the interfaces (as it should have done from the start).

A different way to stop Linux from changing the interface names is to append:

net.ifnames=0

to the kernel command line (for grub, by editing /etc/default/grub, adding it to GRUB_CMDLINE_LINUX_DEFAULT and then running update-grub).

Crontab Diagram

* * * * * command to execute
| | | | |
| | | | +-- day of week (0-7) (Sunday=0 or 7)
| | | +----- month (1-12)
| | +-------- day of month (1-31)
| +----------- hour (0-23)
+-------------- minute (0-59)

Get IP of Interface

The following command will return the IP address of the interface eth0:

/sbin/ifconfig eth0 | grep "inet addr" | awk -F: '{print $2}' | awk '{print $1}'
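Newer releases of net-tools print inet 192.168.1.10 rather than inet addr:192.168.1.10, and many systems only ship iproute2, so the pipeline above may return nothing there. A minimal sketch that parses the one-line output of ip -o -4 addr show instead. The function name ipv4_from_ip_output is hypothetical:

```shell
#!/bin/bash
# Read the output of `ip -o -4 addr show` on stdin and print each IPv4
# address without its /prefix length, one per line.
ipv4_from_ip_output() {
    awk '{
        for (i = 1; i < NF; i++)
            if ($i == "inet") {
                split($(i + 1), addr, "/")
                print addr[1]
            }
    }'
}
```

Usage: ip -o -4 addr show dev eth0 | ipv4_from_ip_output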

Routing Packets Out of the Same Interface

A common problem on Linux is that a server's replies to packets that arrived on one interface do not necessarily leave through that same interface. To fix this, set up one routing table per interface by editing /etc/iproute2/rt_tables and adding, for example, two tables:

100 table_a
101 table_b

Then, packets can be routed out of the same interface that they came in from using:

ip route add default via $GATEWAY_A dev $INTERFACE_A src $IP_A table table_a
ip rule add from $IP_A table table_a

and likewise for the second interface, with its own gateway, address and table_b.

where:

- $GATEWAY_A is the gateway IP for the interface,
- $INTERFACE_A is the interface that the packets come in from,
- $IP_A is the IP address assigned to the interface.
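With several uplinks, the same pair of commands is repeated for each interface, each time with its own table, and the rule must point at the table that holds that interface's default route. As a minimal sketch, the hypothetical helper below only prints the commands for one interface, so they can be reviewed before being run as root. The addresses in the usage line are documentation examples:

```shell
#!/bin/bash
# Print, without executing, the policy-routing commands that send replies
# from an interface's address back out through that interface.
# Arguments: interface, its IP address, its gateway, routing table name.
policy_route_cmds() {
    local iface=$1 ip=$2 gw=$3 table=$4
    printf 'ip route add default via %s dev %s src %s table %s\n' \
        "$gw" "$iface" "$ip" "$table"
    printf 'ip rule add from %s table %s\n' "$ip" "$table"
}
```

For example, policy_route_cmds eth0 192.0.2.10 192.0.2.1 table_a followed by policy_route_cmds eth1 198.51.100.10 198.51.100.1 table_b prints both sets of commands. Once they look right, pipe them to sh as root.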

Prelink

To prelink all binaries with the prelink tool:

prelink -amR

To restore:

prelink -au

Enable Directory Indexing

tune2fs -O dir_index /dev/sda2

Where /dev/sda2 contains an Ext3 filesystem.

Get Top 10 CPU Consuming Processes

ps -eo pcpu,pid,user,args | sort -k 1 -rn | head -10

Recover Linux Password

Made by Blagovest ILIEV and adapted to GIF animation.

Recompile Custom Kernel for Debian

After downloading the source and applying any necessary patches, issue:

make menuconfig

and configure the kernel. After that issue:

make-kpkg --initrd kernel_image

to make a .deb package which will be placed one level up from the kernel source directory.

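Note that building an initrd image is essential with a stock Debian configuration, because it contains the drivers needed to mount the root filesystem during boot. A kernel recompiled manually, the old way, without an initrd may fail to boot unless those drivers are built into the kernel.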

Tripwire Regenerate Configuration Files

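After modifying the policy file /etc/tripwire/twpol.txt (or the configuration file twcfg.txt), the following script can be used to regenerate the signed policy and configuration files and to reinitialise the database:

#!/bin/sh -e
# the key and text files are relative to /etc/tripwire
cd /etc/tripwire
twadmin -m P -S site.key twpol.txt
twadmin -m F -S site.key twcfg.txt
tripwire -m i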

Create UDEV Symlink

For example, to link any /dev/grsec* device to /dev/grsec2, add a file at /etc/udev/rules.d/60-grsec-compatibility.rules with the following contents:

KERNEL=="grsec*", SYMLINK+="grsec2"

Inherit Group Ownership

Suppose you have a parent directory upper, and that the directory upper is group-owned by a group called maintain.

You want all new directories and files under the parent directory upper, regardless of who creates them (e.g. root), to be group-owned by maintain.

This can be accomplished by setting the setgid flag on the parent directory upper:

chmod g+s upper
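chmod g+s only changes upper itself. Files and directories that already exist below it keep their current group, and the existing directories lack the flag. A sketch for retrofitting an existing tree, assuming GNU chgrp and find. The function name inherit_group is hypothetical:

```shell
#!/bin/bash
# Hand an existing tree over to a group and set the setgid flag on every
# directory in it, so that anything created later below it inherits that
# group. Arguments: directory, group name. Needs root, or an owner of the
# files who is also a member of the group.
inherit_group() {
    local dir=$1 group=$2
    chgrp -R -- "$group" "$dir" || return 1
    find "$dir" -type d -exec chmod g+s {} +
}
```

Usage: inherit_group upper maintain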

Guess Module Configuration for Compiling Kernels

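The localmodconfig target generates a configuration that enables only the modules that are currently loaded (as listed by lsmod). This makes it a convenient way to auto-detect the modules the machine needs: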

make localmodconfig

Create Bootable USB

For a disk with the following partition layout:

first install syslinux and then issue:

mkdosfs -F32 /dev/sdc1

to format /dev/sdc1 as FAT32.

Now copy the MBR file to the drive:

dd if=/usr/share/syslinux/mbr.bin of=/dev/sdc

Finally, install syslinux:

syslinux /dev/sdc1

The last step is to make the partition bootable with fdisk (run fdisk /dev/sdc, press a to toggle the bootable flag and then w to write the changes).

Get Page Size

getconf PAGE_SIZE

Measuring Performance

This can be accomplished with:

dstat -t -c 5 500

where -t adds a time column and -c shows CPU statistics. The values 5 and 500 are the delay between updates in seconds and the number of updates to print.

The output is:

----system---- ----total-cpu-usage----
     time     |usr sys idl wai hiq siq
11-02 18:33:24|  3   1  95   0   0   0
11-02 18:33:29| 14   3  83   0   0   0

Other options are also available:

| Flag | Meaning |
|---|---|
| -c | CPU |
| -d | disk (read, write) |
| -g | page stats (in, out) |
| -i | interrupts |
| -l | load (1min, 5min, 15min) |
| -m | memory (used, buffers, cache, free) |
| -n | network (receive, send) |
| -p | process stats (runnable, uninterruptible, new) |
| -r | I/O request stats (read, write) |
| -s | swap stats (used, free) |
| -y | system stats (interrupts, context switches) |
| --aio | asynchronous I/O |
| --fs | filesystem (open files, inodes) |
| --ipc | IPC stats (queues, semaphores, shared memory) |
| --lock | file locks (posix, flock, read, write) |
| --raw | raw sockets |
| --socket | sockets (total, tcp, udp, raw, ip-fragments) |
| --tcp | tcp stats (listen, established, syn, time_wait, close) |
| --udp | udp stats (listen, active) |
| --unix | unix stats (datagram, stream, listen, active) |
| --vm | vm stats (hard pagefaults, soft pagefaults, allocated, free) |

Renumber Partitions

To renumber partitions we first dump the table using sfdisk:

sfdisk -d /dev/sda > sda.table

Then, edit sda.table to change the partition entries:

# partition table of /dev/sda
unit: sectors

/dev/sda1 : start=     2048, size=  4194304, Id=82
/dev/sda2 : start=        0, size=        0, Id= 0
/dev/sda3 : start=  4196352, size= 47747546, Id=83, bootable

In this case, we will delete the line starting with /dev/sda2 and rename /dev/sda3 to /dev/sda2:

# partition table of /dev/sda
unit: sectors

/dev/sda1 : start=     2048, size=  4194304, Id=82
/dev/sda2 : start=  4196352, size= 47747546, Id=83, bootable

Next, we restore the modified table:

sfdisk /dev/sda < sda.table

Show Socket State Counters

netstat -an | awk '/^tcp/ {A[$(NF)]++} END {for (I in A) {printf "%5d %s\n", A[I], I}}'

Scrolling Virtual Terminal

To scroll the virtual terminal up and down use the keys Shift+Page↑ and Shift+Page↓. In case you are using a Mac keyboard without Page↑ or Page↓, then the keys Shift+Fn+↑ and Shift+Fn+↓ should achieve the scrolling.

Set Date and Time

date can be used to set the system clock; however, hwclock must also be used to set the hardware clock. First, set the date using date:

date -s "1 MAY 2013 10:15:00"

or in two commands using formatting characters; first the date:

date +%Y%m%d -s "20130501"

then the time:

date +%T -s "10:15:00"

After that, the hardware clock has to be set (the hardware clock is battery-powered and runs independently of the Linux system time and of other hardware). To set the hardware clock from the system clock (which we have just set above), issue:

hwclock --systohc

Or, as an independent command, to set the hardware clock to local time:

hwclock --set --date="2013-05-01 10:15:00" --localtime

or, for UTC:

hwclock --set --date="2013-05-01 10:15:00" --utc

Load Average

The load-average is included in the uptime command:

09:48:35 up 8 days, 7:03, 5 users, load average: 0.24, 0.28, 0.25

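The load average numbers are not normalised to the number of CPUs. They count the processes that are running or waiting to run (on Linux, also those in uninterruptible sleep), averaged over the last 1, 5 and 15 minutes. For example, on a quad-core CPU a load average of 4 means that all 4 cores are busy on average, while values above 4 mean that processes are queuing for CPU time. Dividing the load average by the number of CPUs therefore gives a rough fraction of the total CPU power that is being utilised.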
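Because the figures are not divided by the number of CPUs, comparing machines is easier after normalising. A minimal sketch that reads the 1-minute value from /proc/loadavg and the CPU count from nproc. The function name and its optional override arguments (handy for testing) are hypothetical:

```shell
#!/bin/bash
# Print the 1-minute load average divided by the number of available CPUs.
# Optional arguments override the load value and the CPU count.
load_per_cpu() {
    local load=${1:-$(cut -d' ' -f1 /proc/loadavg)}
    local cpus=${2:-$(nproc)}
    awk -v l="$load" -v c="$cpus" 'BEGIN { printf "%.2f\n", l / c }'
}
```

A result of roughly 1.00 means the CPUs are fully busy on average. Anything higher means work is waiting.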

Override DHCP Client Nameservers

In most Linux distributions, dhclient is responsible for acquiring network parameters from upstream DHCP servers. Its configuration (typically /etc/dhcp/dhclient.conf) can be changed so that it does not pull name-servers from DHCP and uses static ones instead. First, add a directive that prepends the static name-servers:

prepend domain-name-servers 1.1.1.1, 2.2.2.2;

where 1.1.1.1 and 2.2.2.2 are the IP addresses of the name-servers.

Next, the domain-name-servers and domain-search directives should be removed from the request clause, the result looking like:

request subnet-mask, broadcast-address, time-offset, routers,
        domain-name, host-name,
        dhcp6.name-servers, dhcp6.domain-search,
        netbios-name-servers, netbios-scope, interface-mtu,
        rfc3442-classless-static-routes, ntp-servers;

After a restart, dhclient will write the specified name-servers to /etc/resolv.conf and ignore the name-servers and search domains sent by the DHCP server.

Using the Temporary Memory Filesystem

The temporary memory filesystem (tmpfs) can be used to store files temporarily. Its contents are lost on the next reboot. This is helpful, for example, for log files or caches that need not survive a reboot, and it reduces the number of writes to the hard drive.

Adding this entry to /etc/fstab will, for example, mount polipo's cache directory in RAM:

tmpfs /var/cache/polipo tmpfs nodev,noexec,nodiratime,noatime,nosuid,size=5G,async 0 0

limiting the filesystem to at most 5G. tmpfs only consumes memory for the files actually stored in it.

Dynamically Limiting a Process's CPU on Network Idling

This function works together with iptables and the IDLETIMER target to limit the CPU consumption of a process (commonly a daemon) while the process receives no incoming traffic.

#!/bin/bash
###########################################################################
##  Copyright (C) Wizardry and Steamworks 2014 - License: GNU GPLv3      ##
##  Please see: http://www.gnu.org/licenses/gpl.html for legal details, ##
##  rights of fair usage, the disclaimer and warranty conditions.        ##
###########################################################################
# The function throttles or releases the named process passed as parameter
# to the function, provided that iptables has been set up to create an idle
# timer for the named process passed as the parameter to this function.
#
# For this function to work properly, you should issue:
#   iptables -A INPUT -p tcp --dport 8085 -j IDLETIMER \
#     --timeout 60 --label $process_name
# where $process_name is the parameter passed to this function
#
# This script is best called via crontab to periodically check whether a
# process's network traffic is stale and to throttle the process if it is.
function idlecpulimit {
    # path to the cpulimit daemon
    local cpulimit=/usr/bin/cpulimit
    # percent to throttle to accounting for multiple CPUs
    # effective throttle = (CPUs available) x throttle
    local throttle=1
    # get the car and cdr of the daemon name; grepping for "[c]dr"
    # keeps grep from matching its own process
    local car=`echo $1 | cut -c 1`
    local cdr=`echo $1 | cut -c 2-`
    # get the PID of the daemon if it is running
    local daemon=`ps ax | grep "[$car]$cdr" | awk '{ print $1 }'`
    if [ -z "$daemon" ]; then
        # just bail if it is not running
        return
    fi
    # get the PID of the cpulimit daemon for the process
    local cpulimit_PID=`ps ax | grep '[c]pulimit' | grep -w "$daemon" | awk '{ print $1 }'`
    case `cat /sys/class/xt_idletimer/timers/$1` in
        0)
            # idle: throttle the daemon if it is not throttled yet
            if [ -z "$cpulimit_PID" ]; then
                $cpulimit -l $throttle -p $daemon -b >/dev/null 2>&1
            fi
            ;;
        *)
            # active: release the daemon by stopping cpulimit
            if [ -n "$cpulimit_PID" ]; then
                kill -s TERM $cpulimit_PID >/dev/null 2>&1
            fi
            ;;
    esac
}

As an example, suppose you had a daemon named mangosd that is active when it has inbound connections on port 8085. In that case, you would first add a firewall rule:

iptables -A INPUT -p tcp --dport 8085 -j IDLETIMER --timeout 60 --label mangosd

which creates a timer at /sys/class/xt_idletimer/timers/mangosd. The timer is reset to 60 seconds whenever matching packets arrive and reads 0 once the connection has been idle for that long.

After that, you would create a script containing the function above and call it at the end:

function idlecpulimit { ... }
idlecpulimit mangosd

The script should then be run every minute from cron (for example, with a * * * * * entry in the crontab). It will then limit the CPU of the daemon while the daemon is idle and release the limit once it receives traffic again.

Rescue Mount

Suppose that you have made a configuration error and you need to boot from a LiveCD and chroot to the filesystem in order to repair the damage. In that case, you will find that you will need the proc, dev and sys filesystems. These can be mounted by using the bind option of mount:

mount -o bind /dev /mnt/chroot/dev
mount -o bind /sys /mnt/chroot/sys
mount -o bind /proc /mnt/chroot/proc

This assumes that the damaged filesystem is mounted on /mnt/chroot. After the filesystems are mounted, you can chroot to the filesystem and run commands such as update-grub:

chroot /mnt/chroot

Get Communicating MAC Addresses

tcpdump -i eth0 -s 30 -e | cut -f1 -d','

where eth0 is the interface.

Kernel Stack Traceback

For hung processes, the stack traceback can show where the processes are waiting. CONFIG_MAGIC_SYSRQ must be enabled in the kernel for stack tracebacks to work. If kernel.sysrq is not set to 1 with sysctl, then run:

echo 1 > /proc/sys/kernel/sysrq

Next, trigger the stack traceback by issuing:

echo t > /proc/sysrq-trigger

The results can be found on the console or in /var/log/messages.

Check Processes Listening on IPv6 Addresses

netstat -tunlp |grep p6

Disable IPv6

First edit /etc/hosts to comment out any IPv6 addresses:

# The following lines are desirable for IPv6 capable hosts
#::1     ip6-localhost ip6-loopback
#fe00::0 ip6-localnet
#ff00::0 ip6-mcastprefix
#ff02::1 ip6-allnodes
#ff02::2 ip6-allrouters

After that, if you are using grub, edit /etc/default/grub and add:

ipv6.disable=1

to the list following GRUB_CMDLINE_LINUX_DEFAULT.

In case you use lilo, edit /etc/lilo.conf instead and modify the append line to include ipv6.disable=1.

Issue update-grub or lilo to apply those changes.

You can also add a sysctl setting:

net.ipv6.conf.all.disable_ipv6 = 1

to /etc/sysctl.d/local.conf.

Additionally, in case you are running a system with a bundled MTA such as exim, you should probably keep it from binding to IPv6 addresses.

For exim, edit /etc/exim4/update-exim4.conf.conf and change the dc_local_interfaces to listen only on IPv4:

dc_local_interfaces='127.0.0.1'

and then add:

# Disable IPv6
disable_ipv6 = true

in both /etc/exim4/exim4.conf.template and /etc/exim4/conf.d/main/01_exim4-config_listmacrosdefs and run update-exim4.conf followed by a restart of the service.

Otherwise you might receive the error: ALERT: exim paniclog /var/log/exim4/paniclog has non-zero size, mail system possibly broken failed!.

Clear Semaphores

ipcs can be used to display all semaphores:

ipcs -s

to remove a semaphore by id, issue:

ipcrm sem 2123561

To clear all semaphores for a user, for example, for apache (as user www-data on Debian):

ipcs -s | grep www-data | awk '{ print $2 }' | while read i; do ipcrm sem $i; done
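grep www-data matches the name anywhere on the line. A slightly stricter sketch matches only the owner column, which is the third column of ipcs -s after key and semid. The helper name sem_ids_for_user is hypothetical:

```shell
#!/bin/bash
# Read `ipcs -s` output on stdin and print the semid of every semaphore
# array whose owner column equals the given user name.
sem_ids_for_user() {
    awk -v user="$1" '$3 == user { print $2 }'
}
```

Usage: ipcs -s | sem_ids_for_user www-data | while read -r id; do ipcrm -s "$id"; done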

WatchDog Error Messages

Before the watchdog restarts the system, it fires off an email indicating the problem, for example:

Message from watchdog: The system will be rebooted because of error -3!

The error codes are listed in the watchdog man page:

- -1: The system will reboot. Does not indicate an error.
- -2: The system will reboot. Does not indicate an error.
- -3: The load average has reached its maximum specified value.
- -4: Temperature too high.
- -5: /proc/loadavg contains no data or not enough data.
- -6: The given file was not changed in the given interval.
- -7: /proc/meminfo content.
- -8: Child process was killed by a signal.
- -9: Child process did not return in time.
- -10: User specified.

Codel over Wondershaper

On recent Linux distributions, the CoDel queueing discipline, which generally performs better than wondershaper, can be enabled. To do so, edit the sysctl configuration file (/etc/sysctl.d/local.conf) and add the line:

net.core.default_qdisc = fq_codel

for general-purpose routers including virtual machine hosts and:

net.core.default_qdisc = fq

for servers that mainly terminate TCP connections rather than forward traffic.

Granting Users Permissions to Files

Using POSIX ACLs, it is possible to modify permissions to files (even recursively) such that it is no longer necessary to fiddle with the limited Linux user and group permissions. For example, suppose you wanted to allow a user access to a directory without adding them to a group and then separately modifying all the file permissions to allow that group access.

In that case, you would write:

setfacl -R -m u:bob:rwX Share

where:

- -R means to recursively change the permissions,
- -m means modify (and -x means remove),
- u: stands for user (and g: for group),
- bob is the user that we want to grant access to,
- rwX means read (r), write (w) and X (note the capital letter). X grants execute permission only on directories and on files that already have execute permission for some user,
- Share is the directory (or file) to set the permissions on.

The command will thus recursively grant bob read and write access to Share and everything in it. It also lets bob enter its directories, but only lets bob execute the files that were already executable.

Change the Default Text Editor

The following command will let you pick the default editor:

update-alternatives --config editor

Print all Open Files Sorted By Number of File Handles

find /proc/*/fd -xtype f -printf "%l\n" | grep -P '^/(?!dev|proc|sys)' | sort | uniq -c | sort -n

Reboot Hanging Machine

With a shell open, in order to force a reboot, issue:

echo b > /proc/sysrq-trigger

which instructs the kernel to immediately reboot the system.

This method bypasses any shutdown scripts on the system and also does not flush the disks so it should be used as a very last resort.

Otherwise, you may have physical access to the machine with a keyboard attached, and Magic SysRq enabled in the kernel (this can be checked with sysctl kernel.sysrq). In that case, hold down Alt+PrtScrn (SysRq) and press the following keys one after another, a few seconds apart. This will reboot the machine more or less gracefully:

Alt+PrtScrn+R, E, I, S, U, B

which will perform, in order:

- R takes the keyboard out of raw mode, giving control back to the kernel,
- E sends all processes but init the TERM signal,
- I sends all processes but init the KILL signal,
- S syncs all mounted filesystems,
- U remounts all filesystems read-only to prevent fsck at boot,
- B reboots the system.

Check Solid State Drive for TRIM

To check whether an attached SSD currently has TRIM enabled, first mount the drive and change directory to the drive:

cd /mnt/ssd

Now create a file:

dd if=/dev/urandom of=tempfile count=100 bs=512k oflag=direct

and check the fib-map:

hdparm --fibmap tempfile

which will output something like:

tempfile:
 filesystem blocksize 4096, begins at LBA 2048; assuming 512 byte sectors.
 byte_offset  begin_LBA    end_LBA    sectors
           0  383099904  383202303     102400

Now, note the number under begin_LBA (383099904 in this example) and run:

hdparm --read-sector 383099904 /dev/sdc

where:

- 383099904 is the number obtained previously,
- /dev/sdc is the device for the SSD drive.

The last command should print a dump of that sector, filled with the random data written earlier.

Now, issue:

rm tempfile
sync

and repeat the previous hdparm command:

hdparm --read-sector 383099904 /dev/sdc

If the output now consists only of zeroes, automatic TRIM is in place. Otherwise, wait for a while and run the last hdparm command again.

Automatically Mount Filesystems on Demand

On distributions based on systemd, filesystems can be mounted on demand instead of at boot through /etc/fstab. This lets the rest of the system boot while any accesses to those filesystems wait until systemd has mounted them.

Suppose you have a /home partition that you want mounted on demand with systemd. In that case, you can modify the /etc/fstab options to read:

noauto,x-systemd.automount

where noauto prevents Linux from mounting the partition on boot and x-systemd.automount will use systemd to auto-mount the partition on demand.

Additionally, the parameter x-systemd.device-timeout=1min can be added to the mount options which will allow for 1 minute before giving up trying to mount the resource which can be useful for network-mounted devices.

Automatically Reboot after Kernel Panic

In order to have Linux automatically reboot after a kernel panic, add the following sysctl settings. On Debian systems, you can add them to the file /etc/sysctl.d/local.conf:

kernel.panic = 30
kernel.panic_on_oops = 1

which will make the machine restart 30 seconds after a panic and treat any kernel oops as a panic.

List Top Memory Consuming Processes

ps -eo pmem,pcpu,rss,vsize,args | sort -k 1 -rn | less

Get the Most Frequently Used Commands

The following snippet takes the second field (the command name) from the output of the history command and counts how many times each command appears:

history | awk '{ a[$2]++ } END { for(i in a) { print a[i] " " i } }' | sort -rn | head -n 20

The counts are then sorted numerically and the 20 most frequent commands are displayed.
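The same counting can be wrapped in a small function that takes the number of results as a parameter. Plain sort -rn is used because adding -u to a numeric sort would treat lines with equal counts as duplicates and keep only one of them. The function name top_commands is hypothetical. Since history is a shell builtin, the function has to be fed from an interactive shell:

```shell
#!/bin/bash
# Read `history` output on stdin, count how often each command (the second
# field) appears and print the N most frequent ones (default 20).
top_commands() {
    local n=${1:-20}
    awk '{ count[$2]++ } END { for (cmd in count) print count[cmd], cmd }' |
        sort -rn | head -n "$n"
}
```

Usage: history | top_commands 10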

Force a Filesystem Check on Reboot

You can add fsck.mode=force and fsck.repair=preen to the kernel command line at the grub menu on reboot (press e to edit the boot entry) in order to trigger a filesystem check. Alternatively, if you feel bold, you can use fsck.repair=yes instead of fsck.repair=preen to have Linux automatically fix all errors. This is especially useful to recover from a damaged root filesystem.

Enable Multi-Queue Block IO Queuing Mechanism

Edit /etc/default/grub and add:

scsi_mod.use_blk_mq=1

to the kernel command line parameters.

Export Linux Passwords

This helper script can be useful in case you wish to export a bunch of "real" users by scanning the home directory and extracting only users that have a folder inside that directory.

- exportusers.sh

#!/bin/bash
###########################################################################
##  Copyright (C) Wizardry and Steamworks 2016 - License: GNU GPLv3      ##
###########################################################################

HOMES="/home"
FILES="/etc/passwd /etc/passwd- /etc/shadow /etc/shadow-"

# owners of the directories under $HOMES (skipping the "total" line of ls)
ls -l "$HOMES" | awk 'NR > 1 { print $3 }' | sort -u | while read -r u; do
    for file in $FILES; do
        while read -r p; do
            ENTRY=`echo "$p" | awk -F':' '{ print $1 }'`
            if [ "$ENTRY" = "$u" ]; then
                # append to passwd, passwd-, shadow or shadow- in the current directory
                echo "$p" >> `basename $file`
            fi
        done < "$file"
    done
done

When the script runs, it will scan all folders under the /home directory and grab the users that own them. It then searches the Linux password files (/etc/passwd, /etc/passwd-, /etc/shadow and /etc/shadow-) for entries belonging to those users. The matching entries are written to files of the same names (passwd, passwd-, shadow and shadow-) in the current directory, which can later be merged into the password files of a different machine.

Create Sparse Image of Device

By default, dd copies every block of a device, including blocks that contain nothing but zeroes. The following command:

dd if=/dev/sda | cp --sparse=always /dev/stdin image.img

will create an image named image.img of the device /dev/sda in which runs of zeroes are stored as holes. The image reads back identical to the device, but only the non-zero data takes up space on disk.

To check that the image was created successfully, you can then issue:

md5sum image.img

and

md5sum /dev/sda

and check that the hashes are identical.
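Hashing both sides with md5sum works, but cmp compares the two directly and stops at the first difference. A sketch that makes the sparse copy and verifies it in one step, assuming GNU cp, which reads the contents of a device file when copying it without -R. The function name sparse_image is hypothetical:

```shell
#!/bin/bash
# Copy a file or device to an image with runs of zeroes stored as holes,
# then verify that the image reads back byte-for-byte identical.
# Arguments: source, destination image.
sparse_image() {
    local src=$1 img=$2
    cp --sparse=always -- "$src" "$img" || return 1
    cmp -s -- "$src" "$img"
}
```

Usage: sparse_image /dev/sda image.img. Afterwards, du -h --apparent-size image.img shows the full size of the image, and du -h image.img shows the space it actually occupies.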

Bind to Reserved Ports as Non-Root User

Non-root users can bind to reserved ports (ports under 1024) under Linux if the binary is granted the CAP_NET_BIND_SERVICE capability:

setcap 'cap_net_bind_service=+ep' /path/to/binary

The systemd equivalent is to add:

CapabilityBoundingSet=CAP_NET_BIND_SERVICE
AmbientCapabilities=CAP_NET_BIND_SERVICE

to the [Service] section of the daemon's unit file.

Mount Apple Images

DMG files are usually compressed; in fact, if you issue in a terminal:

file someimage.dmg

you may get output such as:

someimage.dmg: bzip2 compressed data, block size = 100k

indicating a bzip2 compressed file, or:

someimage.dmg: zlib compressed data

You can then uncompress a bzip2-compressed DMG image under Linux by issuing:

bzip2 -dc someimage.dmg > someimage.dmg.uncompressed

Now, if you inspect the uncompressed image (in this example someimage.dmg.uncompressed):

file someimage.dmg.uncompressed

you will get some interesting info such as:

someimage.dmg.uncompressed: Apple Driver Map, blocksize 512, blockcount 821112, devtype 0, devid 0, descriptors 0, contains[@0x200]: Apple Partition Map, map block count 3, start block 1, block count 63, name Apple, type Apple_partition_map, contains[@0x400]: Apple Partition Map, map block count 3, start block 64, block count 861104, name disk image, type Apple_HFS, contains[@0x600]: Apple Partition Map, map block count 3, start block 811148, block count 4, type Apple_Free

indicating an uncompressed image.

To convert the DMG into an image that can be mounted, you can use the tool dmg2img:

dmg2img someimage.dmg someimage.dmg.uncompressed

You can now mount the image using the HFS+ filesystem:

mount -t hfsplus -o ro someimage.dmg.uncompressed /mnt/media

Purge all E-Mails from Command-Line

To purge all inbox e-mails on Linux from the command line, you can use the mail command with the following sequence of instructions:

d *
q

where:

- mail is the mail reader program,
- d * instructs mail to delete all messages,
- q tells mail to quit.

Remount Root Filesystem as Read-Write

There are cases where a Linux system boots with the root / mounted as read-only. This can occur for various reasons but the standard way of recovering is to issue:

mount -o remount,rw /

which should mount the root filesystem in read-write mode.

However, if /etc/fstab contains bad options, that will not work and you will get errors in dmesg along the lines of:

Unrecognized mount option ... or missing value

This is because mount reads /etc/fstab when you do not specify both the source and the target. To work around the problem, you can remount the root manually by specifying both:

mount -t ext4 /dev/vda1 / -o remount,rw

which should give you enough leverage to adjust the entries in your /etc/fstab file.

Enable Metadata Checksumming on EXT4

Metadata checksumming provides better protection against metadata corruption. You will need e2fsprogs version 1.43 or later. On Debian, you can check your current e2fsprogs version with apt-cache policy e2fsprogs and upgrade to testing or unstable if needed.

On new systems, to enable metadata checksumming at format time, you would issue:

mkfs.ext4 -O metadata_csum /dev/sda1

where:

- /dev/sda1 is the path to the device to be formatted as EXT4

On existing systems, the filesystem must be unmounted first (using a LiveCD, for instance). With the filesystem unmounted and assuming that /dev/sda1 contains the EXT4 filesystem for which metadata checksumming is to be enabled, issue:

e2fsck -Df /dev/sda1

in order to optimise the filesystem; followed by:

resize2fs -b /dev/sda1

to convert the filesystem to 64bit and finally:

tune2fs -O metadata_csum /dev/sda1

to enable metadata checksumming.

If you want, you can see the result by issuing:

dumpe2fs -h /dev/sda1

Now that metadata checksumming is enabled, you may gain some performance by adding a module called crypto-crc32c to the initrd, which enables hardware acceleration for the CRC routines. On Debian, adding the module is a matter of listing it in /etc/initramfs-tools/modules and rebuilding the initramfs with update-initramfs -u.
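Adding the module to the initramfs-tools module list can be made idempotent, so that running the step again does not list the module twice. A minimal sketch. The function name is hypothetical, and the path of the module list is a parameter so that it can be tried on a scratch file first:

```shell
#!/bin/bash
# Append a module name to an initramfs-tools module list, unless a line
# with exactly that name is already present.
# Arguments: path to the module list, module name.
add_initramfs_module() {
    local list=$1 module=$2
    grep -qxF -- "$module" "$list" 2>/dev/null || echo "$module" >> "$list"
}
```

Usage on Debian: add_initramfs_module /etc/initramfs-tools/modules crypto-crc32c && update-initramfs -u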

Get a List of IMAP Users from Logs

The following command will read in /var/log/mail.log and compile a list of unique IMAP users.

cat /var/log/mail.log | \
    grep imap-login:\ Login | \
    sed -e 's/.*Login: user=<\(.*\)>, method=.*/\1/g' | \
    sort | uniq
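The grep and sed steps can be folded into a single sed -n that only prints the lines it managed to rewrite, and sort -u can replace sort | uniq. A sketch that reads the log on stdin so it can be tried on sample lines. The function name is hypothetical, and the log lines are assumed to follow Dovecot's imap-login: Login: user=<...> format:

```shell
#!/bin/bash
# Read mail log lines on stdin and print the unique user names of
# successful IMAP logins, assuming Dovecot's
# "imap-login: Login: user=<name>, ..." message format.
imap_users() {
    sed -n 's/.*imap-login: Login: user=<\([^>]*\)>.*/\1/p' | sort -u
}
```

Usage: imap_users < /var/log/mail.log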

Disable Console Blanking

To stop the Linux console from blanking (turning off), any of the following methods can be used:

- append consoleblank=0 to the Linux kernel parameters (i.e. edit /etc/default/grub on Debian),
- issue setterm -blank 0 -powerdown 0 on the console to turn off blanking,
- issue echo -ne "\033[9;0]" >/dev/ttyX, where X is the number of the console to turn off blanking for,
- issue echo -ne "\033[9;0]" >>/etc/issue to turn off blanking (/etc/issue is printed on the console at boot, before the login prompt).

Note that setterm -blank 0 and echo -ne "\033[9;0]" are equivalent, so the output of either can be redirected to a tty device.

Manipulating Linux Console

Most console-oriented commands that are meant to work on virtual terminals expect a proper terminal to be set up and to be executed on a virtual terminal. The openvt command can be used to execute a program on the Linux virtual terminal. For instance, to force the screen to blank whilst being logged-in via an SSH session (/dev/pts), issue:

TERM=linux openvt -c 1 -f -- setterm -blank force

where:

- TERM=linux sets the terminal type to linux; otherwise the terminal type of the SSH session is going to be assumed,
- openvt makes the command run on a virtual terminal,
- -c 1 selects /dev/tty1,
- -f forces the command to run even if the virtual terminal is occupied (which is the case by default for login terminals),
- -- is the separator between the openvt parameters and the command to be executed,
- setterm is the command to execute and
- -blank force instructs the terminal to blank.

Resetting USB Device from Command Line

As a side effect of Wireless USB support, Linux gained the capability of simulating a USB disconnect and reconnect. This is particularly useful if the device is connected inside the machine and cannot be physically unplugged and replugged.

The first step is to identify the device you want to reset by issuing:

lsusb

and check the column with the device ID. For instance, suppose you want to reset the device:

Bus 001 Device 007: ID 05b7:11aa Canon, Inc.

such that the relevant bit to retain is the vendor ID 05b7 and the product id 11aa.

Next, locate the device on the USB HUB by issuing:

find -L /sys/bus/usb/devices/ -maxdepth 2 -name 'id*' -print -exec cat '{}' \; | xargs -L 4

and then locate the /sys path to the device you would like to reset. In this case, the line matching the vendor and product ID would be:

/sys/bus/usb/devices/1-8/idProduct 11aa /sys/bus/usb/devices/1-8/idVendor 05b7

Finally deauthorize the device by issuing:

echo 0 >/sys/bus/usb/devices/1-8/authorized

and re-authorize the device by issuing:

echo 1 >/sys/bus/usb/devices/1-8/authorized

The above is sufficient to trigger a udev hotplug event, which is useful when debugging udev scripts.
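The lookup of the device directory can also be scripted instead of reading through the find output by eye. A minimal sketch that walks the device directories and compares idVendor and idProduct. The function name is hypothetical, and the sysfs root is a parameter so that it can be tested against a mock tree:

```shell
#!/bin/bash
# Print the sysfs directory of every USB device whose idVendor and
# idProduct files match the given IDs.
# Arguments: vendor ID, product ID, optional root (default /sys/bus/usb/devices).
find_usb_device() {
    local vendor=$1 product=$2 root=${3:-/sys/bus/usb/devices} dev
    for dev in "$root"/*; do
        [ -r "$dev/idVendor" ] && [ -r "$dev/idProduct" ] || continue
        if [ "$(cat "$dev/idVendor")" = "$vendor" ] &&
           [ "$(cat "$dev/idProduct")" = "$product" ]; then
            echo "$dev"
        fi
    done
}
```

For example, run dev=$(find_usb_device 05b7 11aa) and then write 0 and 1 to "$dev/authorized" as root. If several identical devices are attached, one line is printed per device.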

Set CPU Governor for all CPUs

The following command will set the CPU governor to powersave for all CPUs installed in the system:

for i in `find /sys/devices/system/cpu/cpu[0-9]* -type d | awk -F'/' '{ print $6 }' | sort -g -k1.4,1 -u | cut -c 4-`; do cpufreq-set -c $i -g powersave; done
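The same loop can be written with a shell glob that lists the cpuN directories directly and sorts their numbers numerically. A sketch. The function name is hypothetical, and the sysfs directory is a parameter so that it can be tried on a mock tree:

```shell
#!/bin/bash
# Print the number of every cpuN directory below the given directory
# (default /sys/devices/system/cpu), in numeric order. Entries such as
# cpufreq or cpuidle do not match cpu[0-9]* and are skipped.
cpu_numbers() {
    local root=${1:-/sys/devices/system/cpu} dir
    for dir in "$root"/cpu[0-9]*; do
        [ -d "$dir" ] && echo "${dir##*/cpu}"
    done | sort -n
}
```

Usage: for i in $(cpu_numbers); do cpufreq-set -c "$i" -g powersave; done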

Enable Persistent Journal Logging

From systemd-journald(8):

mkdir -p /var/log/journal
systemd-tmpfiles --create --prefix /var/log/journal

Home Folder Permissions

- 711 if you do not want the group to be able to list your home directory either, or
- 751 so that the group can list it while the public cannot read your home directory.

Correct /etc/hosts Setup

In the event that Linux decides to answer with an IPv6 address when pinging localhost, for example:

PING localhost(localhost (::1)) 56 data bytes
64 bytes from localhost (::1): icmp_seq=1 ttl=64 time=0.226 ms
64 bytes from localhost (::1): icmp_seq=2 ttl=64 time=0.291 ms
64 bytes from localhost (::1): icmp_seq=3 ttl=64 time=0.355 ms
64 bytes from localhost (::1): icmp_seq=4 ttl=64 time=0.353 ms

then the issue is an incorrect setup of /etc/hosts. Notably, the IPv6 entries are not set up correctly, so Linux answers with the IPv6 equivalent address of localhost.

Open /etc/hosts and modify the IPv6 section to contain the following:

# The following lines are desirable for IPv6 capable hosts
::1     ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
ff02::3 ip6-allhosts

and all services should start working properly again.

Disk Dump with Progress

On newer Linux systems, the command:

dd if=/dev/xxx of=/dev/yyy bs=8M status=progress

will display progress status whilst copying. Unfortunately, that does not include a convenient progress bar to check for completion.

Alternatively, the corresponding command:

pv -tpreb /dev/xxx | dd of=/dev/yyy bs=8M

will use pv and display a progress bar.

Disable Spectre and Meltdown Patches

Add the following kernel parameters to the GRUB_CMDLINE_LINUX_DEFAULT line in /etc/default/grub:

nopti kpti=0 noibrs noibpb l1tf=off mds=off nospectre_v1 nospectre_v2 spectre_v2_user=off spec_store_bypass_disable=off nospec_store_bypass_disable ssbd=force-off no_stf_barrier tsx_async_abort=off nx_huge_pages=off kvm.nx_huge_pages=off kvm-intel.vmentry_l1d_flush=never mitigations=off

and execute:

update-grub

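After a reboot, the mitigations should be disabled and the performance lost to them should be recovered. The current state can be checked under /sys/devices/system/cpu/vulnerabilities/.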
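The parameters can also be appended non-interactively with sed. The following is a sketch, assuming the Debian-style GRUB_CMDLINE_LINUX_DEFAULT line is present and double-quoted. The helper name add_kernel_param is hypothetical, only mitigations=off is shown for brevity, and the edit is tried on a scratch copy instead of the real file.

```shell
#!/bin/sh
# add_kernel_param FILE PARAM (hypothetical helper): append PARAM inside the
# double-quoted value of GRUB_CMDLINE_LINUX_DEFAULT in FILE, unless PARAM is
# already present. PARAM must not contain "/" (it is used inside sed).
add_kernel_param() {
    file=$1 param=$2
    if grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=.*[\" ]$param[\" ]" "$file"; then
        return 0                          # already present, nothing to do
    fi
    # Insert " PARAM" right before the closing quote of the value.
    sed -i "s/^\(GRUB_CMDLINE_LINUX_DEFAULT=\".*\)\"$/\1 $param\"/" "$file"
}

# Try it on a scratch copy instead of /etc/default/grub.
grub_copy=$(mktemp)
echo 'GRUB_CMDLINE_LINUX_DEFAULT="quiet splash"' > "$grub_copy"
add_kernel_param "$grub_copy" mitigations=off
add_kernel_param "$grub_copy" mitigations=off   # second call is a no-op
cat "$grub_copy"
```

After editing the real file, update-grub still has to be run as described above.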

Allow Binding Privileged Ports

setcap 'cap_net_bind_service=+ep' /path/to/program

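where /path/to/program is an executable and not a script. File capabilities set on a script are not honored, because the kernel actually executes the script's interpreter.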

Determining the Last Power On Method

dmidecode can be used to retrieve BIOS information, including the method by which the machine was last powered on:

dmidecode -t system | grep 'Wake-Up Type'

will print the last wake-up type.

Issues with Stuck Cores

It may happen that logs fill up with messages indicating that some power management policy cannot be enforced on a given CPU core:

cpufreqd: cpufreqd_loop : Cannot set policy, Rule unchanged ("none").

cpufreqd: cpufreqd_set_profile : Couldn't set profile "Performance High" set for cpu4 (100-100-performance)

It may be that the CPU core is simply stuck and needs to be hot-unplugged and plugged back in. The first of the following two commands takes the CPU offline and the second brings it back online:

echo "0" > /sys/devices/system/cpu/cpu4/online
echo "1" > /sys/devices/system/cpu/cpu4/online

In doing so, the power management issue seems to be resolved.

Automatically Add all RNDIS Devices to a Bridge

Edit or create the file at /etc/udev/rules.d/70-persistent-net.rules with the following contents:

SUBSYSTEM=="net", ACTION=="add", ATTRS{idProduct}=="a4a2", ATTRS{idVendor}=="0525", RUN+="/bin/sh -c '/sbin/ip link set dev %k up && /sbin/brctl addif br0 %k'"

where:

br0 is the interface name of the bridge that the RNDIS devices will be added to.

followed by the command:

udevadm control --reload

to reload all udev rules.

This works because 0525 and a4a2 are, respectively, the vendor and product identifiers of the RNDIS/Ethernet gadget, not of the network device itself. Since ATTRS also searches the parent devices, the rule still matches. For instance, issuing:

udevadm info -a /sys/class/net/usb0

will show the network device at the top without any identifiers, whereas its parent, the RNDIS/Ethernet Gadget, carries the matching identifiers.

One use case for this rule is to connect a number of RNDIS devices to a USB hub and have them join the network automatically as they are hotplugged. For instance, Raspberry Pis can be configured as USB gadgets and then connected to a USB hub.

Scraping a Site Automatically using SystemD

FTP sites can be scraped elegantly by using systemd and tmux on Linux. By starting a tmux detached terminal, wget can run in the background and download a website entirely whilst also allowing the user to check up on the progress by manually attaching and detaching from tmux.

The following service file contains a few parameters between the Configuration and Internals comments that set:

- the download path (DOWNLOAD_DIRECTORY),
- the wget download URL, using any protocol supported by wget such as FTP or HTTP (DOWNLOAD_URL),
- a descriptive name for the tmux session (TMUX_SESSION_NAME).

[Unit]
Description=Scrape FTP Site
Requires=network.target local-fs.target remote-fs.target
After=network.target local-fs.target remote-fs.target

[Install]
WantedBy=multi-user.target

[Service]
# Configuration
Environment="DOWNLOAD_DIRECTORY=/path/to/directory"
Environment="DOWNLOAD_URL=ftp://somesite.tld/somedirectory"
Environment="TMUX_SESSION_NAME=somesite.tld-download"
# Internals
Type=oneshot
KillMode=none
User=root
ExecStartPre=-/bin/mkdir -p ${DOWNLOAD_DIRECTORY}
ExecStart=/usr/bin/tmux new-session -d -c ${DOWNLOAD_DIRECTORY} -s ${TMUX_SESSION_NAME} -n ${TMUX_SESSION_NAME} "/usr/bin/wget -c -r '${DOWNLOAD_URL}' -P '${DOWNLOAD_DIRECTORY}'"
ExecStop=/usr/bin/tmux send-keys -t ${TMUX_SESSION_NAME} C-c
RemainAfterExit=yes

The file should be placed under /etc/systemd/system. Then:

- reload systemd by issuing systemctl daemon-reload,
- enable the service with systemctl enable SERVICE_FILE_NAME, where SERVICE_FILE_NAME is the name of the file copied into /etc/systemd/system,
- start the service by issuing systemctl start SERVICE_FILE_NAME.

On every boot, the service will create a detached tmux session and start scraping files from the URL.

Access Directory Underneath Mountpoint

In order to access a directory underneath a mountpoint without unmounting, create a bind mount of the root filesystem to a directory and then access the content via the bind mount.

For example, to access the contents of the directory /mnt/usb, on top of which a filesystem has been mounted, first create a directory:

mkdir /mnt/root

and create a bind mount:

mount -o bind / /mnt/root

Finally, access the original underlying content via the path /mnt/root/mnt/usb.

Self-Delete Shell Script

rm -- "$0"
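The following is a small demonstration, assuming a POSIX shell. It writes a throwaway script into a temporary directory and runs it. The script removes its own file via $0 and keeps running, because the shell still holds the already opened file. The file and directory names are illustrative only.

```shell
#!/bin/sh
dir=$(mktemp -d)
script="$dir/self-delete.sh"   # illustrative name

cat > "$script" <<'EOF'
#!/bin/sh
# Remove this very file; the running shell keeps its open file descriptor,
# so the remaining lines still execute.
rm -- "$0"
echo "still running after removing $0"
EOF

# Run through sh so that a noexec temporary directory does not matter.
sh "$script"
[ -e "$script" ] || echo "script file is gone"
```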

Resize Last Partition and Filesystem in Image File

Assuming that an image file is available and named, for example, raspios.img then the following procedure will extend the last partition and the filesystem by a given size.

First, extend the image file raspios.img itself with zeroes:

dd if=/dev/zero bs=1M count=500 >> raspios.img

where:

1M is the block size, and 500 is the number of blocks to append

In this case, the image file raspios.img is extended by 500 × 1MiB = 500MiB. Alternatively, qemu-img can be used to extend the image file:

qemu-img resize raspios.img +500M
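The growth arithmetic can be checked on a small scratch file. This sketch scales the numbers down: a 1MiB file stands in for raspios.img and is grown by 5 blocks of 1MiB, both assumptions for illustration. It relies on GNU stat to read the size back.

```shell
#!/bin/sh
img=$(mktemp)

# Start with a 1MiB file standing in for raspios.img.
dd if=/dev/zero of="$img" bs=1M count=1 2>/dev/null

# Append count * bs bytes of zeroes, exactly as with the real image.
bs=1048576   # 1M, i.e. 1024 * 1024 bytes
count=5
dd if=/dev/zero bs="$bs" count="$count" 2>/dev/null >> "$img"

size=$(stat -c %s "$img")
echo "$size bytes = $((size / bs))MiB"
rm -f "$img"
```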

The next step is to run parted and extend the last partition inside the image file. Open the image file with parted:

parted raspios.img

and then resize the partition, for example:

(parted) resizepart 2 100%

where:

2 is the partition number

The parted command will resize the second partition to fill 100% of the available space. In this example, it will extend the second partition by 500MiB.

The final step is to enlarge the filesystem within the second partition, which has just been extended by 500MiB. kpartx will create mapped devices for each of the partitions contained within the raspios.img image file:

kpartx -avs raspios.img

First, the existing filesystem has to be checked:

e2fsck -f /dev/mapper/loop0p2

where:

/dev/mapper/loop0p2 is the last partition reported by kpartx

and then finally the filesystem is extended to its maximum size:

resize2fs /dev/mapper/loop0p2

Afterwards, the partition mappings can be removed again with kpartx -d raspios.img.

Delete Files Older than X Days

find /path -mtime +N -delete

where:

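N is the number of days. Since find ignores fractional days, -mtime +N matches files last modified at least N+1 full days ago.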
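The following is a sketch that can be tried safely in a scratch directory, assuming GNU touch, which accepts relative dates such as "10 days ago". The -type f test is an addition here that keeps find from deleting directories.

```shell
#!/bin/sh
dir=$(mktemp -d)

# One file last modified 10 days ago, one modified just now.
touch -d '10 days ago' "$dir/old.log"
touch "$dir/new.log"

# Delete regular files older than 7 days.
find "$dir" -type f -mtime +7 -delete

ls "$dir"
```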

Elusive Errors from Crontab

Sometimes the error:

/bin/sh: 1: root: not found

might be reported by cron.

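The reason might be that a user ran crontab /etc/crontab. This installs the system crontab as that user's personal crontab under /var/spool/cron/crontabs/. Unlike personal crontabs, /etc/crontab has an extra user field before the command, so cron ends up trying to execute root as a command. To remedy the situation, delete the offending crontab under /var/spool/cron/crontabs/ (for instance with crontab -r, run as that user) and reload the cron daemon.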

Clear Framebuffer Device

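The following command will clear a 1024x768 resolution Linux framebuffer, assuming 32 bits (4 bytes) per pixel. Each block is one row of 1024 pixels, and 768 rows are written:

dd if=/dev/zero of=/dev/fb0 bs=$((1024 * 4)) count=768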
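The number of bytes to clear is width × height × bits per pixel / 8. The following sketch computes it with a hypothetical helper, fb_bytes. It assumes the kernel exposes the geometry as /sys/class/graphics/fb0/virtual_size (in the form "W,H") and /sys/class/graphics/fb0/bits_per_pixel, and falls back to 1024x768 at 32 bits per pixel otherwise. Row padding, if any, is ignored.

```shell
#!/bin/sh
# fb_bytes WIDTH HEIGHT BPP (hypothetical helper): framebuffer size in bytes.
fb_bytes() {
    echo $(( $1 * $2 * $3 / 8 ))
}

sysfs=/sys/class/graphics/fb0
if [ -r "$sysfs/virtual_size" ] && [ -r "$sysfs/bits_per_pixel" ]; then
    # virtual_size reads like "1024,768".
    width=$(cut -d, -f1 "$sysfs/virtual_size")
    height=$(cut -d, -f2 "$sysfs/virtual_size")
    bpp=$(cat "$sysfs/bits_per_pixel")
else
    width=1024 height=768 bpp=32
fi

bytes=$(fb_bytes "$width" "$height" "$bpp")
echo "$bytes"
# Clearing it then requires root:
# dd if=/dev/zero of=/dev/fb0 bs="$bytes" count=1
```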

Adding Mount Point Dependencies to SystemD Service Files

To get a list of filesystems that are configured (i.e. via /etc/fstab), issue:

systemctl list-units | grep '/path/to/mount' | awk '{ print $1 }'

The command will return a list of mount units all ending in .mount.

Edit the SystemD service file in /etc/systemd/system/ and add to its [Unit] section:

After=... FS.MOUNT
Requires=... FS.MOUNT

where:

FS.MOUNT is the mount unit retrieved with the previous command

Detaching a Cryptsetup Header from an Existing Encrypted Disk

Creating an encrypted container with a detached header adds some plausible deniability since the partition or drive signature will not be observable to someone obtaining the disk drive.

An encrypted volume with a detached header can be created using the cryptsetup utility on Linux. The question is whether the header can be "detached" at some time after the volume has been created. Fortunately, the LUKS header is straightforward: like any other filesystem header, it resides at the start of the disk. "Detaching" it therefore amounts to dumping the header to a file and then wiping it from the disk drive.

Given an encrypted disk drive recognized as /dev/sdb on Linux, the first operation would be to dump the header:

cryptsetup luksHeaderBackup /dev/sdb --header-backup-file /opt/sdb.header

Next, the /dev/sdb drive has to be inspected in order to find out how large the LUKS header is:

cryptsetup luksDump /dev/sdb

which will print out something similar to the following:

LUKS header information
Version:        2
Epoch:          3
Metadata area:  12475 [bytes]
Keyslots area:  18312184 [bytes]
UUID:           26e2b280-de17-6345-f3ac-2ef43682faa2
Label:          (no label)
Subsystem:      (no subsystem)
Flags:          (no flags)

Data segments:
  0: crypt
        offset: 22220875 [bytes]
        length: (whole device)
        cipher: aes-xts-plain64
        sector: 512 [bytes]

Keyslots:
  0: luks2
        Key:        256 bits
...

The important part here is the offset:

...
Data segments:
  0: crypt
        offset: 22220875 [bytes]
        length: (whole device)
...

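22220875 is the offset, in bytes from the start of the disk, at which the encrypted data begins. Everything before it (metadata and keyslots) belongs to the header, so this is also the length of the header.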
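The offset can also be extracted automatically instead of being read off by eye. The following is a sketch, assuming the LUKS2 luksDump layout shown above, where the first "offset:" line after "Data segments:" belongs to the first data segment. The helper name luks_data_offset is hypothetical.

```shell
#!/bin/sh
# luks_data_offset (hypothetical helper): read `cryptsetup luksDump` output
# on standard input and print the byte offset of the first data segment,
# i.e. the size of the header.
luks_data_offset() {
    awk '
        /^Data segments:/ { in_segments = 1; next }
        in_segments && $1 == "offset:" { print $2; exit }
    '
}

# Usage on a real drive (root required):
# header_size=$(cryptsetup luksDump /dev/sdb | luks_data_offset)

# Example using an excerpt of the dump shown above.
sample='Data segments:
  0: crypt
        offset: 22220875 [bytes]
        length: (whole device)'
printf '%s\n' "$sample" | luks_data_offset
```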

The next step is thus to delete the header:

dd if=/dev/zero of=/dev/sdb bs=22220875 count=1

where:

22220875 is the length of the header

Finally, the disk can be opened using cryptsetup and by providing the header file created previously at /opt/sdb.header:

cryptsetup luksOpen --header /opt/sdb.header /dev/sdb mydrive

The command should now open the drive with the header detached and placed at /opt/sdb.header.

Block Device Transfers over the Network

When transferring large files over the network the following considerations must be observed:

- encryption - whether encryption is necessary or not; encryption will slow down a transfer particularly if there is no hardware acceleration available,

- compression - depending on the files being transferred, compression can reduce the amount of data being transferred; nevertheless, if the compressor only uses a single CPU core, that core can become the bottleneck of the transfer.

For instance, with both encryption and compression, the following commands, executed on the server and on the client respectively, will transfer /dev/device over the network.

On the server (receiver), issue:

nc -l -p 6500 |                                         # listens on port 6500
    openssl aes-256-cbc -d -salt -pass pass:mysecret |  # decrypts with password mysecret
    pigz -d |                                           # decompresses
    dd bs=16M of=/dev/device                            # writes the stream to /dev/device

where:

- aes-256-cbc is the symmetric cipher to use (execute cryptsetup benchmark, for example, to get a speed estimate of the available ciphers and hopefully find a hardware-accelerated one),
- pigz is a parallel gzip implementation that spreads compression over all CPUs, so that compression does not become a CPU bottleneck,

On the client (sender), issue:

pv -b -e -r -t -p /dev/device |                      # reads from /dev/device (with stats)
    pigz -1 |                                        # compresses the stream
    openssl aes-256-cbc -salt -pass pass:mysecret |  # encrypts with password mysecret
    nc server.lan 6500 -q 1                          # connects to server.lan on port 6500

where:

-b -e -r -t -p are flags that turn on, in turn:

- -b, a byte counter that counts the number of bytes read,
- -e, an estimated time of arrival (ETA) for reading the entire device,
- -r, a rate counter that displays the current rate of data transfer,
- -t, a timer that displays the total elapsed time spent reading,
- -p, a progress bar,

-q 1 indicates that nc should terminate one second after EOF has been reached while reading /dev/device
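Before involving the network, the compression and encryption stages can be checked locally by feeding the client half of the pipeline straight into the server half. The following is a sketch, assuming gzip as a stand-in for pigz (pigz produces the same gzip stream format) and the OpenSSL command-line tool being installed. A 1MiB random file stands in for /dev/device.

```shell
#!/bin/sh
work=$(mktemp -d)
head -c 1048576 /dev/urandom > "$work/device.img"   # stand-in for /dev/device

# Client half (compress, encrypt) piped directly into the server half
# (decrypt, decompress); nc is left out so that no network is needed.
# stderr is silenced to hide OpenSSL's key-derivation warning.
gzip -1 < "$work/device.img" |
    openssl aes-256-cbc -salt -pass pass:mysecret 2>/dev/null |
    openssl aes-256-cbc -d -salt -pass pass:mysecret 2>/dev/null |
    gzip -d > "$work/restored.img"

# cmp -s succeeds only if both files are byte-for-byte identical.
if cmp -s "$work/device.img" "$work/restored.img"; then
    echo "round trip OK"
fi
```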

Alternatively, if no compression or encryption is desired, the Network Block Device (NBD) might be more convenient.

Determine if System is Big- or Little Endian

echo -n I | hexdump -o | awk '{ print substr($2,6,1); exit}'

will display:

- 0 on a big-endian system,
- 1 on a little-endian system
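The same idea can be expressed with od from coreutils instead of hexdump. This is a sketch of that alternative, not the command above. Two known bytes are written and od reinterprets them as one 16-bit integer in the host's native byte order.

```shell
#!/bin/sh
# Write the bytes 0x01 0x00 and let od read them as one unsigned 16-bit
# integer in native byte order: a little-endian host reads 1, a big-endian
# host reads 256.
value=$(printf '\001\000' | od -An -tu2 | tr -d ' ')
if [ "$value" -eq 1 ]; then
    byte_order=little
else
    byte_order=big
fi
echo "$byte_order endian"
```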

Network Emulation and Testing using Traffic Control

The traffic control subsystem of the Linux kernel, configured with the tc utility, can be used in order to perform network testing. In particular, it can simulate packet delay, packet loss, packet duplication, packet corruption and packet reordering, as well as perform rate control.

A simple setup would look like the following. A Linux gateway NATs a client machine A to the Internet and, at the same time, uses traffic shaping to delay the packets sent by client machine A.

IP: a.a.a.a
               eth0             eth1
+---+               +---------+
| A +-------------->+ Gateway +--------------> Internet
+---+               +---------+

Using traffic shaping, the following commands can be used to induce a delay for all packets originating from client A and then forwarded to the Internet:

tc qdisc del dev eth1 root
tc qdisc add dev eth1 handle 1: root htb
tc class add dev eth1 parent 1: classid 1:15 htb rate 100000mbit
tc qdisc add dev eth1 parent 1:15 handle 20: netem delay 4000ms
tc filter add dev eth1 parent 1:0 prio 1 protocol ip handle 1 fw flowid 1:15

netem will take care of delaying the packets, in this example by a constant 4000ms per packet. Once the classes have been established, a filter is set up to match all packets marked by iptables and push them through the netem qdisc.

iptables can then be used to mark packets and send them through tc:

iptables -t mangle -A FORWARD -s a.a.a.a -j MARK --set-mark 1

where:

1 is the mark matched by the tc filter command (handle 1 fw).

In other words, when packets from client A at IP a.a.a.a arrive at the Linux gateway on the interface eth0, they are marked with the value 1. The packets are then sent out to the Internet over the interface eth1. There, the fw filter matches the mark and pushes all packets originating from a.a.a.a into the class 1:15, where the netem qdisc with handle 20: delays them by 4000ms each.

The following schematic illustrates the traffic control setup achieved using the commands written above:

root 1: root HTB (qdisc)
         |
1:15 HTB (class)
         |
20: netem (qdisc)

and it can be displayed by issuing the command:

tc -s qdisc ls dev eth1

that would result in the following output:

qdisc htb 1: root refcnt 2 r2q 10 default 0 direct_packets_stat 23 direct_qlen 1000
 Sent 3506 bytes 23 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0
qdisc netem 20: parent 1:15 limit 1000 delay 5s
 Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
 backlog 0b 0p requeues 0

Given this setup, the traffic shaper tc has to be set up only once and then iptables marking can be leveraged to selectively mark packets that have to be delayed. Using both iptables and tc is somewhat more flexible in terms of separation of concerns. iptables is used to perform packet matching and then tc is used to induce all kinds of effects supported by netem on the marked packets.
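The tc commands used for the delay can be wrapped in a small script so that the interface, the delay and the mark become parameters. The following is a sketch, assuming the same HTB/netem layout. The variable names, the setup_delay function and the DRY_RUN switch are hypothetical. DRY_RUN prints the commands instead of running them, which allows reviewing them without root.

```shell
#!/bin/sh
# Hypothetical parameters, defaulting to the values of the example.
IFACE=${IFACE:-eth1}
DELAY=${DELAY:-4000ms}
MARK=${MARK:-1}

# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_delay() {
    # Deleting a non-existent root qdisc fails harmlessly, so ignore errors.
    run tc qdisc del dev "$IFACE" root 2>/dev/null || true
    run tc qdisc add dev "$IFACE" handle 1: root htb
    run tc class add dev "$IFACE" parent 1: classid 1:15 htb rate 100000mbit
    run tc qdisc add dev "$IFACE" parent 1:15 handle 20: netem delay "$DELAY"
    # Send packets carrying firewall mark $MARK into class 1:15.
    run tc filter add dev "$IFACE" parent 1:0 prio 1 protocol ip \
        handle "$MARK" fw flowid 1:15
}
```

After sourcing the script, setting DRY_RUN=1 and calling setup_delay prints the five commands. Calling setup_delay as root without DRY_RUN applies them.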

At any point in time, a single tc command can be used to change the induced delay by modifying the queueing discipline. For instance, by issuing the command:

tc qdisc change dev eth1 parent 1:15 handle 20: netem delay 10ms

any new packets will be delayed by 10ms instead of 4000ms. Packets already in the queue, which had been delayed by 4000ms, will not be flushed and will arrive in due time.

As a side-note, there is a certain degree of overlap in features between iptables and the network emulator netem. For instance, the following iptables command:

iptables -t mangle -A FORWARD -m statistic --probability 0.5 -s a.a.a.a -j DROP

will achieve the same effect as using the traffic shaper tc with the network emulator netem to induce a 50% loss of packets:

tc qdisc del dev eth1 root
tc qdisc add dev eth1 handle 1: root htb
tc class add dev eth1 parent 1: classid 1:15 htb rate 10000mbps
tc qdisc add dev eth1 parent 1:15 handle 20: netem loss 50%
tc filter add dev eth1 parent 1:0 prio 1 protocol ip handle 1 fw flowid 1:15
iptables -t mangle -A FORWARD -s a.a.a.a -j MARK --set-mark 1

The exact same effect can be achieved using only the traffic shaper tc and the network emulator netem, without iptables:

tc qdisc del dev eth1 root
tc qdisc add dev eth1 handle 1: root htb
tc class add dev eth1 parent 1: classid 1:15 htb rate 10000mbps
tc qdisc add dev eth1 parent 1:15 handle 20: netem loss 50%
tc filter add dev eth1 parent 1:0 protocol ip prio 1 u32 match ip src a.a.a.a/32 flowid 1:15

All the variants above will, on average, randomly drop half of the forwarded packets originating from the IP address a.a.a.a.

The difference between using tc and iptables, aside from different features, is that tc works directly on the queues of each network interface. On ingress, tc processes packets before iptables gets to see them. On egress, tc processes packets after iptables, which is why the fw filter above can match the marks set in the iptables mangle table.

Encoding Binary Data to QR Code

The following command:

cat Papers.key | qrencode -8 -o - | zbarimg --raw -q -1 -Sbinary - > Papers.key2

will:

- pipe the contents of the file Papers.key,
- create a QR code image from the data,
- read the QR code from the image and write it to Papers.key2,

effectively performing a round-trip by encoding and decoding the binary data contained in Papers.key.

Alternatively, since binary data might not be properly handled by various utilities, the binary data can be armored by using an intermediary base64 encoder. In other words, the following command:

cat Papers.key | base64 | qrencode -o - > Papers.png

will:

- pipe the contents of the file Papers.key,
- base64 encode the data,
- generate the QR code image file Papers.png

Then, in order to decode, the following command:

zbarimg --raw -q -1 Papers.png | base64 -d > Papers.key

will:

- read the QR code,
- decode the data using base64,
- output the result to the file Papers.key
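The base64 armoring step can be checked on its own, without any QR tools. base64 turns arbitrary bytes into printable text that decodes back to the identical bytes, at the cost of about 4/3 in size. The following sketch uses a random 300-byte file as a stand-in for Papers.key, an assumption for illustration.

```shell
#!/bin/sh
work=$(mktemp -d)
head -c 300 /dev/urandom > "$work/Papers.key"   # stand-in binary data

# Armor and de-armor: the two steps that surround qrencode and zbarimg.
base64 < "$work/Papers.key" > "$work/Papers.b64"
base64 -d < "$work/Papers.b64" > "$work/Papers.key2"

raw=$(wc -c < "$work/Papers.key")
armored=$(tr -d '\n' < "$work/Papers.b64" | wc -c)
echo "raw: $raw bytes, base64: $armored characters"
```

Every 3 input bytes become 4 characters, so 300 bytes become exactly 400 characters.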

Fixing Patch with Different Line Endings

The general procedure is to make line endings the same for both the patch and the files to be patched. For instance, to normalize the line endings for all the files included in a patch:

grep '^+++ ' dogview.patch | awk '{ print $2 }' | sed 's|^b/||' | xargs dos2unix

followed by normalizing the line endings for dogview.patch:

dos2unix dogview.patch

After which, the patch can be applied:

patch -p1 < dogview.patch
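The file-name extraction can be packaged as a reusable function. The following is a sketch, assuming a git-style patch with a/ and b/ prefixes. The helper name patch_files and the example patch are hypothetical. Only lines that start with "+++ " are read, only a leading "b/" is stripped, and "/dev/null" targets (deleted files) are skipped.

```shell
#!/bin/sh
# patch_files PATCH (hypothetical helper): list the target files of a
# git-style patch, one per line.
patch_files() {
    # "+++ b/path/to/file" -> "path/to/file"
    grep '^+++ ' "$1" | awk '$2 != "/dev/null" { print $2 }' |
        sed 's|^b/||' | sort -u
}

# Usage: patch_files dogview.patch | xargs dos2unix

# Example on a small hypothetical patch whose path itself contains "b/".
example=$(mktemp)
cat > "$example" <<'EOF'
--- a/lib/b/view.c
+++ b/lib/b/view.c
@@ -1 +1 @@
-old
+new
EOF
patch_files "$example"
```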

Sending Mail from the Linux Command Line using External Mail Servers

The current options seem to be to use the following programs:

- s-nail (formerly nail),
- curl,
- ssmtp (not covered here because ssmtp seems to be an MTA and not an MUA, such that it is not useful for these examples)

As a general pitfall, the following error shows up frequently when following various online example calls of these commands:

could not initiate TLS connection: error:1408F10B:SSL routines:ssl3_get_record:wrong version number

More often than not, when a TLS connection has to be made via STARTTLS, the problem is the following. The connection has to be established in plain text first, and the TLS protocol is only negotiated between the client and the server after the STARTTLS command has been issued. However, most examples for commands such as the ones above tell the user to specify TLS connection strings such as:

smtps://USERNAME:PASSWORD@MAILSERVER:PORT

where smtps implies that the connection is encrypted from the very start (implicit TLS), whereas with STARTTLS there is no encryption when the connection is established. The usual fix is to replace smtps by smtp and make sure that the client actually issues STARTTLS before proceeding to encryption.

S-Nail

Using s-nail the command options would be the following:

s-nail -:/ \
    -Sv15-compat \
    -S ttycharset=utf8 \
    -S mta='smtp://USERNAME:PASSWORD@MAILSERVER:PORT' \
    -S smtp-use-starttls \
    -S smtp-auth=login \
    -S from=SENDER \
    -S subject=test \
    -end-options RECIPIENT

where:

- USERNAME is the username of the account; for instance, for outlook.com, the username is the entire E-Mail address of the account; additionally, note that any special characters must be URI encoded,
- PASSWORD is the URI encoded password for the account,
- MAILSERVER is the E-Mail server host,
- PORT is the E-Mail server port,
- SENDER is the envelope sender (your E-Mail),
- RECIPIENT is the destination

cURL

curl \
    --ssl-reqd \
    --url 'smtp://MAILSERVER:PORT/' \
    --mail-from 'SENDER' \
    --mail-rcpt 'RECIPIENT' \
    --user USERNAME:PASSWORD \
    -v \
    -T mail.txt

and mail.txt has the following shape:

From: SENDER
To: RECIPIENT
Subject: SUBJECT

BODY

where:

- USERNAME is the username of the account; for instance, for outlook.com, the username is the entire E-Mail address of the account,
- PASSWORD is the password for the account,
- MAILSERVER is the E-Mail server host,
- PORT is the E-Mail server port,
- SENDER is the envelope sender (your E-Mail),
- RECIPIENT is the destination,
- SUBJECT is the subject of the E-Mail,
- BODY is the body of the E-Mail

Note that it is not necessary to use an additional file such as mail.txt for the E-Mail and it is possible to pipe the contents of the mail.txt from the command line by replacing -T mail.txt by -T - indicating that the E-Mail will be read from standard input. For example:

printf 'From: SENDER\nTo: RECIPIENT\nSubject: SUBJECT\n\nBODY\n' | curl \
    --ssl-reqd \
    --url 'smtp://MAILSERVER:PORT/' \
    --mail-from 'SENDER' \
    --mail-rcpt 'RECIPIENT' \
    --user USERNAME:PASSWORD \
    -v -T -
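When the message is assembled from variables, printf is a portable way to produce it, since echo does not reliably interpret \n. The following sketch writes a mail.txt-style file in which the headers are separated from the body by one empty line, as the Internet Message Format (RFC 5322) requires. All addresses and texts are placeholders.

```shell
#!/bin/sh
# Placeholder values; replace them with real addresses and text.
SENDER='alice@example.com'
RECIPIENT='bob@example.com'
SUBJECT='test'
BODY='Hello from the command line.'

mail_file=$(mktemp)
# Headers, one empty line, then the body.
printf 'From: %s\nTo: %s\nSubject: %s\n\n%s\n' \
    "$SENDER" "$RECIPIENT" "$SUBJECT" "$BODY" > "$mail_file"
cat "$mail_file"

# The file can then be sent with the curl command above, for instance:
# curl --ssl-reqd --url 'smtp://MAILSERVER:PORT/' \
#      --mail-from "$SENDER" --mail-rcpt "$RECIPIENT" \
#      --user USERNAME:PASSWORD -T "$mail_file"
```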

Quickly Wipe Partition Tables with Disk Dumper

Partition tables can be zapped quickly using dd.

MBR

dd if=/dev/zero of=/dev/sda bs=512 count=1

where:

- /dev/sda is the drive to wipe the partition table for,
- 512 is the number of bytes to write from the start of the disk,
- 1 means writing bs bytes this number of times

The byte count is calculated as 446 bytes bootstrap + 64 bytes partition table + 2 bytes signature = 512 bytes.

GPT

GPT keeps an additional backup table at the end of the device, such that wiping the partition table involves two steps:

- wipe the table at the start of the drive,

- wipe the backup table at the back of the drive

The following commands should accomplish that, assuming 512-byte logical sectors:

dd if=/dev/zero of=/dev/sda bs=512 count=34
dd if=/dev/zero of=/dev/sda bs=512 count=34 seek=$(($(blockdev --getsz /dev/sda) - 34))

where:

/dev/sda is the drive to wipe the partition table for
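The GPT wipe can be rehearsed on a disk image instead of a real drive. The following is a sketch, assuming 512-byte sectors and a 4MiB scratch image, both chosen for illustration. blockdev only works on block devices, so the sector count is derived from the file size with GNU stat instead. conv=notrunc is added because, unlike a block device, a regular file would otherwise be truncated by dd.

```shell
#!/bin/sh
img=$(mktemp)

# A 4MiB scratch "disk" filled with 0xFF bytes, so that wiped areas stand out.
head -c $((4 * 1024 * 1024)) /dev/zero | tr '\000' '\377' > "$img"

sectors=$(( $(stat -c %s "$img") / 512 ))   # plays the role of blockdev --getsz

# Primary GPT: protective MBR + header + 32 sectors of partition entries.
dd if=/dev/zero of="$img" bs=512 count=34 conv=notrunc 2>/dev/null
# Backup GPT: the last 34 sectors of the disk.
dd if=/dev/zero of="$img" bs=512 count=34 seek=$((sectors - 34)) \
    conv=notrunc 2>/dev/null

# Count the bytes that are now zero: expected 2 * 34 * 512 = 34816.
zeroes=$(tr -cd '\000' < "$img" | wc -c)
echo "$sectors sectors, $zeroes zeroed bytes"
```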

Options when Referring to Block Devices by Identifier Fail

On modern Linux systems, partitions are usually referred to via their partition UUID instead of the actual block device file. One problem that will show up sooner or later is that, in order to be able to generate a partition UUID, a block device must have partitions in the first place. Similarly, partitions can be mounted via their disk labels, yet that will fail as well when a disk does not even have a partition table. This case is typical for whole-drive encryption with LUKS, where the absence of a label or partition table is deliberate rather than an oversight.

Assume that the block device /dev/sda is part of a larger storage framework that, when initialized, does not set a marker or create a partition table or a partition on the block device. In that case, the command:

blkid

will fail to list /dev/sda with any UUID. Now, assume there are several block devices in similar situations, such as /dev/sdb, /dev/sdc, etc. When Linux reboots, there is no guarantee that the block device files will refer to the same drives.

To work around this issue, udev rules can be written to match the hard drives at detection time and then create symlinks to the hard drives that remain stable across reboots.

For instance, issuing:

udevadm info -q all -n /dev/sda --attribute-walk

will output all the attributes of /dev/sda, and a few of those can be selected in order to construct a udev rule.

For instance, based on the output of the command, a file is created at /etc/udev/rules.d/10-drives.rules with the following contents:

SUBSYSTEM=="block", ATTRS{model}=="EZAZ-00SF3B0 ", ATTRS{vendor}=="WDC WD40", SYMLINK+="western"

This rule will now match:

- within the block device subsystem,
- model name EZAZ-00SF3B0 as reported by the hardware,
- vendor name WDC WD40

and once matched will create a symbolic link named western within the /dev/ filesystem that will point to whatever hardware device file the kernel generated for the drive.

Now, it becomes easy to mount the drive using fstab because the symlink will be stable over reboots, guaranteeing that the /dev/western link will always point to the correct drive. The line in /etc/fstab would look similar to the following:

/dev/western /mnt/western ext4 defaults 0 0

where /dev/western is the source device symbolic link generated by udev on boot.

Setting Interface Metric

A typical scenario that calls for setting interface metrics is a laptop that has both an Ethernet and a wireless connection established to the local network. Linux does not automatically detect the fastest network connection, such that interface metrics should be set for all network interfaces.

Typically, for Debian (or Ubuntu) Linux distributions, ifupdown is used to manage network interfaces and the ifmetric package can be installed using:

apt-get install ifmetric

By installing the ifmetric package, a new metric option is now available that can be added to configured network interfaces in /etc/network/interfaces or /etc/network/interfaces.d. For instance, one can set the metric to 1 for eth0 (the Ethernet interface) and 2 for wlan0 (the wireless interface), by editing the ifupdown interface file:

iface eth0 inet manual
    metric 1
    mtu 9000

allow-hotplug wlan0
iface wlan0 inet dhcp
    metric 2
    wpa-conf /etc/wpa_supplicant/wpa_supplicant.conf

Now, provided that both eth0 and wlan0 are on the same network, eth0 will be the preferred interface to reach the local network.

Reordering Partitions

Partition numbers can end up skewed after adding or removing partitions, such that the device names (sda1, sda2, etc.) no longer follow the on-disk partition layout. The partition numbers can be reordered using fdisk by entering the expert menu with x and then pressing f to fix the partition order. Afterwards, return to the main menu with r and write the changes with w.

Multiplexing Video Device

One problem with Video4Linux is that multiple processes cannot access the same hardware at the same time. This is particularly problematic for video devices that have to be read concurrently in order to perform several operations, such as streaming and taking a screenshot, where one operation would disrupt the other.

Fortunately, there is a third-party kernel module called v4l2loopback that, on its own, does nothing but create "virtual" v4l devices to which data can be written and then read by other programs.

In order to use v4l2loopback, on Debian the kernel module can be installed through DKMS, by issuing:

apt-get install v4l2loopback-dkms v4l2loopback-utils

thereby ensuring that the kernel module will be automatically recompiled after a kernel upgrade.

First, the module would have to be loaded upon boot, such that the file /etc/modules-load.d/v4l2loopback.conf has to be created with the following contents:

v4l2loopback

Creating the /etc/modules-load.d/v4l2loopback.conf now ensures that the module is loaded on boot, but additionally some parameters can be added to the loading of the kernel module by creating the file at /etc/modprobe.d/v4l2loopback.conf with the following contents:

options v4l2loopback video_nr=50,51 card_label="Microscope 1,Microscope 2"

where:

- video_nr=50,51 will create two virtual V4L devices, namely /dev/video50 and /dev/video51,
- Microscope 1 and Microscope 2 are descriptive labels for the devices.

The goal is for the V4L device at /dev/video0 to be multiplexed to the two virtual V4L devices /dev/video50 and /dev/video51, in order to allow two separate, simultaneous reads from /dev/video50 and /dev/video51.

In order to accomplish the multiplexing, given that v4l2loopback has already been set up, a simple command line suffices, such as:

cat /dev/video0 | tee /dev/video50 /dev/video51

that will copy video0 to video50 and video51.

However, more elegantly and under SystemD, a service file can be used instead along with ffmpeg:

[Unit]
Description=Microscope Clone
After=multi-user.target
Before=microscope.service microscope_button.service

[Service]
ExecStart=/usr/bin/ffmpeg -hide_banner -loglevel quiet -f v4l2 -i /dev/video0 -codec copy -f v4l2 /dev/video50 -codec copy -f v4l2 /dev/video51
Restart=always
RestartSec=10
StandardOutput=syslog
StandardError=syslog
SyslogIdentifier=microscope
User=root
Group=root
Environment=PATH=/usr/bin/:/usr/local/bin/

[Install]
WantedBy=microscope.target

The service file is placed inside /etc/systemd/system and uses ffmpeg to copy /dev/video0 to /dev/video50 and /dev/video51. Interestingly, because ffmpeg is used, it is also entirely possible to apply video transformations to one or the other multiplexed devices or, say, to seamlessly transform the original stream for both.

Retrieve External IP Address

dig -b 192.168.1.2 +short myip.opendns.com @resolver1.opendns.com

where:

192.168.1.2 is the local IP address of the interface that connects to the router, passed to dig's -b option to select the source address of the query

Alternatively, for a single external interface, the -b 192.168.1.2 option can be omitted.

Substitute for ifenslave

Nowadays, Linux no longer needs the ifenslave utility in order to create bonding devices and to add slaves. For instance, the ifenslave Debian package merely contains some helper scripts for integrating with ifupdown. Bonding can instead be managed by writing to files in the sysfs filesystem.

Creating a bonding interface can be accomplished by:

echo "+bond0" >/sys/class/net/bonding_masters

where:

bond0 is the bonding interface to create

Conversely, issuing:

echo "-bond0" >/sys/class/net/bonding_masters

will remove a bonding interface.

Next, slaves to the bonding interface can be added using (assuming bond0 is the bonding interface):

echo "+ovn0"> /sys/class/net/bond0/bonding/slaves

where:

ovn0 is the interface to enslave to the bonding interface bond0

Conversely, issuing:

echo "-ovn0" > /sys/class/net/bond0/bonding/slaves

will remove the interface ovn0 as a slave from the bonding interface bond0.

Ensure Directory is Not Written to If Not Mounted

One trick to ensure that an underlying mount point directory is not written to while nothing is mounted on it is to change its permissions to 000, effectively making the underlying directory inaccessible. Note that processes running as root bypass these permission checks, so the trick only protects against services running as unprivileged users.

This is sometimes useful when a remote CIFS or NFS mount fails, or is not yet available, by the time services are brought up on boot. Without the trick, those services would start writing to the local filesystem instead of the remotely mounted share.

PAM: Permit Execution without Password

The following line:

auth sufficient pam_permit.so

can be prepended to the PAM configuration file of any command or daemon within /etc/pam.d/ in order to allow authentication without a password for that service.

Using the SystemD Out of Memory (OOM) Software Killer

The typical Linux mitigation for OOM conditions is the kernel's "Out of Memory (OOM) Killer", which kills off a process as a last resort in order to prevent the machine from crashing. Unfortunately, the Linux OOM killer has a bad reputation. It either fires too late, when the machine is already too hosed to even kill a process, or it is "really the last resort": it waits too long while heavy processes (desktop environment, etc.) are already running and is not very good at picking the right process to kill.

The following userspace daemons can be used to add an additional OOM killer, for instance to systems within a Docker swarm:

systemd-oomd, oomd or earlyoom

Furthermore, the following sysctl parameter:

vm.oom_kill_allocating_task=1

when added to the sysctl configuration, makes Linux kill the process whose allocation triggered the out-of-memory condition instead of using heuristics to pick some other process to kill.
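One way to persist the parameter is a drop-in file under /etc/sysctl.d/, which is read for every *.conf file in that directory. In this sketch the file name 90-oom-kill-allocating-task.conf and the SYSCTL_D override are assumptions.

```shell
#!/bin/sh
# Persist vm.oom_kill_allocating_task=1 in a sysctl drop-in.
# SYSCTL_D and the file name are assumptions; any *.conf name works.
SYSCTL_D="${SYSCTL_D:-/etc/sysctl.d}"

enable_oom_kill_allocating_task() {
    mkdir -p "$SYSCTL_D" || return 1
    echo 'vm.oom_kill_allocating_task = 1' \
        > "$SYSCTL_D/90-oom-kill-allocating-task.conf"
}
```

After writing the file, sysctl --system loads it without a reboot; sysctl -w vm.oom_kill_allocating_task=1 sets the value for the running kernel only, and cat /proc/sys/vm/oom_kill_allocating_task shows the current value.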

Using the Hangcheck-Timer Module as a Watchdog

The hangcheck-timer module, developed by Oracle and included in the Linux kernel, is meant to reboot a machine when the machine is considered stalled. To do this, the module uses two timers, and when the sum of the delays of both timers exceeds a specified threshold, the machine reboots.

In order to use the hangcheck-timer module, edit /etc/modules and add the module:

# /etc/modules: kernel modules to load at boot time.
#
# This file contains the names of kernel modules that should be loaded
# at boot time, one per line. Lines beginning with "#" are ignored.
# Parameters can be specified after the module name.

hangcheck-timer

to the list of modules to load at boot.

Then create a file at /etc/modprobe.d/hangcheck-timer.conf in order to include some customizations. For example, the file could contain the following:

options hangcheck-timer hangcheck_tick=1 hangcheck_margin=60 hangcheck_dump_tasks=1 hangcheck_reboot=1

where the module options mean:

hangcheck_tick - period of time between system checks (60s default),
hangcheck_margin - maximum hang delay before resetting (180s default),
hangcheck_dump_tasks - if nonzero, the machine will dump the system task state when the timer margin is exceeded,
hangcheck_reboot - if nonzero, the machine will reboot when the timer margin is exceeded

The "timer margin" referred to in the documentation is computed as the sum of hangcheck_tick and hangcheck_margin. In this example, the system would have to be unresponsive for 1 + 60 = 61 seconds in order for the hangcheck-timer module to reboot the machine.
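As a worked example of the sum described above, the following hypothetical helper reads the options line from a modprobe configuration file and prints the resulting threshold in seconds. It assumes that both hangcheck_tick and hangcheck_margin are set explicitly on one options line, as in the example file; the module's built-in defaults are not taken into account.

```shell
#!/bin/sh
# Hypothetical helper: print hangcheck_tick + hangcheck_margin (in seconds)
# from the "options hangcheck-timer ..." line of a modprobe config file.
hangcheck_threshold() {
    tick=0
    margin=0
    # Split the options line into words and pick out the two values.
    for opt in $(grep '^options hangcheck-timer' "$1"); do
        case "$opt" in
            hangcheck_tick=*)   tick=${opt#*=} ;;
            hangcheck_margin=*) margin=${opt#*=} ;;
        esac
    done
    echo $((tick + margin))
}
```

For the example file, hangcheck_threshold /etc/modprobe.d/hangcheck-timer.conf prints 61.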

Trim Journal Log Size

As rsyslog is being replaced by journald on systems running systemd, some journald defaults might not be suitable if the machine is meant to be used as a thin client. Debian, in particular, seems to set a maximal log size that is absurdly large for a thin client.

In order to set the log size, edit /etc/systemd/journald.conf and make the following changes:

[Journal]
Compress=yes
# maximal log size
SystemMaxUse=1G
# ensure at least this much space is available
SystemKeepFree=1G

where:

Compress makes journald compress the log files,
SystemMaxUse is the maximal amount of space that will be dedicated to log files,
SystemKeepFree is the amount of space that journald will ensure is kept free

After making the changes, issue the command systemctl restart systemd-journald in order to apply them; systemctl daemon-reload only reloads unit files and does not make journald reread its configuration.
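Instead of editing journald.conf itself, the same settings can also live in a drop-in file, since journald additionally reads /etc/systemd/journald.conf.d/*.conf. In this sketch the function write_journald_limits, the file name thin-client.conf and the JOURNALD_CONF_D override are hypothetical.

```shell
#!/bin/sh
# Write the journald limits as a drop-in instead of editing journald.conf.
# JOURNALD_CONF_D and the file name thin-client.conf are assumptions.
JOURNALD_CONF_D="${JOURNALD_CONF_D:-/etc/systemd/journald.conf.d}"

write_journald_limits() {
    max_use="$1"     # e.g. 1G
    keep_free="$2"   # e.g. 1G
    mkdir -p "$JOURNALD_CONF_D" || return 1
    cat > "$JOURNALD_CONF_D/thin-client.conf" <<EOF
[Journal]
Compress=yes
SystemMaxUse=$max_use
SystemKeepFree=$keep_free
EOF
}
```

Usage would be write_journald_limits 1G 1G followed by systemctl restart systemd-journald; journalctl --vacuum-size=1G additionally shrinks the already existing logs right away.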

List Connected Wireless Clients

When using the hostapd daemon, the clients can be queried by running the command:

hostapd_cli -p /var/run/hostapd all_sta

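but for that to work, the control interface has to be enabled in hostapd.conf, since hostapd will then create a socket in that directory which hostapd_cli uses to query the status. On most modern distributions /var/run is a symbolic link to /run, so /var/run/hostapd and /run/hostapd refer to the same directory.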

The following changes have to be made:

ctrl_interface=/run/hostapd
ctrl_interface_group=0

where:

/run/hostapd is the directory where the control socket is placed,
0 refers to the root group, such that only members of the root group can access the socket
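To get just a list of connected clients out of the all_sta output, the station addresses can be filtered out. This sketch assumes that all_sta prints each station's MAC address on a line of its own, followed by key=value lines describing that station; the function name list_station_macs is hypothetical.

```shell
#!/bin/sh
# Read hostapd_cli all_sta output on stdin and print only the MAC addresses.
# Assumes each station's address appears alone on its own line.
list_station_macs() {
    grep -Ei '^([0-9a-f]{2}:){5}[0-9a-f]{2}$'
}
```

For example, hostapd_cli -p /run/hostapd all_sta | list_station_macs lists the clients, and appending | wc -l counts them.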

Transforming Symlinks Recursively into Real Files

The following command replaces every symlink named link below /search with a regular copy of the file /path/to/file:

find /search -type l -name link -exec rsync /path/to/file {} \;

where:

-type l tells find to search for symlinks,
-name link tells find to only match files named link,
rsync /path/to/file {} instructs rsync to copy the file at /path/to/file onto each file named link found under /search

This works because, by default, rsync does not write through an existing symlink at the destination: it writes the copy to a temporary file and then renames it over the destination, which replaces the symlink with a regular file.
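A related but different technique, sketched here under the assumption that each symlink should become a copy of the file it itself points to (rather than of one fixed /path/to/file), resolves every link with readlink -f and then copies the target to a temporary file that is renamed over the link, in the same spirit as the rsync command. The function name dereference_links is hypothetical, and file names containing newlines are not handled.

```shell
#!/bin/sh
# Replace every symlink below a directory with a copy of the file it points to.
dereference_links() {
    find "$1" -type l | while IFS= read -r link; do
        target=$(readlink -f -- "$link")
        # Skip dangling links and links to directories.
        [ -f "$target" ] || continue
        # Copy next to the link, then rename over it: rename(2) replaces
        # the symlink itself, not the file it points to.
        tmp="$link.deref.$$"
        cp -p -- "$target" "$tmp" && mv -f -- "$tmp" "$link"
    done
}
```

For example, dereference_links /search turns every symlink below /search into a standalone file, leaving dangling links untouched.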

Mapping Device Mapper Nodes to Block Devices

Sometimes the kernel reports errors by referencing drives through their device mapper nodes (dm-...), but in order to fix the issue, the block device (ie: sd...) would be more useful. The following command lists the device mapper devices together with their kernel block device names, such that the nodes mentioned in kernel messages can be identified:

dmsetup ls -o blkdevname
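The same mapping, including the underlying devices, can also be read straight from sysfs, where each /sys/block/dm-N directory contains dm/name (the mapped name) and a slaves/ directory listing the devices beneath it. In this sketch the function dm_map and the SYS_BLOCK override are hypothetical; stacked setups (for example LVM on top of LUKS) will show another dm-N as a slave.

```shell
#!/bin/sh
# Print each device-mapper node with its mapped name and underlying devices.
# SYS_BLOCK is an assumption so the function can be pointed at a test tree.
SYS_BLOCK="${SYS_BLOCK:-/sys/block}"

dm_map() {
    for dm in "$SYS_BLOCK"/dm-*; do
        [ -d "$dm" ] || continue          # no dm devices: the glob did not match
        name=$(cat "$dm/dm/name")
        # echo $(...) joins the slave names with single spaces.
        slaves=$(echo $(ls "$dm/slaves" 2>/dev/null))
        printf '%s %s -> %s\n' "${dm##*/}" "$name" "$slaves"
    done
}
```

Each output line reads like dm-0 docker -> sda1, giving the node from the kernel message, its mapper name and the disk underneath.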

Running the Filesystem Checker Before Mounting Filesystems

One of the problems with filesystems on Linux is that if they fail to mount on boot, they are marked as failed and all services that depend on them will also fail to start. Typically, the resolution is to run the filesystem checker, repair any damage and only then mount the filesystem. Most of the time, any damage can be repaired; however, the user has very little practical control over the repair, since the decisions boil down to either fixing some damage or not. This is the case for the ext series of filesystems.

With that being said, the following systemd service file will check the filesystem before mounting it, simply by running the filesystem checker fsck with the -y parameter, which makes the filesystem checker repair any damage automatically without prompting the user. Note that, nowadays, passing -y and accepting all prompts is the "normal" way of checking large filesystems, since the storage space is so large that it would be impractical, and of little use, to ask the user whether each filesystem node should be repaired or not.

Even though systemd has a dedicated mount unit type, configured through a [Mount] section, that section does not have any hook that would allow the user to run a command before or after mounting a filesystem. Instead, the following service file uses the oneshot systemd service type and runs the filesystem checker using ExecStartPre.

[Unit]
Description=mount docker
DefaultDependencies=no

[Service]
Type=oneshot
ExecStartPre=/bin/bash -c "/usr/sbin/fsck.ext4 -y /dev/mapper/docker || true"
ExecStart=/usr/bin/mount \
  -o errors=remount-ro,lazytime,x-systemd.device-timeout=10s \
  /dev/mapper/docker \
  /mnt/docker

[Install]
WantedBy=local-fs.target

In order to be invoked as part of the systemd boot when the local filesystems are mounted, the service file uses local-fs.target in the [Install] section, which ensures that the service runs at the same time as the local filesystems are being mounted. Note that appending || true to the fsck command ensures that a non-zero exit status from fsck does not prevent the filesystem from being mounted.
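The unit above ignores every fsck exit status. A stricter variant, sketched here as a hypothetical wrapper, only mounts when fsck reports that the filesystem is clean or was repaired. It relies on the exit codes documented in fsck(8), which form a bit mask: 1 and 2 mean errors were corrected, while 4 and above mean errors were left uncorrected or fsck itself failed. The function names are hypothetical.

```shell
#!/bin/sh
# Decide from an fsck(8) exit code whether mounting is safe.
# 0 = no errors, 1 = errors corrected, 2 = corrected, reboot advised;
# 4 = errors left uncorrected, 8 = operational error, 16 = usage error, ...
fsck_allows_mount() {
    # Any bit other than 1 and 2 indicates a failure.
    [ $(( $1 & ~3 )) -eq 0 ]
}

# Hypothetical wrapper, e.g.: check_and_mount /dev/mapper/docker /mnt/docker
check_and_mount() {
    dev="$1"
    mnt="$2"
    /usr/sbin/fsck.ext4 -y "$dev"
    rc=$?
    if fsck_allows_mount "$rc"; then
        mount -o errors=remount-ro,lazytime "$dev" "$mnt"
    else
        echo "fsck.ext4 returned $rc, not mounting $dev" >&2
        return 1
    fi
}
```

To use it from the unit, the script would end with a call such as check_and_mount "$@", and ExecStart would point at the script in place of both the ExecStartPre and the mount lines.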

Rescan Hotplugged Drives

Even though hotplugging should work via udev and HAL, sometimes newly inserted drives (or removed drives, for that matter) do not show up, and it is necessary to issue a rescan manually. In order to do so, the following command:

for host in /sys/class/scsi_host/host*; do echo "- - -" > "$host/scan"; done

will issue a scan request to every SCSI host on the system, where "- - -" is a wildcard for the channel, target and LUN. This includes ATA drives as well, since the kernel's libata layer presents them through the SCSI subsystem.

After the command is issued a command like lsblk should be able to show any changes.

Shred and Remove Files


There are multiple solutions for wiping files before deleting them, and perhaps the most systematic one is bcwipe due to the algorithms that it implements. Without installing any new tools, the shred tool on Linux should do the job, the only drawback being that shred cannot recurse through a directory tree, such that it has to be called using a tool like find. The following command:

find . -name '*.delete' -exec shred -f -u -n 1 '{}' \;

will perform one pass of random data across each entire file (-n 1), will change the permissions of a file if needed to allow writing to it (-f) and will unlink, that is delete, the file after shredding it (-u).
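When a whole directory tree should disappear, the shredding can be followed by removing the directories left empty. This is a sketch with a hypothetical function name; unlike the command above it shreds every regular file, not only those named *.delete, and it keeps the top directory itself. As the shred documentation cautions, overwriting in place is not guaranteed on journaling or copy-on-write filesystems.

```shell
#!/bin/sh
# Hypothetical helper: shred every regular file below a directory, then
# remove the directories left empty (the top directory itself is kept).
shred_tree() {
    # One pass of random data (-n 1), force writable (-f), unlink afterwards (-u).
    find "$1" -type f -exec shred -f -u -n 1 -- {} + || return 1
    # shred only handles files, so clean up the empty directories bottom-up.
    find "$1" -mindepth 1 -depth -type d -empty -delete
}
```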

Search for Filenames Containing Characters Incompatible with the Windows NTFS Filesystem Names

Based on a Stack Overflow question, the following command uses find to search for filenames that would not be compatible with the NTFS filesystem:

find . -mindepth 1 \( -name '*[<>:"\\|?*]*' -o -name '*[ .]' \)

It matches names containing any of the characters < > : " \ | ? * as well as names ending in a space or a dot, none of which are allowed in Windows file names. The main usefulness of the command is to determine whether files could be transferred from a Linux filesystem to an NTFS filesystem, for example, for the purpose of making backups.

Instantly Remove a File or Directory on Linux EXT

Typically, to remove a directory, one would use tools like rm, rsync or others, but if the filesystem is an EXT variant, such as ext2, ext3 or ext4, there exists a way to remove a directory instantly:

debugfs -w DEVICE -R 'unlink PATH'

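where:

DEVICE is the partition holding the filesystem, expressed as a device node, for example, /dev/sda1,
PATH is the path to a file or directory, relative to the root of the filesystem on DEVICE

The command can be used with mounted filesystems too, but since debugfs modifies the filesystem behind the kernel's back, a reboot is recommended afterwards. Note also that unlink only removes the directory entry and does not adjust inode reference counts, so the inodes and data blocks are not freed and the filesystem should be checked with e2fsck afterwards.

Typical use cases are huge directories full of files, where deleting every single file manually would take days.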

For the contact, copyright, license, warranty and privacy terms for the usage of this website please see the contact, license, privacy, copyright.