
The Principle of Least Surprise

In computer engineering, and more precisely in the design of user interfaces, the "principle of least surprise" is a design principle stating that an interface should behave similarly to previously designed interfaces for the sake of continuity, such that users are not surprised by its behavior.

Documentation vs. Application Programming Interface

One of the giant problems of our times is the lack of "documentation" for seemingly good software, which in turn makes the software itself unusable in many cases. Even though automated tools are available for generating Application Programming Interface (API) documentation, more often than not, developers consider the API itself to be equivalent to documentation. This applies to the many software packages that use Swagger and other tools to generate API documentation, especially where REST and other interoperability is involved. In reality, documentation is a superset of the API and involves much more than an API can provide, such as:

- coding examples,

- case scenarios,

- limitations,

- etc.

that are essential to any developer planning to use the software as a component in their own workflow. Documentation is tough to write and takes dedication to maintain across the changes between releases of a software package, so it is no wonder that developers prefer the output of tools that generate API documentation instead of dedicating time to writing proper documentation.

Either way, as it stands, it is important to remember that "documentation" is not synonymous with "API documentation" and that "documentation" is a superset that includes "API documentation" along with much more.

Contextualizing Storage Technologies by Usage Patterns

One of the ideas discussed in the NAS transformation of a hard-drive enclosure coupled with a mini/micro-PC, and worth mentioning separately, is split storage for different purposes. The idea is that very large, low-cost storage is typically found in formats that are not that technologically advanced; for example, in terms of money per byte, 3.5" spinning-platter disks will always be way cheaper than an NVMe device of comparable size. This means that for extremely large storage quantities, the cost of NVMe-like storage rises far more steeply, whereas a classic spinning drive scales more linearly with the amount of storage it provides. You could go the Richie Rich way and blow all the money on storing everything on NVMe, but given the differences between technologies and their scaling prices, that is just deliberately wasting money for no reason.

Another concern is wear-and-tear: it does not make much sense to use a high-cost, very fast storage medium whose write cycles end up depleted by being hammered with discardable data, such as log files or temporary downloads, that needs to be cleaned up and is fairly expendable anyway. Similarly, hammering spinning disks with write cycles, just like any other medium, diminishes their lifespan, such that for most usage patterns it makes sense to split the operating system from the large spinning drive instead of placing all files on the same storage medium. One critical application where we have found this contextual storage split very useful has been the IoT automatic recording with Desktop notifications project, where the automatically-stored video clips turned out to be extremely volatile while also requiring a high IO usage pattern. The camera would record almost continuously, triggered by motion, but most of the recordings were unimportant or false positives that could just be thrown away, such that it became a form of technological "sacrilege" to abuse expensive storage with minute-by-minute IO from storing camera footage that would end up automatically deleted anyway. In order to not only reduce the load on the storage devices but also to optimize the recordings, it was decided to use a temporary filesystem in RAM to store the recordings, such that only after being curated would the recordings be committed to long-term storage. Not only did that alleviate write cycles, but it was also a speed optimization for saving video streams, because saving video clips to RAM did not create any bottleneck and the videos stored to RAM never ended up corrupt.
So, in some ways, it was determined that an ideal NVR would benefit from a large amount of RAM to temporarily store recordings, which should only be relocated to long-term storage after being curated by a human, for example, following an incident.
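The "stage in RAM, commit after curation" workflow can be sketched in C as follows. This is a minimal illustration under stated assumptions, not the project's actual code: the function name `commit_recording` is hypothetical, and the staging directory is assumed to be a tmpfs mount. Because a tmpfs and a disk are different filesystems, `rename()` fails with `EXDEV` across them, so the sketch falls back to copying the clip and only then unlinking the staged copy.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stdio.h>
#include <unistd.h>

/* Hypothetical helper: move a curated recording from a RAM-backed staging
   directory (assumed to be tmpfs) to long-term storage.
   Returns 0 on success, -1 on failure. */
int commit_recording(const char *staged_path, const char *final_path) {
    /* Same filesystem: a rename writes no file data at all. */
    if (rename(staged_path, final_path) == 0)
        return 0;
    if (errno != EXDEV)
        return -1;

    /* Different filesystems (tmpfs -> disk): copy, then remove the staged file. */
    FILE *in = fopen(staged_path, "rb");
    if (in == NULL)
        return -1;
    FILE *out = fopen(final_path, "wb");
    if (out == NULL) {
        fclose(in);
        return -1;
    }
    char buf[65536];
    size_t n;
    int rc = 0;
    while ((n = fread(buf, 1, sizeof buf, in)) > 0) {
        if (fwrite(buf, 1, n, out) != n) {
            rc = -1;
            break;
        }
    }
    if (ferror(in))
        rc = -1;
    fclose(in);
    if (fclose(out) != 0)
        rc = -1;
    /* Only drop the staged copy once the committed copy is complete. */
    if (rc == 0 && unlink(staged_path) != 0)
        rc = -1;
    return rc;
}
```

Clips that are never curated are simply deleted from the tmpfs, so they never touch the long-term disk at all.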

The next observation for this idea is that various usage patterns require, or would allow, different types of storage, such that the cost of storage can be optimized contextually. Let's say that a Linux operating system filesystem could even run as read-only storage, given that only very few parts of the operating system require write access to the drive, for instance, log files or other temporary files that are ephemeral anyway and could instead be piped over the network to some centralized service that can store and analyze them. There is another problem regarding storage, namely the "myth" of "cheap RAID", a "blasphemous" concept given shortcomings ranging from the unavailability of hardware features like NCQ on some cheap SATA drives up to the Mean-Time-Between-Failures (MTBF) of commercial drives, which is simply trash compared to that of very expensive industrial storage. The idea that you can buy several "cheap" SATA drives, maybe even cheaper external drives, use a screwdriver to pop out the SATA drives and then build a "cheap" RAID simply does not hold, given that these SATA drives match the usage pattern they were designed for, namely as external drives that store some files "now and then", and not, say, as part of a ZFS pool that resilvers all the time! The thing is that even if MTBF could provide a probabilistic model that would allow determining when a drive would fail, MTBF does not account for random failures that, given large-scale production, seem ever more predominant. A random failure in this context would be, for example, a very expensive NVMe that just stops working for no apparent reason, far earlier than its MTBF would suggest and not as a consequence of its usage cycle.
This means that investing in expensive storage and then using it for purposes that destroy the medium for no reason (ie: hammering it with log files that are not even read) artificially raises the price of the project, for the very reason of misunderstanding the usage cases of different technologies. While MTBF is determined under preset environmental conditions, those conditions rarely match the environment where the product is actually used, with lots of external conditions that turn out to have a massive influence on the actual failure rate. For example, when gathering the motivation for dropping RAID solutions in favor of a monolithic build during the NAS transformation, one of the sources of inspiration was a relatively old write-up on the Internet from AKCP showcasing a paper from National Instruments, which claimed that exceeding the thermal design range of a hard-drive by even 5°C would be the functional equivalent of using the drive continuously for more than two years, showing that environmental parameters have a hefty effect on hardware. Interestingly, environmental parameters are never even semantically perceived as part of the usage pattern of hardware, but rather as something that varies wildly between applications.
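To make the probabilistic reading of MTBF concrete: under the common simplifying assumption of a constant failure rate (an exponential lifetime model, which by construction ignores the environmental effects and random failures discussed above), MTBF translates into a probability of failure within an operating time $t$ as shown below. The 1,000,000-hour MTBF is a purely illustrative figure, not taken from any particular datasheet, and one year of continuous operation is taken as 8760 hours.

$$P(\text{failure within } t) = 1 - e^{-t/\mathrm{MTBF}}$$

$$P(\text{failure within one year}) = 1 - e^{-8760/1\,000\,000} = 1 - e^{-0.00876} \approx 0.0087$$

That is, roughly a 0.87% chance per drive per year of continuous operation, a population-level figure that says nothing about when any individual drive will actually fail.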

For Servarr usage, a "download" folder or partition is typically very frequently accessed and also fairly dirty in terms of stability, with lots of broken downloads that need to be cleaned periodically and most of the data within that folder being hit-or-miss à la Schrödinger's cat: a download either succeeds, in which case it can be moved to permanent storage, or it fails, in which case it is a giant wear-and-tear bomb. Fortunately, deleting a file on an operating system does not additionally imply zeroing out the bytes but rather just unlinking the file node from the filesystem tree, such that the data can later be overwritten. Even so, you have to consider that some downloads are large and can reach into the 100s of GB, which is byte data that ends up committed to the drive and, in case the download is not what was expected, those 100s of GB just end up deleted as garbage while burning storage cycles for no useful purpose. A "download" folder hence has very different storage constraints than long-term storage or even the root filesystem of the operating system that runs the software, which means that the underlying technology could, and more than likely should, be different. We would unapologetically recommend storing downloads on a cheap USB thumb drive that is just connected via a USB port. Nowadays, USB thumb drives reach into the terabytes and flash storage is fairly cheap, although not great in terms of performance. Furthermore, downloads should only be a temporary buffer and the total space requirements should only scale with, say, the seeding requirements specified by various trackers; holding onto failed downloads and other garbage just fills up the drive for no purpose. In the case of catastrophic failure of the USB drive, the drive can just be tossed, whereas if the downloads were placed on some expensive NVMe, tossing out the NVMe would not have been too great.
As a fully-working example, for a Servarr stack, one could settle for a read-only root filesystem, an extremely cheap USB drive of, say, up to 1TB to store the "download" folder, and a large 3.5" hard-drive to store the final files after they have been processed by the Servarr stack.
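The periodic clean-up of a "download" folder usually boils down to an age criterion. The following C sketch shows one such criterion under explicit assumptions: the helper name `is_stale` is hypothetical, a file's last-modification time is taken as a proxy for a stalled or abandoned download, and the age threshold is left to the caller. The Servarr tools have their own clean-up settings, which this does not model.

```c
#define _POSIX_C_SOURCE 200809L
#include <sys/stat.h>
#include <time.h>

/* Hypothetical helper: report whether a file in the download folder has not
   been modified for more than max_age seconds as of `now`.
   Returns 1 if stale, 0 if not, -1 if the file cannot be inspected. */
int is_stale(const char *path, time_t now, double max_age) {
    struct stat st;
    if (stat(path, &st) != 0)
        return -1;
    /* difftime() returns (now - st_mtime) in seconds as a double. */
    return difftime(now, st.st_mtime) > max_age ? 1 : 0;
}
```

A clean-up job could then walk the folder (for example with `nftw(3)`) and unlink the entries for which `is_stale()` returns 1, keeping the cheap USB drive from filling up with garbage.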

Another good example hinting that a filesystem should be contextually or semantically partitioned, given its varying usage, is the installer of the Debian Linux distribution, where one of the prompts asks whether all files should go into the same partition and marks that option as the "beginner" choice, which shows that proper planning usually maps various sub-trees of the filesystem to different storage devices with varying properties. One common practice, for example, is to relocate ephemeral files such as log files or temporary files to RAM via tmpfs, and it is clear that the FHS can be partitioned by mounting top-level directories like /var or /tmp on different storage media, even ignoring the typical corporate diskless setups via NFS and NIS, just for the purpose of saving money on storage by not being ignorant about storage media and their recommended usage patterns.

Security Anti-Patterns

The following section describes security anti-patterns exhibited in various environments, where security for the sake of security ends up hampering development or, counter-intuitively, making a system even less secure.

Requiring Specific Permissions or Numeric Group or User IDs

Some open-source software packages have had updates over the years that pertain to security, with the following being the two main highlights:

- software that refuses to run unless dependent files are given a specific set of permissions,

- software that refuses to run in case it runs under a privileged account such as the root account,

with both of these expectations being shameless anti-patterns.

First, requiring permissions for dependent files makes the software dependent on a filesystem that supports permissions in the first place, which does not cover all filesystems but rather a restricted set, most of them meant for multi-user systems.

For example, NTFS does not natively store Linux or POSIX permissions, and unless permission mapping is explicitly configured, an NTFS filesystem mounted on Linux defaults to allowing all users to read-and-write each and every file. Daemons that then require certain files on the filesystem to be given a certain set of permissions are a good instance of the anti-pattern; for example, the MySQL daemon will refuse to read configuration files if they are world-writable, even though the functionality of MySQL itself is not contingent upon the permissions of its configuration files. This leads to a problem where the MySQL health-check script healthcheck.sh will never work for a MySQL daemon running on top of a Linux-mounted NTFS filesystem, even if the MySQL daemon runs within a Docker container, given that bind mounts just pass permissions through. Instead, healthcheck.sh will error with:

```
Warning: World-writable config file '/var/lib/mysql/.my-healthcheck.cnf' is ignored
ERROR 1045 (28000): Access denied for user 'root'@'localhost' (using password: NO)
Unknown error
Warning: World-writable config file '/var/lib/mysql/.my-healthcheck.cnf' is ignored
ERROR 1045 (28000): Access denied for user 'root'@'::1' (using password: NO)
healthcheck connect failed
```

and simply refuse to run at all.
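The kind of startup check criticized here typically amounts to a `stat()` call followed by a test of the mode bits. The sketch below is a hedged illustration of such a check, not MySQL's actual source code: the names `is_world_writable` and `should_load_config` are hypothetical, and the warning text merely mirrors the log above. On an NTFS mount that reports every file as world-writable, the check fires for every file regardless of what the administrator intended.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <sys/stat.h>

/* Hypothetical check: returns 1 if others may write to the file,
   0 if not, -1 if the file cannot be inspected. */
int is_world_writable(const char *path) {
    struct stat st;
    if (stat(path, &st) != 0)
        return -1;
    /* S_IWOTH is the "write permission for others" bit of st_mode. */
    return (st.st_mode & S_IWOTH) ? 1 : 0;
}

/* Hypothetical daemon-side policy: skip world-writable config files with a
   warning. Returns 1 if the file would be loaded, 0 otherwise. */
int should_load_config(const char *path) {
    int ww = is_world_writable(path);
    if (ww == 1) {
        fprintf(stderr, "Warning: World-writable config file '%s' is ignored\n", path);
        return 0;
    }
    return ww == 0;
}
```

Note that nothing in the daemon's actual functionality depends on the outcome; the check only decides whether an otherwise valid file is thrown away.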

Second, requiring that the daemon run under this-or-that privileged or unprivileged account is a matter of relativism given containerization. Typically, software runs as root within Docker containers because it is launched from the very init script that starts with the container; however, the root account within the container does not necessarily map to root outside the container (for instance, with user-namespace remapping or rootless containers), and given that privilege separation is one of the explicit goals of containerization, a requirement that a daemon not run under a particular user, let alone root, is as superfluous as it is an impediment to interoperability with other components.

Lastly, both of these requirements massively decrease the portability of the software itself, with the code having to branch on the platform and, in case the software runs under Linux, add an exception to check whether this-or-that file has certain permissions or whether the daemon runs under a particular user. This is very ugly, is based on assumptions about the layout of the operating system, runs counter to the goals of containerization and defeats the purpose of writing platform-agnostic portable code, just for the sake of cargo-culting some security trope.
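To illustrate the portability cost, here is a hedged sketch of a "refuse to run as root" check; the function name `running_as_root` is hypothetical and the code is not taken from any particular project. Even this trivial rule needs a platform branch, and inside a container `geteuid()` only reports the user ID within the container's own user namespace.

```c
#include <stdbool.h>
#if !defined(_WIN32)
#include <unistd.h>
#endif

/* Hypothetical startup check of the kind criticized above. */
bool running_as_root(void) {
#if defined(_WIN32)
    /* No POSIX user IDs on this platform; a real port would need a separate,
       Windows-specific privilege check, which this sketch does not attempt. */
    return false;
#else
    /* geteuid() returns the effective user ID; 0 is root. */
    return geteuid() == 0;
#endif
}
```

A daemon would call this at startup and exit if it returns true, which in a container launched as root by its init script is exactly the case where the check fires.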

Outsourcing

Outsourcing security has the implicit effect of inheriting all the flaws, habits, incompetences and particularities of the enterprise that security is outsourced to. For example, Cloudflare's "security" is inherited from Project Honey Pot, which long ago used to be a moderate blacklist of IP addresses of machines across the Internet that were either known to be compromised or had participated in a recent attack. Unfortunately, static blacklists have largely fallen out of favor by 2025, even among pirates, because they are unreliable given that an IP address is public information identifying a network endpoint rather than a person. What often happens, for example, is that many regions of the planet have clients behind carrier-grade NAT, where a whole building or even a small settlement is routed through a single IP address, which makes attributing behavior to that IP address very shallow, given that the IP address masks a large number of clients. We have had to lower Cloudflare's security settings many times ourselves because Cloudflare kept blacklisting Chinese IP addresses, but those addresses eventually migrated to other customers who then became blocked.

The same applies to hardware; for example, FortiGate, a hardware-level firewall from Fortinet, a company founded by two Chinese-born brothers, can perform tasks such as deep-packet inspection or work as a transparent, seamless proxy. Ironically, the default blacklist supplied with FortiGate blocks all major human rights organizations, such as transparency.org, for no particular reason. Whilst that should be more or less irrelevant for most purposes, it does not match contexts where the hardware is meant to be used by, say, people who work for human rights organizations, in research or in journalism, because the firewall blocks human rights activism by default.

Similarly, both solutions blanket-ban anonymizing networks such as "tor" or "i2p", or attempt to do so via deep-packet inspection, mainly due to these companies having a contradictory dual stance where they monetize security while at the same time claiming to provide it, such that whilst an anonymizing network like "tor" might be secure, it also eludes the data collection that Cloudflare performs and is thus inconvenient business-wise. There is little reason to block anonymizing networks, except for the fact that they would not provide very good data if intercepted, because in terms of bandwidth these networks are unable to carry out large-scale attacks and would collapse way before. For example, "tor" is not meant for torrenting, because the bandwidth would saturate the network to the point of being unusable, while "i2p" implements torrenting but only within its own network, with no contact to outside trackers.

One global phenomenon that pertains both to computer security and to physical security in general is that, for most of these bulk-security companies, the very top-level organizations are in many cases granted blanket passthroughs to the point of being completely whitelisted. Google, for example, is a company that is mostly whitelisted and bypassed by blacklisting filters because it is deemed too large to be a security risk. This relative judgement has funny consequences, such as Google vans being allowed just about anywhere, or spam filters outright whitelisting Gmail to the point that some percentage of spam ends up coming from Gmail itself, precisely for the bonus of bypassing most automated spam filters via the gratuitous reputation.

Both of these examples go to highlight that outsourcing security, particularly when the checks being implemented are more a matter of preference, is a heavy anti-pattern, and that anyone would be better off implementing their own security policy, protections and response mechanisms. This is why some of the more valuable blocklists are generated dynamically as attacks happen and have a short expiry time per entry, such that they can be used as a first-response buffer without casting too tall a shadow onto IP addresses that are, by definition, not stable.
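A dynamically generated blocklist with a short expiry per entry can be sketched as follows. This is a minimal illustration of the idea, not any provider's implementation: the names are hypothetical, entries live in a fixed-size array searched linearly, addresses are kept as text, and the caller supplies the current time so that expiry can be tested deterministically.

```c
#include <stdbool.h>
#include <string.h>
#include <time.h>

#define MAX_ENTRIES 1024

/* One blocklist entry: an address and the moment its ban expires. */
struct block_entry {
    char addr[46];      /* long enough for a textual IPv6 address */
    time_t expires_at;
};

struct blocklist {
    struct block_entry entries[MAX_ENTRIES];
    size_t count;
};

/* Ban addr for ttl seconds starting at now; banning again refreshes the expiry.
   Returns false if the list is full or the address is too long. */
bool blocklist_add(struct blocklist *bl, const char *addr, time_t now, time_t ttl) {
    if (strlen(addr) >= sizeof bl->entries[0].addr)
        return false;
    for (size_t i = 0; i < bl->count; i++) {
        if (strcmp(bl->entries[i].addr, addr) == 0) {
            bl->entries[i].expires_at = now + ttl;
            return true;
        }
    }
    if (bl->count == MAX_ENTRIES)
        return false;
    strcpy(bl->entries[bl->count].addr, addr);
    bl->entries[bl->count].expires_at = now + ttl;
    bl->count++;
    return true;
}

/* Drop expired entries so that no ban outlives its TTL. */
void blocklist_expire(struct blocklist *bl, time_t now) {
    size_t kept = 0;
    for (size_t i = 0; i < bl->count; i++) {
        if (bl->entries[i].expires_at > now)
            bl->entries[kept++] = bl->entries[i];
    }
    bl->count = kept;
}

/* Check an address against the currently active bans. */
bool blocklist_contains(struct blocklist *bl, const char *addr, time_t now) {
    blocklist_expire(bl, now);
    for (size_t i = 0; i < bl->count; i++) {
        if (strcmp(bl->entries[i].addr, addr) == 0)
            return true;
    }
    return false;
}
```

The short TTL is the design choice that matters: an address behind carrier-grade NAT, or one that migrates to a new customer, drops off the list on its own instead of staying blacklisted indefinitely.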

Copying Large Files

Copying large files is a time-consuming operation when using regular command-line tools like cp, dd, copy, or just copying files via a graphical interface, because these tools are meant to perform a bit-exact copy of a file. The concept of "copying files" reaches back to Kernighan & Ritchie's "The C Programming Language", with the most basic example found in chapter 1.5.1, "File Copying":

```c
#include <stdio.h>

/* copy input to output; 1st version */
int main(void) /* K&R writes main() with an implicit int return type */
{
    int c;

    c = getchar();
    while (c != EOF) {
        putchar(c);
        c = getchar();
    }
    return 0;
}
```

which copies standard input to standard output by reading from stdin character-by-character (one byte at a time, returned as an int so that EOF can be distinguished from any valid character). Most tools, regardless of how sophisticated, follow more or less the same pattern. For example, dd just adds some control in terms of skipping some bytes from the input before starting to copy, or copying an exact number of bytes and then terminating.

Nevertheless, none of these tools account for a situation where two files already exist and the user would just like to synchronize the changes between a source file and a destination file. Imagine that two large files exist, such as two ISO files, and the user would like to copy or move one ISO file onto the other (perhaps the other one is "broken"). When the copy or move operation is started (moving files across filesystems being more or less a matter of copying and then deleting the original), regardless of whether the copying is performed on the console or using the graphical interface (like Explorer in Windows), the operations performed reduce to the simple K&R example, where one ISO file is copied onto the other by copying all bytes.

In case bytes between the source and the destination ISO files are identical, the copying tool completely disregards this detail and just blindly copies the data over, overwriting the existing blocks with, well, the exact same data. While this might have been acceptable many decades ago, when storage was extremely expensive and scarce and files consisted mainly of documents or were short in length, nowadays copying existing data on top of the very same existing data is a very inefficient operation that, aside from the massive waste of time, might also reduce the lifespan of the storage medium.
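A block-level "skip identical data" copy can be sketched in C as follows. The assumptions are stated plainly: a fixed 4 KiB block size, both files local and readable, and a destination that already exists; the function name `copy_changed_blocks` is hypothetical and this is not how any of the tools named in this section are implemented.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

#define BLOCK_SIZE 4096

/* Hypothetical helper: make dst_path identical to src_path while only
   writing the blocks that actually differ. Both files are still read in
   full, but unchanged blocks are never rewritten.
   Returns the number of blocks written, or -1 on error. */
long copy_changed_blocks(const char *src_path, const char *dst_path) {
    FILE *src = fopen(src_path, "rb");
    if (src == NULL)
        return -1;
    FILE *dst = fopen(dst_path, "r+b");   /* destination must already exist */
    if (dst == NULL) {
        fclose(src);
        return -1;
    }

    unsigned char a[BLOCK_SIZE], b[BLOCK_SIZE];
    off_t offset = 0;
    long written = 0;
    size_t n;

    while ((n = fread(a, 1, BLOCK_SIZE, src)) > 0) {
        /* Read the block at the same offset in the destination. */
        if (fseeko(dst, offset, SEEK_SET) != 0) { written = -1; break; }
        size_t m = fread(b, 1, n, dst);

        /* Rewrite only if the block differs or the destination is too short. */
        if (m != n || memcmp(a, b, n) != 0) {
            if (fseeko(dst, offset, SEEK_SET) != 0 || fwrite(a, 1, n, dst) != n) {
                written = -1;
                break;
            }
            written++;
        }
        offset += (off_t)n;
    }

    if (ferror(src))
        written = -1;
    fclose(src);
    if (fclose(dst) != 0)
        written = -1;

    /* Drop trailing bytes in case the destination was longer than the source. */
    if (written >= 0 && truncate(dst_path, offset) != 0)
        written = -1;
    return written;
}
```

This still reads every byte of both files, so it saves writes (and wear) rather than reads; the delta tools discussed below go further by describing the differences compactly, so that the source does not even have to sit next to the destination.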

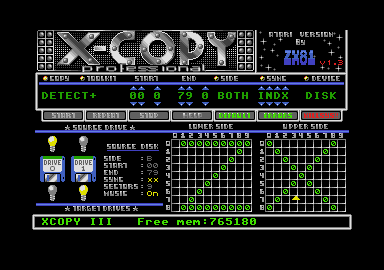

Curiously, many decades ago a cracking utility named X-Copy appeared on the Commodore Amiga scene that boasted the capability of performing sector-exact copies between two floppy disks.

The utility of such a program pertained to recovering data from broken media or copying diskettes protected by software copy protection that would not survive copying the diskette the regular way. X-Copy had a set of features with which it could skip identical sectors and perform exact copies of sectors by hashing and re-reading the sectors until they were identical, and it quickly became the reference tool for copying diskettes, to the point that everyone had to have a copy of X-Copy.

In modern times, where storage is abundant and files can be large, one can think of the problem in terms of streams, where not only would there be bit-by-bit similarities between two large files, but entire sequences or sub-sequences of bits between a source and a destination would be identical, such that copying over data that already exists results in a waste of time and burns through the life-cycle of the storage medium.

While the Commodore Amiga came and left like an alien landing on the planet and then vanishing, many years later a tool called rsync came to be, as if nothing based on the same principles had ever existed, which manages to compute the differences between a source and a destination and then only copy over those differences, without copying all the data if it already exists on the destination. CVS, the predecessor of Subversion, itself a predecessor of the more modern Git, did not have the capability of telling the difference between two binary files, and Subversion was among the first source-code management tools that managed to only store binary differences between revisions, such that when Subversion came out, CVS was abandoned quickly, because repositories hosted on CVS would grow by a full copy of each binary file every time it was committed (in fact, it became a rule of "good practice" not to commit binary data to CVS but to keep it separate, with only source code being committed to CVS). At the same time, the cracking scene promoted tools such as bsdiff, a tool that is able to create patches by comparing two files and then generating a binary delta between them, very much like the simple diff tool does for source code. Torrents are a great technological showcase with a built-in awareness of the layout of a file, such that large files are transferred by segmenting the file into pieces and then requesting pieces of the file from peers, thereby speeding up the operation overall by eliminating single points of failure where the source might stall or go away.
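What makes rsync's approach practical on large files is a cheap "rolling" checksum that can slide along a file one byte at a time without rehashing the whole window. Below is a C sketch of the weak checksum described in Tridgell and Mackerras' rsync technical report, with both sums taken modulo $2^{16}$; real rsync pairs it with a strong hash to confirm candidate matches and differs in implementation details, so the names and structure here are illustrative only.

```c
#include <stddef.h>
#include <stdint.h>

/* Weak rolling checksum over a window of `len` bytes, following the
   a/b sums of the rsync algorithm (all arithmetic modulo 2^16). */
struct rolling {
    uint32_t a;    /* sum of the bytes in the window */
    uint32_t b;    /* bytes weighted by their distance to the window end */
    size_t len;    /* window length */
};

/* Compute the checksum of data[0 .. len-1] from scratch. */
struct rolling rolling_init(const unsigned char *data, size_t len) {
    struct rolling r = {0, 0, len};
    for (size_t i = 0; i < len; i++) {
        r.a += data[i];
        r.b += (uint32_t)(len - i) * data[i];
    }
    r.a &= 0xffff;
    r.b &= 0xffff;
    return r;
}

/* Slide the window by one byte: drop `out` at the front, append `in` at the end.
   a' = a - out + in;  b' = b - len * out + a'  (both modulo 2^16). */
void rolling_roll(struct rolling *r, unsigned char out, unsigned char in) {
    r->a = (r->a - out + in) & 0xffff;
    r->b = (r->b - (uint32_t)r->len * out + r->a) & 0xffff;
}

/* Combined 32-bit value s = a + 2^16 * b. */
uint32_t rolling_digest(const struct rolling *r) {
    return r->a | (r->b << 16);
}
```

In rsync, the receiver sends such checksums for fixed-size blocks of its copy of the file, and the sender slides the window over its own version byte by byte, looking up each digest to find blocks the receiver already has; only the non-matching data travels over the wire.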

With all things considered, all these tools had the capability to create a difference file between two binary files and only store or apply the difference such that already existing blocks that were the same between a source and destination would not have to be copied or updated in any way.

To this date, it is quite surprising that the notion of "copying files" has not been beefed up and that "innovation" in this area remains moot, when the history has been established and there exists a theoretical background as well as reference tools like rsync that are able to work with differences, whether binary deltas or entire source-destination differences, in order to minimize the expenditure and time spent copying files.

Windows, for example, regardless of its hyped-up releases of Windows 10 and then 11, does not innovate on this topic at all, and the "file copy" operation is the same as it ever was since "The C Programming Language" by Kernighan & Ritchie. Copying a large file on top of another large file on Windows, even if the files are very similar on a binary level, is a dumb operation where Windows sets a cursor at the beginning of the source file and then churns all the way to the end, copying everything from the source to the destination while disregarding any similarity between the files.

Luckily, there are ways to perform partial copies between two files manually, by comparing them and generating file differences that can then be applied on top of the destination, such that only the differences are changed. The tools worth mentioning are:

- bsdiff, used for the entirety of the cracks section on our website,

- rdiff,

- xdelta,

- xdelta3, just like xdelta but more cross-compatible by implementing VCDIFF-compatible patches.

xdelta3 is perhaps the most recent and is also available on Windows, where it is popular in the ROM hacking scene where people modify games. Using these tools is very similar across the board, with the workflow being along the lines of first creating a patch between two files and then applying the patch to the destination file.

For the purpose of copying over a large binary file, the procedure can then be reduced to:

- assume two large binary files exist, $S$ and $D$, where $S$ is the source and $D$ is the destination file, with the user wanting to copy $S$ on top of $D$,

- generate a file that contains the difference necessary to transform the destination file $D$ into the source file $S$, and let that difference be $\Delta$, such that $\Delta = S - D$,

- apply the difference $\Delta$ onto file $D$ in order to obtain a modified file $D'$ that represents the current contents of file $D$ but with the difference from file $S$ applied to it, or, in other words:

$$D' = D + \Delta = D + (S - D) = S$$

which describes theoretically the working mode of these tools. There are some properties here, namely:

- the difference file $\Delta$ transforms file $D$ into $D'$, where file $D'$ contains some subset of data from file $S$,

- iff. files $S$ and $D$ are the same size, the difference function can be reduced to a simple byte-wise subtraction between the bytes of files $S$ and $D$, or, in other words, the difference between the files $S$ and $D$ represents a set of bytes $\Delta_i$ where each byte is subtracted linearly between the source and the destination, $\Delta_i = (S_i - D_i) \bmod 256$; applying the patch then implies performing the symmetric equivalent of subtraction, namely adding the bytes $\Delta_i$ to $D$ in order to obtain $D'_i = (D_i + \Delta_i) \bmod 256 = S_i$, with the mention that any byte difference $\Delta_i$ that is equal to zero for a given index $i$ will be skipped when the patch is applied (this represents the actual gain, in terms of performance, given that this operation is skipped and no data is transferred),

- for files identical in size, the time complexity of generating a patch file is just a linear progression that scales with the number of bytes, or $O(n)$ where $n$ represents the number of bytes,

- looking at the variables, the source file $S$, the destination file $D$ and a patch file $\Delta$, it is interesting that after the patch file $\Delta$ is generated, the source file $S$ becomes a free variable for applying the patch $\Delta$, and the only other variable bound for the patching operation is the destination file $D$; this means that the source file can even be discarded, but the current file $D$ to patch must remain the same until the patch $\Delta$ is applied.
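For the equal-size case, the byte-wise scheme can be sketched directly in C. Writing the source bytes as `src` and the destination bytes as `dst`, the delta stores `(src[i] - dst[i]) mod 256`, and applying it adds each non-zero entry back onto the destination in place, skipping zero entries. The function names are hypothetical, and the buffers are in memory rather than on disk to keep the example short.

```c
#include <stddef.h>

/* Compute delta[i] = (src[i] - dst[i]) mod 256 for two equal-size buffers.
   Returns how many bytes differ, i.e. how many delta entries are non-zero. */
size_t make_delta(const unsigned char *src, const unsigned char *dst,
                  unsigned char *delta, size_t n) {
    size_t changed = 0;
    for (size_t i = 0; i < n; i++) {
        /* Conversion to unsigned char reduces the difference modulo 256. */
        delta[i] = (unsigned char)(src[i] - dst[i]);
        if (delta[i] != 0)
            changed++;
    }
    return changed;
}

/* Apply the delta in place: dst[i] = (dst[i] + delta[i]) mod 256.
   Zero entries are skipped, so unchanged bytes are never written.
   Returns the number of bytes actually written. */
size_t apply_delta(unsigned char *dst, const unsigned char *delta, size_t n) {
    size_t written = 0;
    for (size_t i = 0; i < n; i++) {
        if (delta[i] == 0)
            continue;
        dst[i] = (unsigned char)(dst[i] + delta[i]);
        written++;
    }
    return written;
}
```

Once the delta exists, `apply_delta` needs only the destination and the delta, mirroring the observation that the source becomes a free variable. Real tools such as xdelta3 or bsdiff use more elaborate encodings (copy/add instructions, compression) so that they also handle files of different sizes; this sketch only models the equal-size special case.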

One could go on, but the gains seem clear, so here is an example instantiation: restoring a file from a previously stored BtrFS snapshot. Namely, when a snapshot exists, restoring a large file is, from the point of view of BtrFS, just a matter of copying it over from the snapshot folder; however, since the file is presumably very large (assume, for example, a terabyte-large file, or a disk image of hundreds of gigabytes) and we would like to restore the file without copying it entirely, a difference delta file is first created between the current file and the snapshotted or backed-up file:

```shell
xdelta3 -e -s /mnt/volume/S /mnt/volume/.snapshots/20250811/S /tmp/dS.xdelta3
```

where:

- /mnt/volume/S, passed via -s, is the path to the existing file onto which the user would like to copy the snapshotted file, and serves as the reference that the delta is computed against,

- /mnt/volume/.snapshots/20250811/S is the path to the file within the BtrFS snapshot volume that the user wants to copy,

- /tmp/dS.xdelta3 is the path to a file where the difference between /mnt/volume/S and /mnt/volume/.snapshots/20250811/S should be stored.

After the file /tmp/dS.xdelta3 is generated by the xdelta3 tool, it can be applied onto /mnt/volume/S, which is the destination file onto which the user wanted to copy the snapshotted or backed-up file /mnt/volume/.snapshots/20250811/S. Note that xdelta3 reads the file passed via -s while writing the reconstructed file as a separate output, so the output has to be a different file (here, the example name /mnt/volume/S.restored) that is afterwards moved over /mnt/volume/S:

```shell
xdelta3 -d -s /mnt/volume/S /tmp/dS.xdelta3 /mnt/volume/S.restored
```

where:

- /mnt/volume/S is the file that the delta was computed against,

- /tmp/dS.xdelta3 is the file containing the delta difference,

- /mnt/volume/S.restored receives the reconstructed contents of the snapshotted file.

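Otherwise, transferring two large directory trees whilst minding data that might already have been transferred is covered by tools such as rsync, which uses its delta-transfer algorithm to update files based on their differences as long as whole-file mode (-W, --whole-file) is not in effect; note that rsync defaults to whole-file mode when both the source and the destination are local paths, so --no-whole-file has to be passed explicitly to get delta transfers between two local directories:

```shell
rsync -vaxHAKS --partial --append-verify --delete /source /destination
```

where:

- /source is a source directory,

- /destination is a target directory.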

The parameters --partial and --append-verify ensure that file transfers can be resumed in case they are interrupted: the --partial flag keeps partially transferred files (for example, large files whose transfer was interrupted because "rsync" was closed or crashed), such that when the transfer is issued again, --append-verify resumes each such file by appending the missing data to its end and then verifies the checksum of the whole file.

Note that in append mode rsync does not look for differences within the partially transferred file; it simply uses the length of the partial file to determine where to continue copying. With --append-verify, in case the partially transferred file differs from the corresponding part of the source, the final verification fails and rsync resends the file using a normal, non-appending transfer. An even more dangerous option is --append, which blindly appends the rest of the source file to the end of the partially transferred file without verifying the data that was already there, producing non-equal copies in case either the source or the destination has changed in the meanwhile, which is why --append-verify is preferred. Both options also skip any file that is already at least as large on the receiving side as on the sending side, so they are only safe for files that are known to be growing.
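The append-then-verify idea can be sketched in Python for two local files. This is a simplified model of the behaviour described above, assuming a SHA-256 hash over whole files as the verification checksum; it is not rsync's code or wire protocol, and `resume_copy` is a hypothetical name.

```python
import hashlib
import os

CHUNK = 1 << 20  # read and write 1 MiB at a time


def _sha256(path: str) -> str:
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(CHUNK), b""):
            h.update(chunk)
    return h.hexdigest()


def resume_copy(src: str, dst: str) -> str:
    """Resume an interrupted copy by appending, then verify the whole file.

    Returns "skipped", "appended" or "recopied".
    """
    src_size = os.path.getsize(src)
    dst_size = os.path.getsize(dst) if os.path.exists(dst) else 0
    if os.path.exists(dst) and dst_size >= src_size:
        # Like rsync's append modes, a destination that is already at least
        # as large as the source is left alone, even if its contents differ.
        return "skipped"
    with open(src, "rb") as s, open(dst, "ab") as d:
        s.seek(dst_size)  # continue exactly where the partial file ends
        for chunk in iter(lambda: s.read(CHUNK), b""):
            d.write(chunk)
    if _sha256(src) == _sha256(dst):
        return "appended"
    # Verification failed: the partial data did not match the source,
    # so fall back to copying the whole file again.
    with open(src, "rb") as s, open(dst, "wb") as d:
        for chunk in iter(lambda: s.read(CHUNK), b""):
            d.write(chunk)
    return "recopied"
```

The "skipped" branch deliberately reproduces the weakness mentioned above: a destination of equal size but different content is never corrected by an append-style resume.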

TeraCopy on Windows manages to account for file pieces and then uses multiple threads in order to transfer larger files, more than likely with the hope that the copying threads will be distributed among CPUs or cores such that, just like with torrents, a single thread being descheduled by the operating system does not stall the whole transfer and there is no single point of failure. However, as far as we know, TeraCopy does not implement delta transfers, so when it comes to already existing files, TeraCopy more than likely behaves like rsync in append mode in order to implement its "resume" feature, namely by appending to the end of the file in case the partially transferred file matches the source up to its length.
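The piece-wise, multi-threaded approach described above can be sketched in Python with positional reads and writes, so that each worker thread copies its own piece of the file at its own offset. This is a hypothetical illustration of the idea, not TeraCopy's implementation; it assumes a POSIX system (for `os.pread` and `os.pwrite`), and the piece size and worker count are arbitrary.

```python
import os
from concurrent.futures import ThreadPoolExecutor

PIECE = 4 * 1024 * 1024  # 4 MiB pieces, an arbitrary choice


def parallel_copy(src: str, dst: str, piece: int = PIECE, workers: int = 4) -> None:
    """Copy src to dst piece by piece, with several threads working concurrently."""
    size = os.path.getsize(src)
    sfd = os.open(src, os.O_RDONLY)
    try:
        dfd = os.open(dst, os.O_WRONLY | os.O_CREAT, 0o644)
        try:
            os.ftruncate(dfd, size)  # pre-size the target so pieces can land in any order

            def copy_piece(offset: int) -> None:
                end = min(offset + piece, size)
                while offset < end:
                    data = os.pread(sfd, end - offset, offset)
                    if not data:
                        raise EOFError(f"{src} shrank during the copy")
                    view = memoryview(data)
                    while view:  # os.pwrite may write fewer bytes than requested
                        written = os.pwrite(dfd, view, offset)
                        view = view[written:]
                        offset += written

            with ThreadPoolExecutor(max_workers=workers) as pool:
                # list() waits for every piece and re-raises any worker error
                list(pool.map(copy_piece, range(0, size, piece)))
        finally:
            os.close(dfd)
    finally:
        os.close(sfd)
```

Threads mainly help when the storage or the network can serve several requests at once; on a single spinning disk, copying pieces in parallel may even be slower because of the extra seeking.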

Historically, at the inception of the WWW, download tools like "wget" (or, later, "curl") did not even have a way to resume partial transfers, which made downloading a nightmarish operation when you were on dialup and the phone line accidentally hung up, such that you had to dial the ISP again and restart the transfer from the very beginning. HTTP has since gained Range Requests (seeking into a file and transferring only part of it) and Chunked Transfer Encoding (sending a response in pieces), and wget got its -c parameter allowing a large transfer to be resumed. What is interesting about wget compared to TeraCopy or rsync is that resuming with wget relies on first checking the size of the local file, asking the HTTP server for the size of the source file and then performing some arithmetic to determine from which offset the source file should be requested in order to resume the transfer. However, to this date, there is no rsync-style block hashing built into the HTTP protocol, so compared to rsync, wget relies only on sizes: if the remote file is the same size as or smaller than the local file, wget refuses to continue, and if it is larger, wget simply downloads the missing bytes and appends them to the local file, which produces a garbled file in case the remote file has changed in the meantime (if the server does not support ranges at all, wget restarts the download from scratch). This means that wget has the weakest resume of all the tools, because its resume operation is contingent upon an HTTP protocol that only answers requests for pieces of files, such that wget has no way to check the consistency of the transfer.
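The size arithmetic behind a wget -c style resume can be sketched as a small Python decision function. It follows the behaviour summarized above; the function name and return values are hypothetical, and a real client would also have to check the server's reply (for example a 206 Partial Content status) before appending anything to the local file.

```python
from typing import Optional, Tuple


def plan_resume(local_size: int, remote_size: Optional[int],
                server_supports_range: bool) -> Tuple[str, Optional[str]]:
    """Return (action, Range header value) for a size-based resume.

    Only sizes are compared: nothing here can tell whether the bytes
    already on disk still match the remote file.
    """
    if local_size == 0:
        return ("download", None)           # nothing to resume
    if not server_supports_range:
        return ("restart", None)            # start over and overwrite the local file
    if remote_size is not None and remote_size <= local_size:
        return ("refuse", None)             # "continuing" is not meaningful
    # Bytes 0..local_size-1 are already on disk, so ask for the rest.
    return ("continue", f"bytes={local_size}-")
```

For example, `plan_resume(40, 100, True)` asks for `bytes=40-`, and the 60 bytes that come back are appended blindly, which is exactly why a changed remote file leads to a garbled download.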

Maybe later versions of HTTP will implement delta transfers; current development seems geared in that direction with the notion of distributed websites that leverage torrent technology for transfers. One very cute free tool for watching movies is Popcorn Time, which works a treat for streaming movies directly over torrents, because all the client has to do is transfer the movie file pieces in linear and contiguous slabs of data while prioritizing the pieces closer to the current playback position over the pieces found later in the file.
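The prioritization idea can be sketched as ordering the missing pieces so that those at or after the playback position come first, nearest first, followed by the ones behind it. This is a hypothetical illustration of the principle, not Popcorn Time's actual piece picker; a real client would also have to account for peer availability and buffering.

```python
from typing import Iterable, List


def piece_order(num_pieces: int, cursor: int, have: Iterable[int] = ()) -> List[int]:
    """Return missing piece indices, most urgent first for streaming playback."""
    owned = set(have)
    missing = [i for i in range(num_pieces) if i not in owned]
    # Pieces at or after the cursor sort before pieces behind it (False < True);
    # within each group, the pieces nearest to the cursor come first.
    return sorted(missing, key=lambda i: (i < cursor, abs(i - cursor)))


print(piece_order(8, cursor=3, have={4}))  # [3, 5, 6, 7, 2, 1, 0]
```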

Separation of Concerns

Separation of concerns is a design principle that consists in isolating the different parts of a system into sub-units so that each sub-unit can be managed individually. The notion is attributed to Dijkstra, though more than likely it should not be credited to a single person, because the concept applies to any engineering system, even electronics, and historically represents a shift in design from monolithic all-in-one builds to modular systems.

For instance, even though this is seamless to the user, the Linux kernel does not include a process starter such as init; the kernel is responsible for driving the hardware through its drivers and managing resources, and then passes control to user-land programs, starting with init. In that sense, the kernel is separate from the actual Linux operating system.

Separation of concerns is applicable at any system level and is frequently mentioned in connection with programming where, first with subroutines and later with object-oriented programming, some decoupling is expected: functional units, be they functions or classes, are expected to fulfill a single given purpose instead of the entire code being sprawled out into one continuous stream of instructions.

Benefits of this design principle range from ease of maintenance, through economics, up to security, because the compartmentalization offers the opportunity to address issues in individual sub-units of a system instead of requiring the expertise to overhaul the entire system as one monolithic block.
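As a small illustration of the principle at the level of functions, the following Python sketch splits a toy report into parsing, computation and presentation, each of which can be tested and changed independently. The input format ("name size" per line) and the function names are assumptions made for the example.

```python
from typing import Dict


def parse_sizes(text: str) -> Dict[str, int]:
    """Parsing concern: turn "name size" lines into a mapping."""
    sizes: Dict[str, int] = {}
    for line in text.splitlines():
        if line.strip():
            name, size = line.split()
            sizes[name] = int(size)
    return sizes


def total_size(sizes: Dict[str, int]) -> int:
    """Computation concern: knows nothing about text formats or output."""
    return sum(sizes.values())


def render_total(total: int) -> str:
    """Presentation concern: only decides how the result looks."""
    return f"total: {total} bytes"


report = render_total(total_size(parse_sizes("a.img 10\nb.img 20\n")))
print(report)  # total: 30 bytes
```

Swapping the input format or the output style only touches one function, which is the maintenance benefit described above in miniature.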

Micro-Optimizations, Number Crunching and Memory Management

Historically, the Gentoo thesis has been that instead of installing pre-compiled binaries that are suboptimal for the platform that Gentoo is being installed onto, the user should recompile everything they can on the target platform, with optimizations specific to that environment. The thesis would have it that, for example, software compiled with SSE optimizations would greatly outperform the same software compiled without them. Linux From Scratch (LFS) went the same way, but even more rugged, by not even providing a framework to recompile packages and instead requiring users to perform the compilation themselves, assisted by documentation. In reality, when all the micro-optimizations were added up, systems compiled locally for a target platform tended to be only marginally faster than generic binaries, and in the case of LFS, the loss of an actual package manager, of a system that would be easily upgradeable, of a way to centrally manage configurations, etc., greatly outweighed the benefits offered by locally-compiled binaries. In Gentoo's case, the time spent compiling software was not made up for by the gains in individual packages, such that whilst local optimizations were made, the overall speedup was negligible in terms of the productivity the user could get out of the system.

There is always a fight between, say, "modern" languages and historical languages such as C, many times just due to fame, difficulty or nostalgia; however, people forget that C itself was a very "modern" language for its time. C was created by Dennis Ritchie at Bell Labs (and popularized by the book he co-wrote with Brian Kernighan), and its portability, being a hardware-agnostic programming language that could run on many platforms, became one of its defining traits. At the time, "programming" involved learning a bunch of very different languages, each tied to a hardware company that produced its own chips and hence also had its own instruction set; manuals were scarce and difficult to obtain, and there was no widely available Internet to look up extra documentation, so the whole process was a mess. With C, on the other hand, similarly to languages and frameworks that produce portable programs running on many platforms, for instance Java, JavaScript and .NET, programmers could write a program once and then use different compilers on different platforms to compile the very same code.

One important argument to remember is that, more often than not, the mathematical principles that govern programming greatly outweigh number crunching. We make an argument in the politics section on supercomputing that an inefficient algorithm on very fast hardware might be on par with an efficient algorithm on slow hardware when it comes to the time required to complete the program. Similarly, number crunching tends to tie a program more and more to the operating system and then to the hardware itself, such that micro-optimizations acquire a locality and might fail to run either efficiently or at all when the environment is swapped. The point here is that one should rather rely on fundamental principles and attempt to write code generically, without resorting to oddities or particularities of the environment, even if not leveraging those characteristics leads to a slower execution time for given code segments. Just like with compiling using local optimizations, the loss of portability, the loss of maintainability, the difficulty of upgrading or making changes and the mistakes that can easily be introduced all greatly outweigh any benefits of micro-optimized counterparts. One example that we have experienced fully has been the SecondLife LSL vs. OpenSim LSL bug marathon, where it turns out that one of the difficulties in implementing a third-party LSL compiler is that not only do the language semantics have to be ported over, but in order to achieve compatibility even the bugs and inconsistencies of the original implementation would have to be reproduced. These inconsistencies, which have actually been leveraged by people creating scripts on either the SecondLife or the OpenSim platform, tend to make scripts incompatible between the two environments because the scripts rely on micro-optimizations that tie them to one environment or the other.
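To put rough numbers on the algorithm-versus-hardware argument, assume that running time is proportional to the operation count (constant factors ignored) and compare a quadratic algorithm with an $n \log n$ one for $n = 10^6$ elements:

```latex
n = 10^{6}: \qquad n^{2} = 10^{12}, \qquad n \log_2 n \approx 10^{6} \times 19.93 \approx 2 \times 10^{7}

\frac{n^{2}}{n \log_2 n} = \frac{n}{\log_2 n} \approx \frac{10^{6}}{19.93} \approx 5 \times 10^{4}
```

Under these assumptions, the quadratic algorithm would need hardware roughly fifty thousand times faster just to break even, a gap that micro-optimizations are unlikely to close.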

If the program to be created is an entertainment program, like a game, then bugs are mostly expected, reported and solved; however, one thing that you do not want to do is to trust a floor of programmers working on a huge code-base with manual memory management to create software for anything critical, like banking or ballistic missile control.

sudo vs. su

One of the differences between su and sudo is that su (commonly expanded as "switch user" or "substitute user") can be used to switch the user shell to a different user, whilst sudo can be used to execute specific pre-configured commands with a different privilege set.

Otherwise, the utilities are similar in terms of functionality, but due to the security tulip-fever, "sudo" is preferred over "su" because "sudo" requires users to type in their own password whilst "su" requires the password of the target account; however, this is of course contingent on the /etc/sudoers file being configured to allow a non-privileged user to use "sudo" in the first place, along with all the necessary permissions, as restrictive or permissive as they might be. Furthermore, a lot of administrators edit /etc/sudoers in order to grant a user full access to any command, which defeats the purpose of the security measure, because iff. that non-vital user account is compromised, then running any binary or even becoming "root" is possible. A different source of security fluff stems from inexperienced users doing nasty stuff as root, i.e. rm -rf / or involuntary fork bombs, where clearly the user should not have root permissions (or they are experimenting on their own, which is fine!).

Most examples sprawled across the Internet handle the issue of privileged account requirements wrongly by polluting their examples with "sudo" written in front of commands that require superuser privileges. It is much cleaner to instead tell users that the commands need to be executed as root, because otherwise the examples break for anyone who does not use sudo, for instance on systems where sudo is not installed or not configured for that user, and the prefix is pointless for a user that is already logged in as root. Either way, such a fine-grained fragmentation of privilege separation, with some commands runnable without privileges and others, for example in the same command pipe, requiring superuser privileges, is really not required; the user can just elevate to superuser privileges for the duration of whatever procedure needs to be carried out, followed by de-escalating the privileges when the procedure is completed.

Unlike "su", "sudo", although it can also spawn a shell (via sudo -s or sudo -i), is built around a configuration that allows fine-grained access to executables, groups and users, such that "sudo" is best used in environments where certain processes require running a binary that would otherwise be outside the scope of those processes. For example, a webserver like Apache might need to execute a binary that Apache itself does not have access to, a scenario where the /etc/sudoers file could be updated to allow the Apache user partial access to the required binary. "su", by contrast, could not even fulfill the purpose described above because it has no notion of "partial permissions" at all; "su" just spawns a shell as the specified user (root, if none is specified) and uses that shell to execute the command.

It is actually a simple decision tree to remember:

- Do you need to become the superuser in order to execute commands that will affect the entire system?

  - use su to become the superuser

- Do you have a program or daemon that needs to execute a binary for which you do not want to set the binary and the daemon itself to the same group and allow group execute permissions?

  - use sudo and configure /etc/sudoers appropriately
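Relating back to the advice of telling users that a procedure must be run as root, rather than prefixing every command with "sudo", a script can simply check that it is running as root and stop with a clear message otherwise. A minimal Python sketch, assuming a POSIX system where `os.geteuid()` is available; the function names are hypothetical.

```python
import os
import sys
from typing import Optional


def is_superuser(euid: Optional[int] = None) -> bool:
    """True when the effective user id is 0, i.e. the process runs as root."""
    if euid is None:
        euid = os.geteuid()  # POSIX only
    return euid == 0


def main() -> int:
    if not is_superuser():
        print("This procedure must be run as root, e.g. from a shell obtained with su -.",
              file=sys.stderr)
        return 1
    # ... the privileged steps of the procedure would go here ...
    return 0
```

A script built this way would end with `sys.exit(main())`, and it behaves the same whether the user became root through su or through sudo.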

Sleeping Efficiently

Depending on the environment, waiting for a long time can be achieved in various ways. One of the typical ways is to call the sleep(3) function from unistd.h in order to block execution for a very long time. Similarly, on the command line, the sleep command can be invoked with the infinity parameter, as in sleep infinity. However, waiting indefinitely with sleep is inefficient on platforms that support the suspension of processes or threads. On POSIX / Linux systems, the SIGSTOP and SIGCONT signals can be used to suspend a process and then resume it at a later time.
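To illustrate suspension, the following Python sketch (POSIX only) stops a child process with SIGSTOP, confirms through `waitpid()` that the kernel reports it as stopped, and then resumes it with SIGCONT. The `sleep 30` child stands in for any long-running process and the function name is hypothetical; a stopped process is simply not scheduled until SIGCONT arrives.

```python
import os
import signal
import subprocess
from typing import Tuple


def suspend_and_resume(pid: int) -> Tuple[bool, bool]:
    """Stop `pid` with SIGSTOP, then resume it with SIGCONT (child processes only)."""
    os.kill(pid, signal.SIGSTOP)
    _, status = os.waitpid(pid, os.WUNTRACED)   # returns once the child has stopped
    stopped = os.WIFSTOPPED(status)
    os.kill(pid, signal.SIGCONT)
    _, status = os.waitpid(pid, os.WCONTINUED)  # returns once the child has resumed
    continued = os.WIFCONTINUED(status)
    return stopped, continued


child = subprocess.Popen(["sleep", "30"])
try:
    print(suspend_and_resume(child.pid))  # (True, True)
finally:
    child.terminate()
    child.wait()
```

From a shell, the same effect is obtained with kill -STOP and kill -CONT on the process identifier.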